CITATION

A.W. Bergman, D.B. Lindell, & G. Wetzstein, “Deep Adaptive LiDAR: End-to-end Optimization of Sampling and Depth Completion at Low Sampling Rates”, IEEE Int. Conference on Computational Photography (ICCP), 2020.

BibTeX

    @article{Bergman:2020:DeepLiDAR,
      author={Alexander W. Bergman and David B. Lindell and Gordon Wetzstein},
      journal={Proc. IEEE ICCP},
      title={{Deep Adaptive LiDAR: End-to-end Optimization of Sampling and Depth Completion at Low Sampling Rates}},
      year={2020},
    }

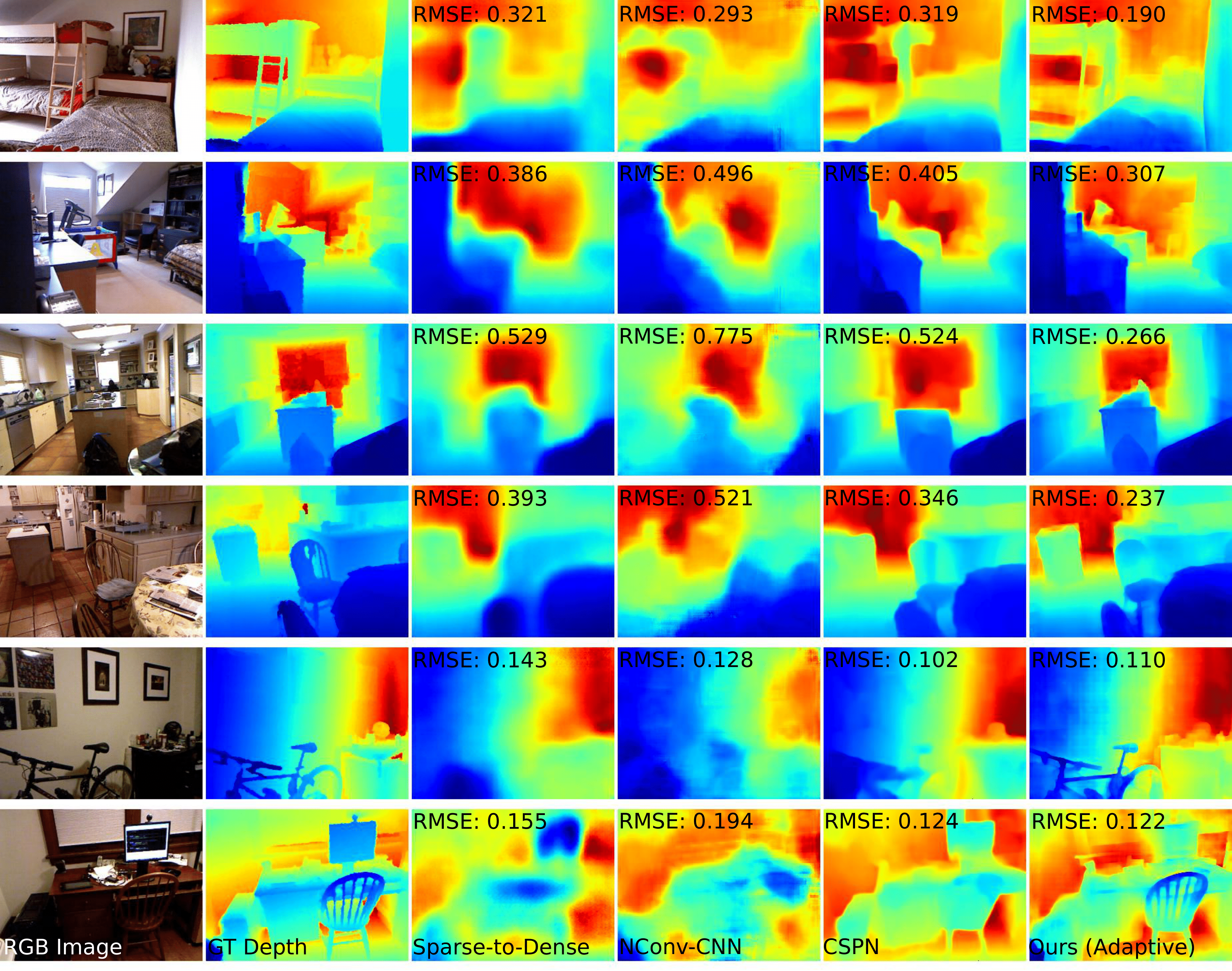

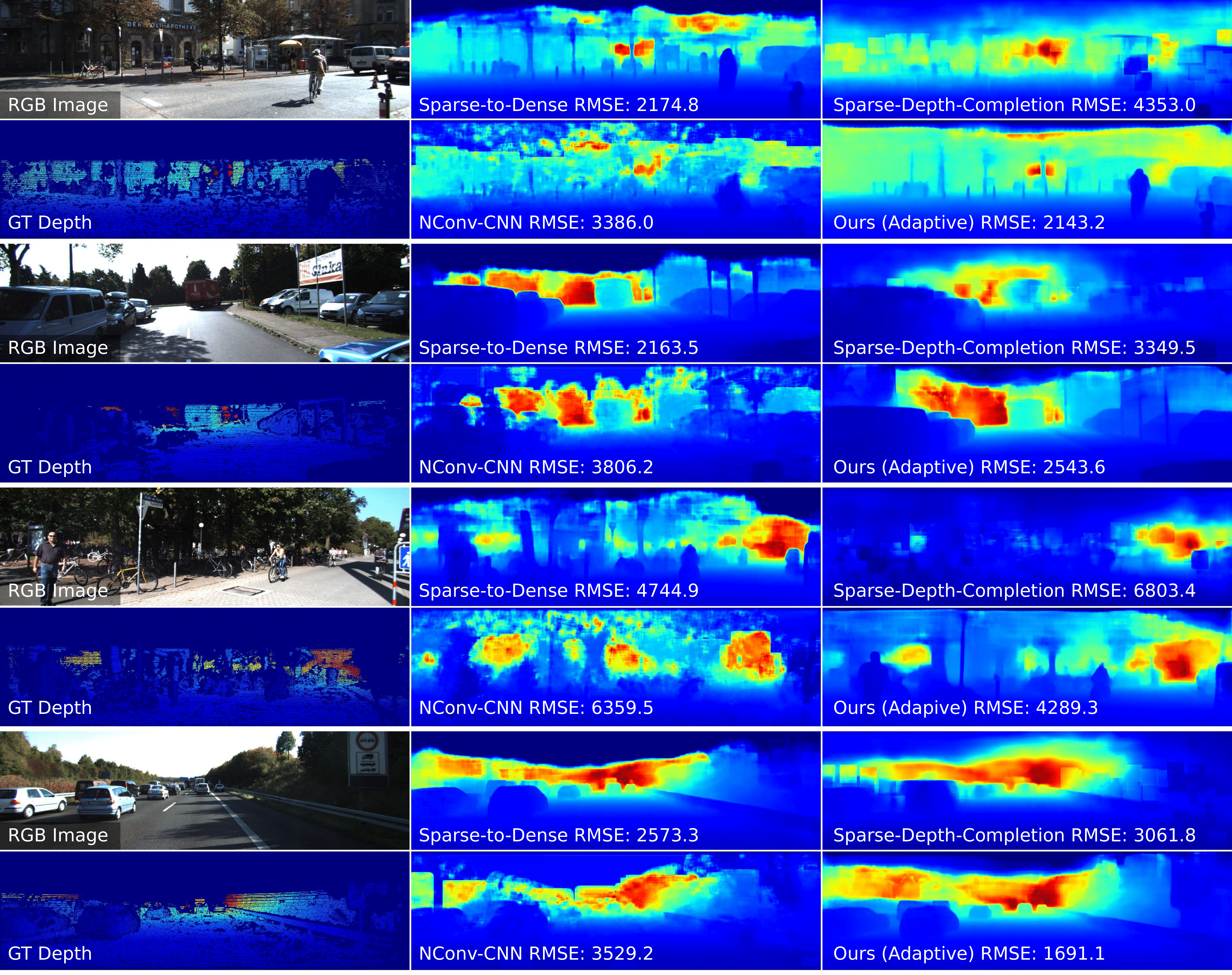

DEPTH COMPLETION RESULTS

Depth completion error with different methods for various datasets. Lowest errors are bolded. For the NYU-Depth-v2 dataset, methods are evaluated on the test set using an average of 50 samples per image, and RMSE is measured in meters. For the KITTI dataset, methods are evaluated on the validation set using an average of 156 samples per image, and RMSE is measured in millimeters.

| NYU-Depth-v2 Depth Completion Method | RMSE | MAE |
| --- | --- | --- |
| Sparse-to-Dense [1] | 0.311 | 0.191 |
| CSPN [2] | 0.258 | 0.143 |
| NConv-CNN [3] | 0.395 | 0.231 |
| Ours (Random Samples) | 0.274 | 0.177 |
| Ours (Adaptive Samples) | **0.233** | **0.138** |

| KITTI Depth Completion Method | RMSE | MAE |
| --- | --- | --- |
| Sparse-to-Dense (gd) [4] | 2060.9 | **728.4** |
| Sparse-Depth-Completion [5] | 3182.7 | 1287.3 |
| NConv-CNN-L2 [3] | 3521.9 | 1414.5 |
| Ours (Random Samples) | 2401.9 | 856.1 |
| Ours (Adaptive Samples) | **2048.0** | 757.1 |

[1] F. Ma and S. Karaman, “Sparse-to-dense: Depth prediction from sparse depth samples and a single image,” ICRA, 2018.

[2] X. Cheng, P. Wang, and R. Yang, “Depth estimation via affinity learned with convolutional spatial propagation network,” in ECCV, 2018.

[3] A. Eldesokey, M. Felsberg, and F. Khan, “Confidence propagation through CNNs for guided sparse depth regression,” IEEE PAMI, 2019.

[4] F. Ma, G. V. Cavalheiro, and S. Karaman, “Self-supervised sparse-to-dense: Self-supervised depth completion from LiDAR and monocular camera,” ICRA, 2019.

[5] W. Van Gansbeke, D. Neven, B. De Brabandere, and L. Van Gool, “Sparse and noisy LiDAR completion with RGB guidance and uncertainty,” in 16th International Conference on Machine Vision Applications (MVA), 2019.
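For readers who want to reproduce numbers of the kind reported in the tables above, the following is a minimal sketch of how RMSE and MAE are commonly computed for depth completion. It is not the authors' evaluation code. The function name `depth_errors`, the flat-list input format, and the convention that a ground-truth value of 0.0 marks a pixel without a measurement are all assumptions made for illustration; how errors are aggregated across images is not stated in the source and is left out.

```python
import math


def depth_errors(pred, target, invalid=0.0):
    """Return (rmse, mae) over pixels that have valid ground truth.

    pred, target: equal-length flat sequences of depths in the same unit
                  (e.g. meters for NYU-Depth-v2, millimeters for KITTI).
    invalid:      value marking missing ground truth (assumed to be 0.0).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")

    # Keep only the differences at pixels where ground truth exists.
    diffs = [p - t for p, t in zip(pred, target) if t != invalid]
    if not diffs:
        raise ValueError("no valid ground-truth pixels")

    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    mae = sum(abs(d) for d in diffs) / len(diffs)
    return rmse, mae


# Toy example in meters; the third pixel has no ground truth and is skipped.
pred = [1.0, 2.5, 3.0, 4.0]
target = [1.0, 2.0, 0.0, 5.0]
rmse, mae = depth_errors(pred, target)
print(f"RMSE = {rmse:.3f} m, MAE = {mae:.3f} m")
```

Because RMSE squares each difference before averaging, it penalizes large outliers more heavily than MAE, which is one reason a method can rank first on one metric and not the other, as in the KITTI table.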

RELATED PROJECTS

You may also be interested in related projects, where we have developed non-line-of-sight imaging systems:

- Metzler et al. 2021. Keyhole Imaging. IEEE Trans. Computational Imaging

- Lindell et al. 2020. Confocal Diffuse Tomography. Nature Communications

- Young et al. 2020. Non-line-of-sight Surface Reconstruction using the Directional Light-cone Transform. CVPR

- Lindell et al. 2019. Wave-based Non-line-of-sight Imaging using Fast f-k Migration. ACM SIGGRAPH

- Heide et al. 2019. Non-line-of-sight Imaging with Partial Occluders and Surface Normals. ACM Transactions on Graphics (presented at SIGGRAPH)

- Lindell et al. 2019. Acoustic Non-line-of-sight Imaging. CVPR

- O’Toole et al. 2018. Confocal Non-line-of-sight Imaging based on the Light-cone Transform. Nature

and direct line-of-sight or transient imaging systems:

- Bergman et al. 2020. Deep Adaptive LiDAR: End-to-end Optimization of Sampling and Depth Completion at Low Sampling Rates. ICCP

- Nishimura et al. 2020. 3D Imaging with an RGB camera and a single SPAD. ECCV

- Heide et al. 2019. Sub-picosecond photon-efficient 3D imaging using single-photon sensors. Scientific Reports

- Lindell et al. 2018. Single-Photon 3D Imaging with Deep Sensor Fusion. ACM SIGGRAPH

- O’Toole et al. 2017. Reconstructing Transient Images from Single-Photon Sensors. CVPR

ACKNOWLEDGEMENTS

A.W.B. and D.B.L. are supported by a Stanford Graduate Fellowship in Science and Engineering. This project was supported by a Terman Faculty Fellowship, a Sloan Fellowship, an NSF CAREER Award (IIS 1553333), the DARPA REVEAL program, the ARO (ECASE-Army Award W911NF19-1-0120), and by the KAUST Office of Sponsored Research through the Visual Computing Center CCF grant.