ABSTRACT

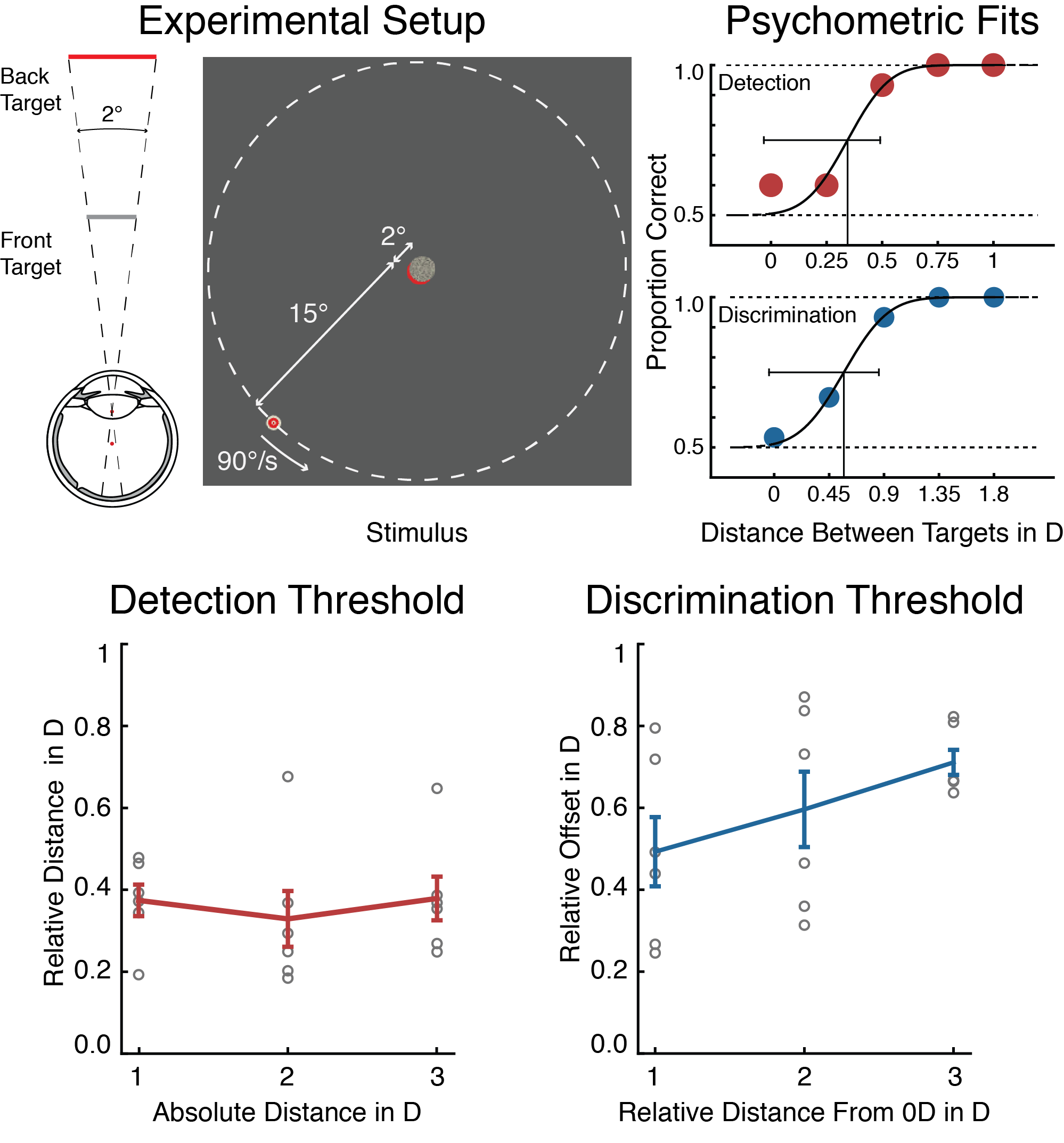

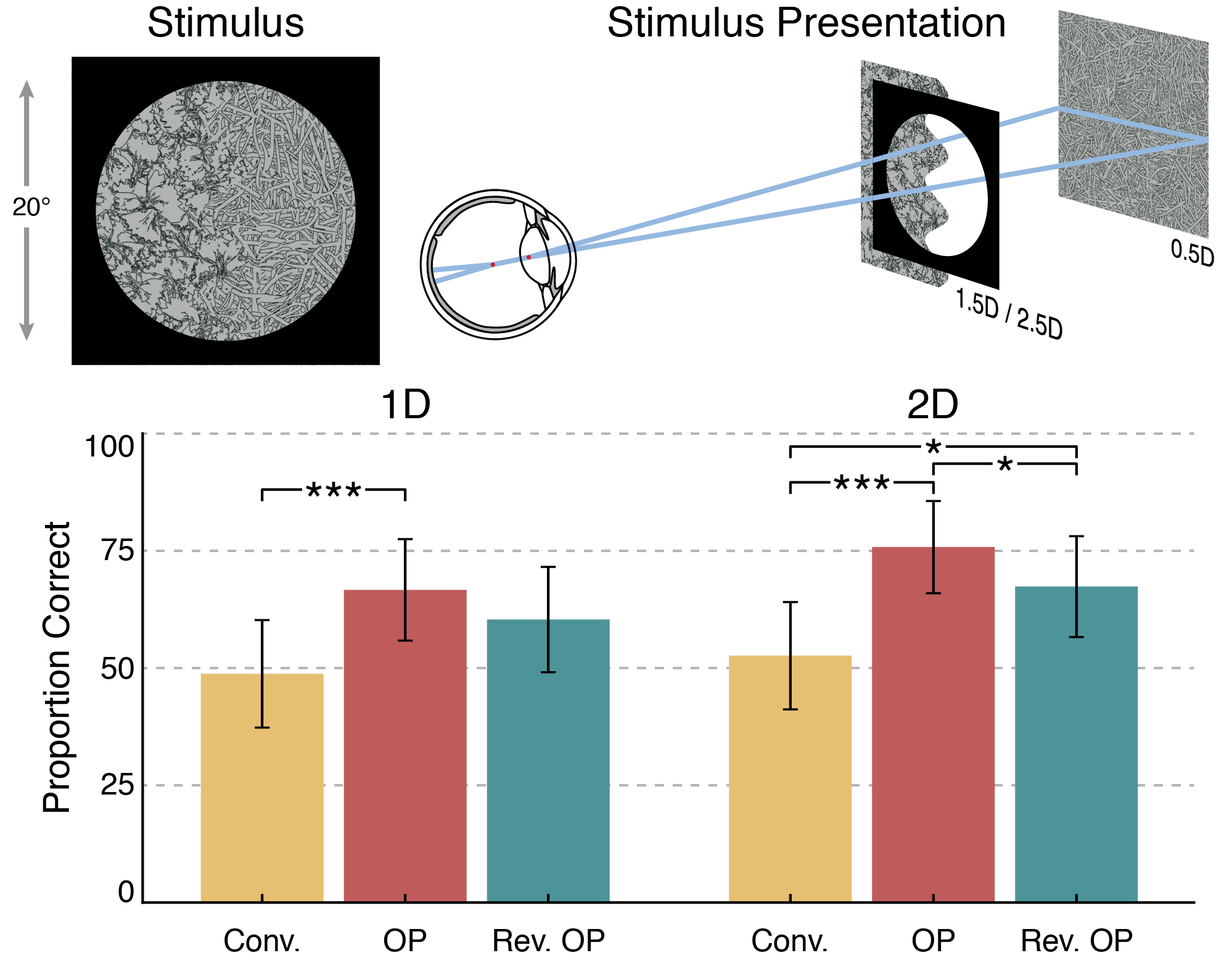

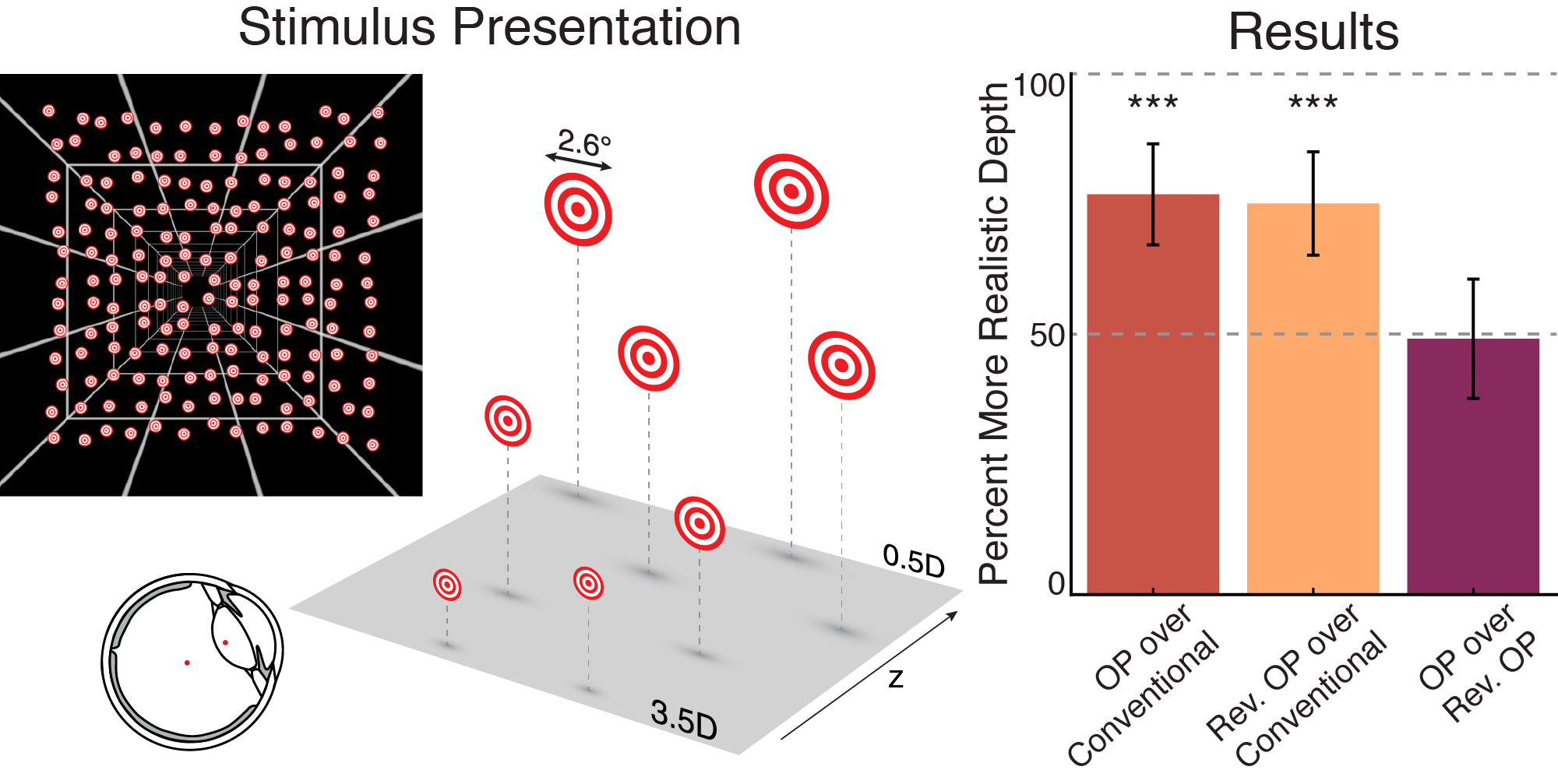

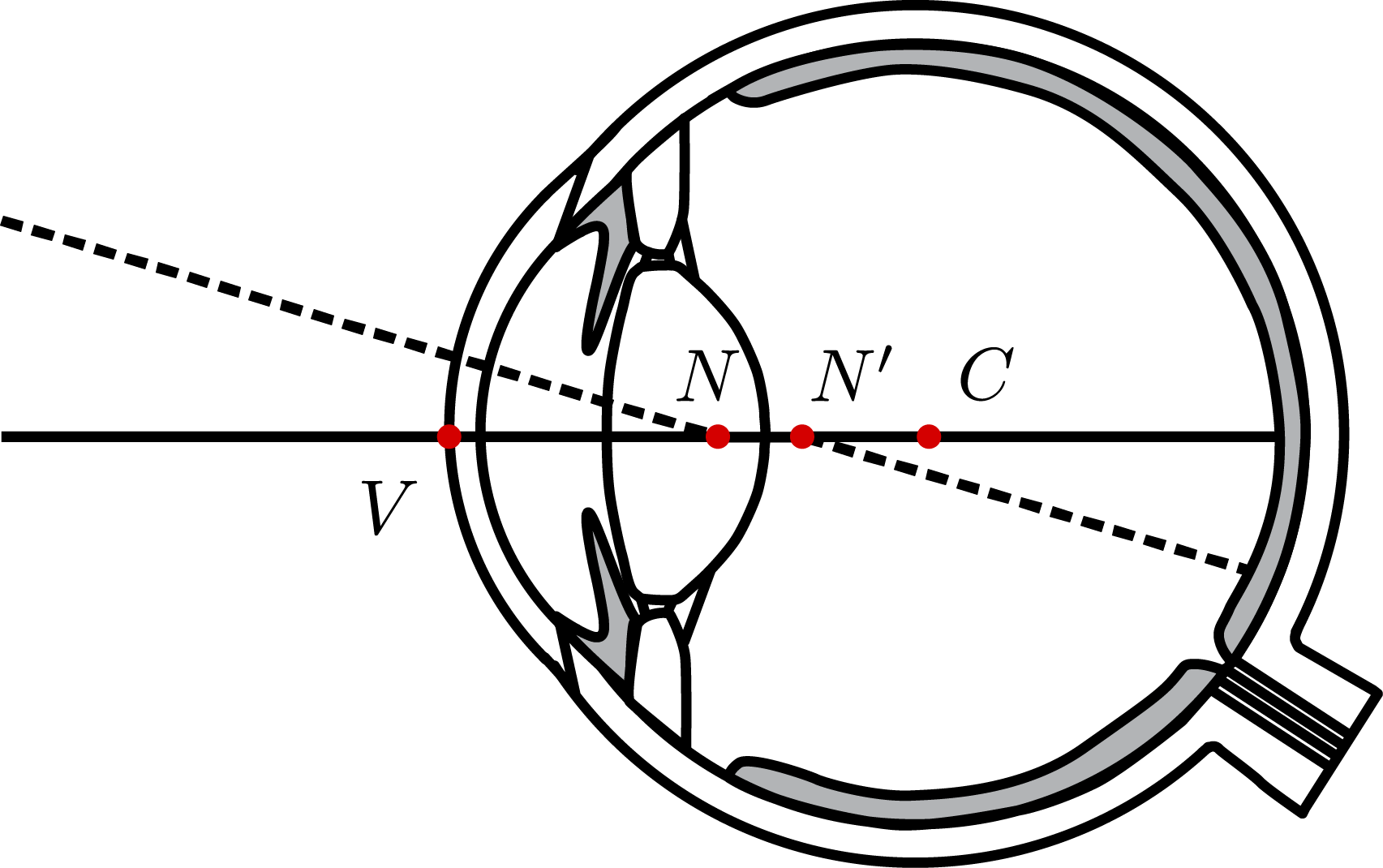

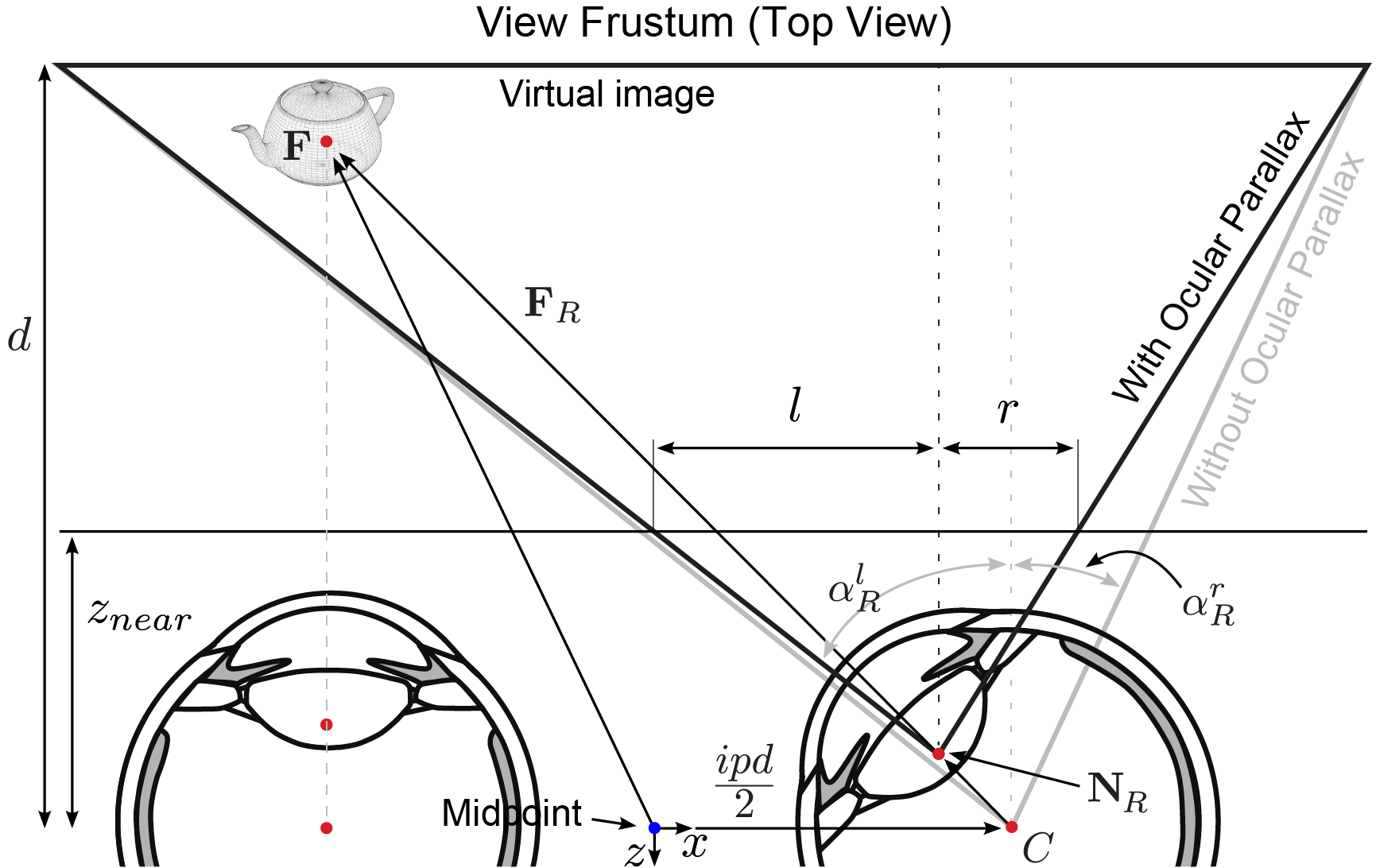

Immersive computer graphics systems strive to generate perceptually realistic user experiences. Current-generation virtual reality (VR) displays are successful in accurately rendering many perceptually important effects, including perspective, disparity, motion parallax, and other depth cues. In this paper, we introduce ocular parallax rendering, a technology that accurately renders small amounts of gaze-contingent parallax capable of improving depth perception and realism in VR. Ocular parallax describes the small, depth-dependent image shifts on the retina that are created as the eye rotates. The effect occurs because the centers of rotation and projection of the eye are not the same. We study the perceptual implications of ocular parallax rendering by designing and conducting a series of user experiments. Specifically, we estimate perceptual detection and discrimination thresholds for this effect and demonstrate that it is clearly visible in most VR applications. Additionally, we show that ocular parallax rendering provides an effective ordinal depth cue and that it improves the impression of realistic depth in VR.
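
The geometry behind the effect can be illustrated with a minimal top-down 2D sketch. It is an illustration, not the authors' renderer. It assumes the eye rotates horizontally about a center of rotation at the origin, that the center of projection (nodal point) sits a fixed distance in front of it, and that two points at different depths lie on the same line of sight before the rotation. The 7 mm offset, the function names, and the 2D simplification are all assumptions chosen for illustration.

```python
import math

# ASSUMPTION: distance (meters) between the eye's center of rotation and its
# center of projection. About 7 mm is a commonly quoted schematic-eye figure;
# it is used here only as an illustrative value.
NODAL_OFFSET_M = 0.007


def nodal_point(theta_rad: float, offset_m: float = NODAL_OFFSET_M) -> tuple[float, float]:
    """(x, z) position of the center of projection after a horizontal eye
    rotation by theta_rad about the center of rotation at the origin.
    At theta_rad == 0 the eye looks down the +z axis."""
    return (offset_m * math.sin(theta_rad), offset_m * math.cos(theta_rad))


def visual_direction(point: tuple[float, float], theta_rad: float,
                     offset_m: float = NODAL_OFFSET_M) -> float:
    """Angle (radians, measured from the +z axis) of the ray from the
    center of projection to the scene point (x, z)."""
    nx, nz = nodal_point(theta_rad, offset_m)
    return math.atan2(point[0] - nx, point[1] - nz)


def ocular_parallax_arcmin(near_z: float, far_z: float, theta_deg: float,
                           offset_m: float = NODAL_OFFSET_M) -> float:
    """Relative angular shift (arcminutes) between a near and a far point that
    both lie on the z axis, i.e. are aligned before the eye rotates.
    Both directions are measured in the same frame, so subtracting them
    removes the eye's own rotation and leaves only the parallax."""
    theta = math.radians(theta_deg)
    near_dir = visual_direction((0.0, near_z), theta, offset_m)
    far_dir = visual_direction((0.0, far_z), theta, offset_m)
    return math.degrees(near_dir - far_dir) * 60.0


if __name__ == "__main__":
    # Near point at 0.5 m, far point at 5 m, for several eye rotations.
    for angle in (0, 5, 15, 30):
        print(angle, round(ocular_parallax_arcmin(0.5, 5.0, angle), 2))
```

With a zero offset (centers of rotation and projection coincide) the shift vanishes for every rotation, which is the sense in which the offset causes the effect. Presumably, a gaze-contingent renderer applies the same idea by placing the virtual camera at the tracked nodal point rather than at the center of rotation.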

CITATION

R. Konrad, A. Angelopoulos, G. Wetzstein, “Gaze-Contingent Ocular Parallax Rendering for Virtual Reality”, in ACM Trans. Graph., 39 (2), 2020.

BibTeX

```bibtex
@article{Konrad:2019:OcularParallax,
  author  = {Konrad, Robert and Angelopoulos, Anastasios and Wetzstein, Gordon},
  title   = {Gaze-Contingent Ocular Parallax Rendering for Virtual Reality},
  journal = {ACM Trans. Graph.},
  volume  = {39},
  number  = {2},
  year    = {2020}
}
```