ABSTRACT

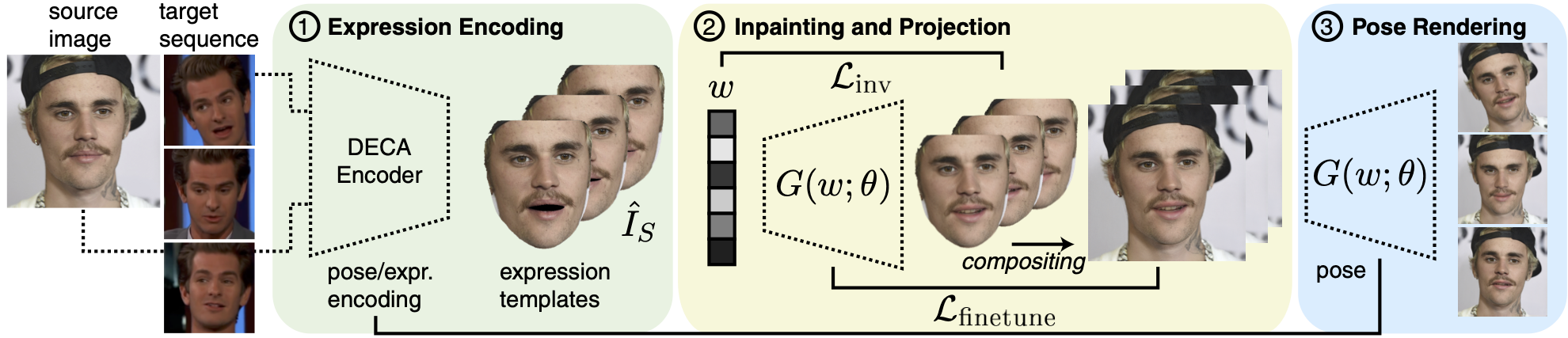

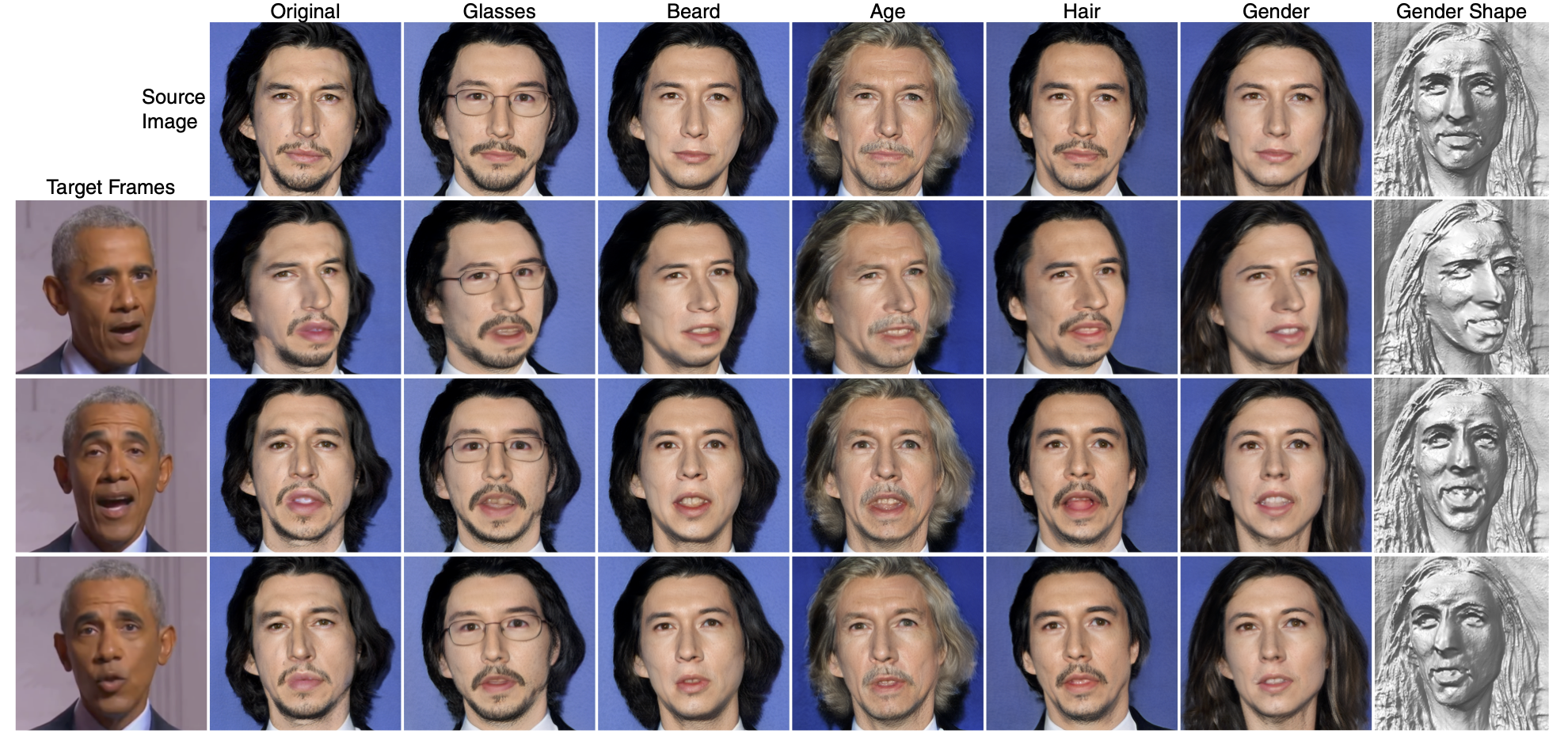

Millions of images of human faces are captured every day, but these photographs portray the likeness of an individual with a fixed pose, expression, and appearance. Portrait image animation enables the post-capture adjustment of these attributes from a single image while maintaining a photorealistic reconstruction of the subject’s likeness or identity. Still, current methods for portrait image animation are typically based on 2D warping operations or manipulations of a 2D generative adversarial network (GAN) and lack explicit mechanisms to enforce multi-view consistency. Thus, these methods may significantly alter the identity of the subject, especially when the viewpoint relative to the camera is changed. In this work, we leverage newly developed 3D GANs, which allow explicit control over the pose of the image subject with multi-view consistency. We propose a supervision strategy to flexibly manipulate expressions with 3D morphable models, and we show that the proposed method also supports editing appearance attributes, such as age or hairstyle, by interpolating within the latent space of the GAN. The proposed technique for portrait image animation outperforms previous methods in terms of image quality, identity preservation, and pose transfer while also supporting attribute editing.