ABSTRACT

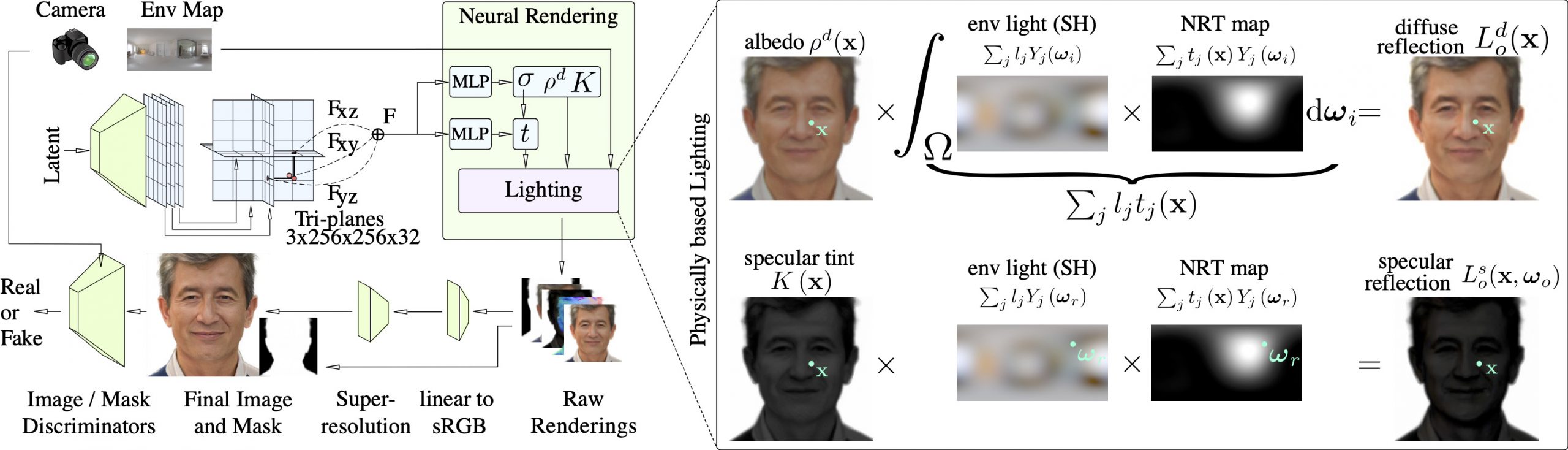

Unsupervised learning of 3D human faces from unstructured 2D image data is an active research area. While recent works have achieved an impressive level of photorealism, they commonly lack control of lighting, which prevents the generated assets from being deployed in novel environments. To this end, we introduce LumiGAN, an unconditional Generative Adversarial Network (GAN) for 3D human faces with a physically based lighting module that enables relighting under novel illumination at inference time. Unlike prior work, LumiGAN can create realistic shadow effects using an efficient visibility formulation that is learned in a self-supervised manner. LumiGAN generates plausible physical properties for relightable faces, including surface normals, diffuse albedo, and specular tint without any ground truth data. In addition to relightability, we demonstrate significantly improved geometry generation compared to state-of-the-art non-relightable 3D GANs and notably better photorealism than existing relightable GANs.