ABSTRACT

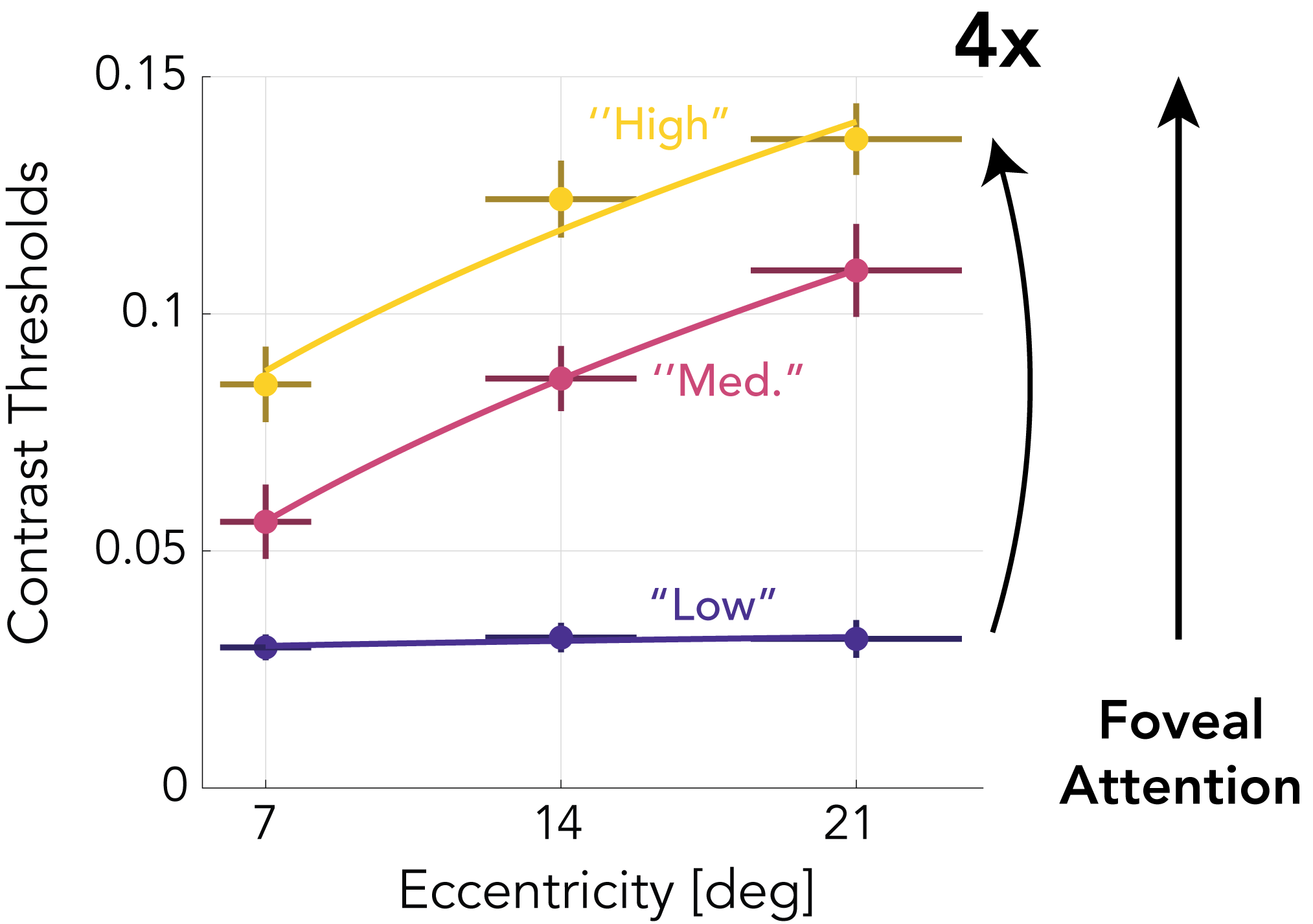

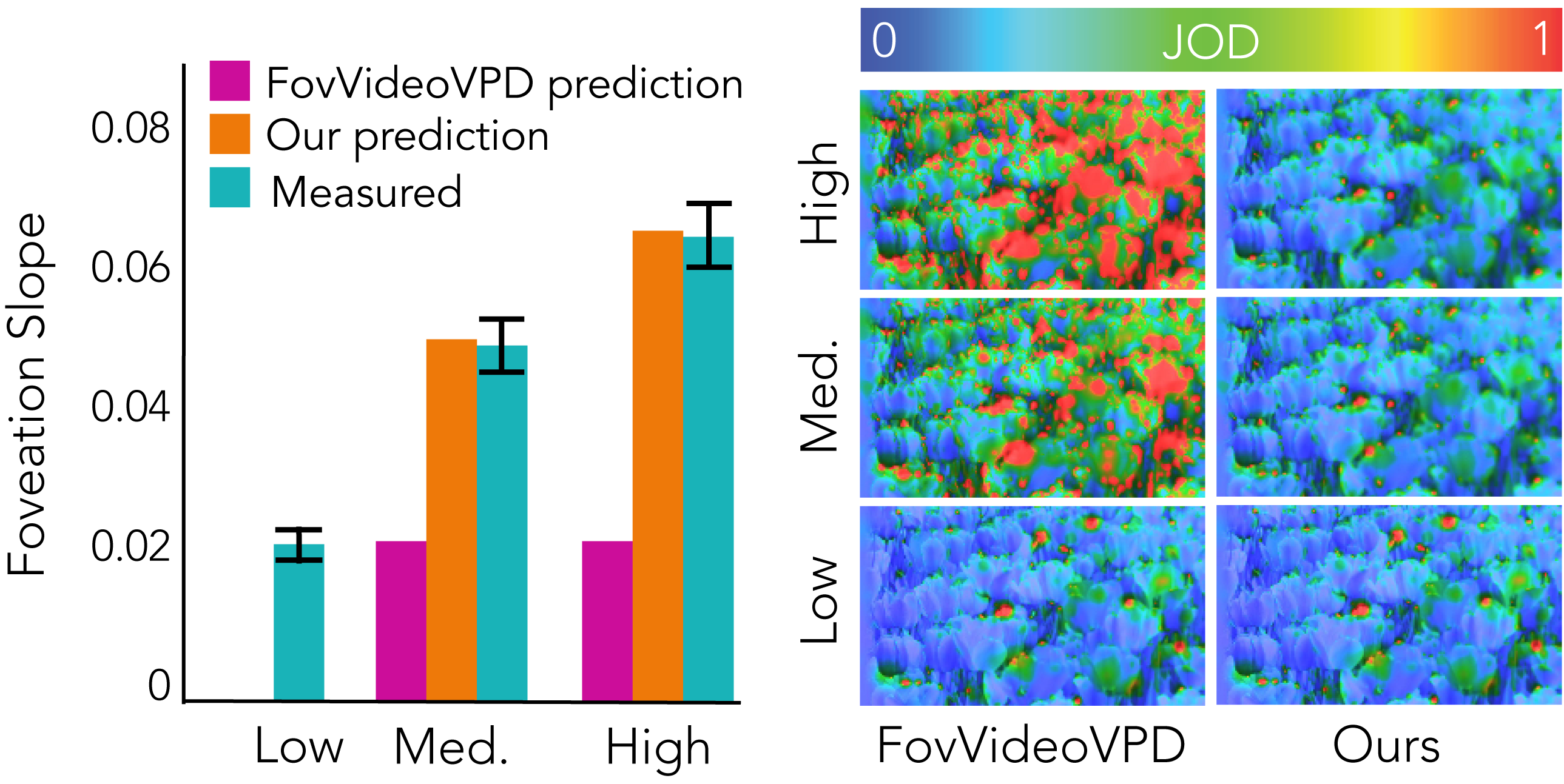

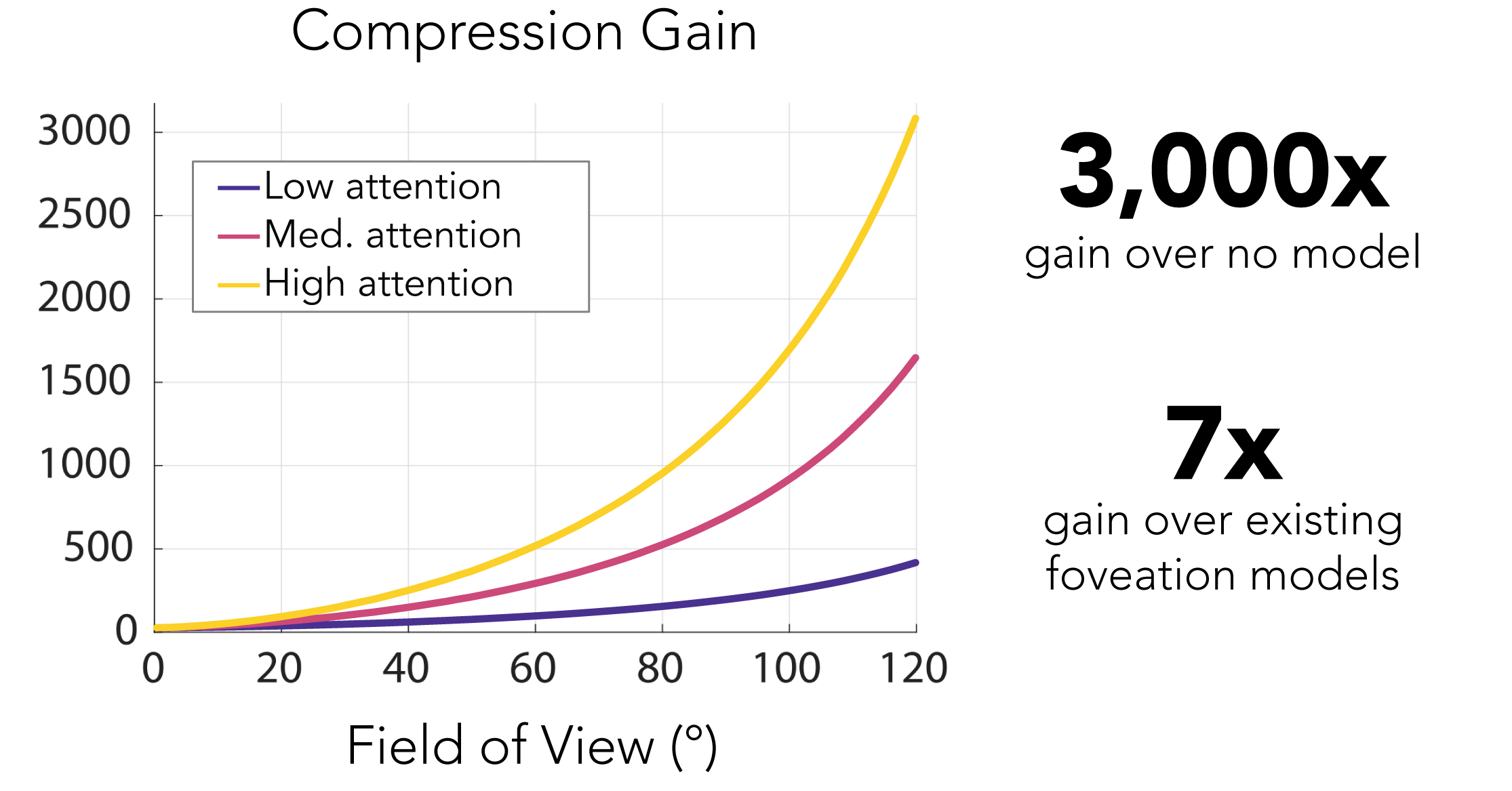

Foveated graphics is a promising approach to solving the bandwidth challenges of immersive virtual and augmented reality displays by exploiting the falloff in spatial acuity in the periphery of the visual field. However, the perceptual models used in these applications neglect the effects of higher-level cognitive processing, namely the allocation of visual attention, and thus overestimate sensitivity in the periphery in many scenarios. Here, we introduce the first attention-aware model of contrast sensitivity. We conduct user studies to measure contrast sensitivity under different attention distributions and show that sensitivity in the periphery drops significantly when the user is required to allocate attention to the fovea. We motivate the development of future foveation models with another user study and demonstrate that tolerance for foveation in the periphery is significantly higher when the user is concentrating on a task in the fovea. Analysis of our model predicts significant bandwidth savings over those afforded by current models. As such, our work forms the foundation for attention-aware foveated graphics techniques.

FILES

- Technical Paper (arXiv link)

CITATION

B. Krajancich, P. Kellnhofer, G. Wetzstein, “Towards Attention-aware Foveated Rendering”, in ACM Trans. Graph., 40 (4), 2023.

BibTeX

@article{krajancich2023attention,
  author = {Krajancich, Brooke and Kellnhofer, Petr and Wetzstein, Gordon},
  title = {Towards Attention-aware Foveated Rendering},
  journal = {ACM Trans. Graph.},
  volume = {40},
  issue = {4},
  year = {2023}
}