ABSTRACT

Typical camera optics consist of a system of individual elements that are designed to compensate for the aberrations of a single lens. Recent computational cameras shift some of this correction task from the optics to post-capture processing, reducing the imaging optics to only a few optical elements. However, these systems only achieve reasonable image quality by limiting the field of view (FOV) to a few degrees, effectively ignoring severe off-axis aberrations with blur sizes of several hundred pixels.

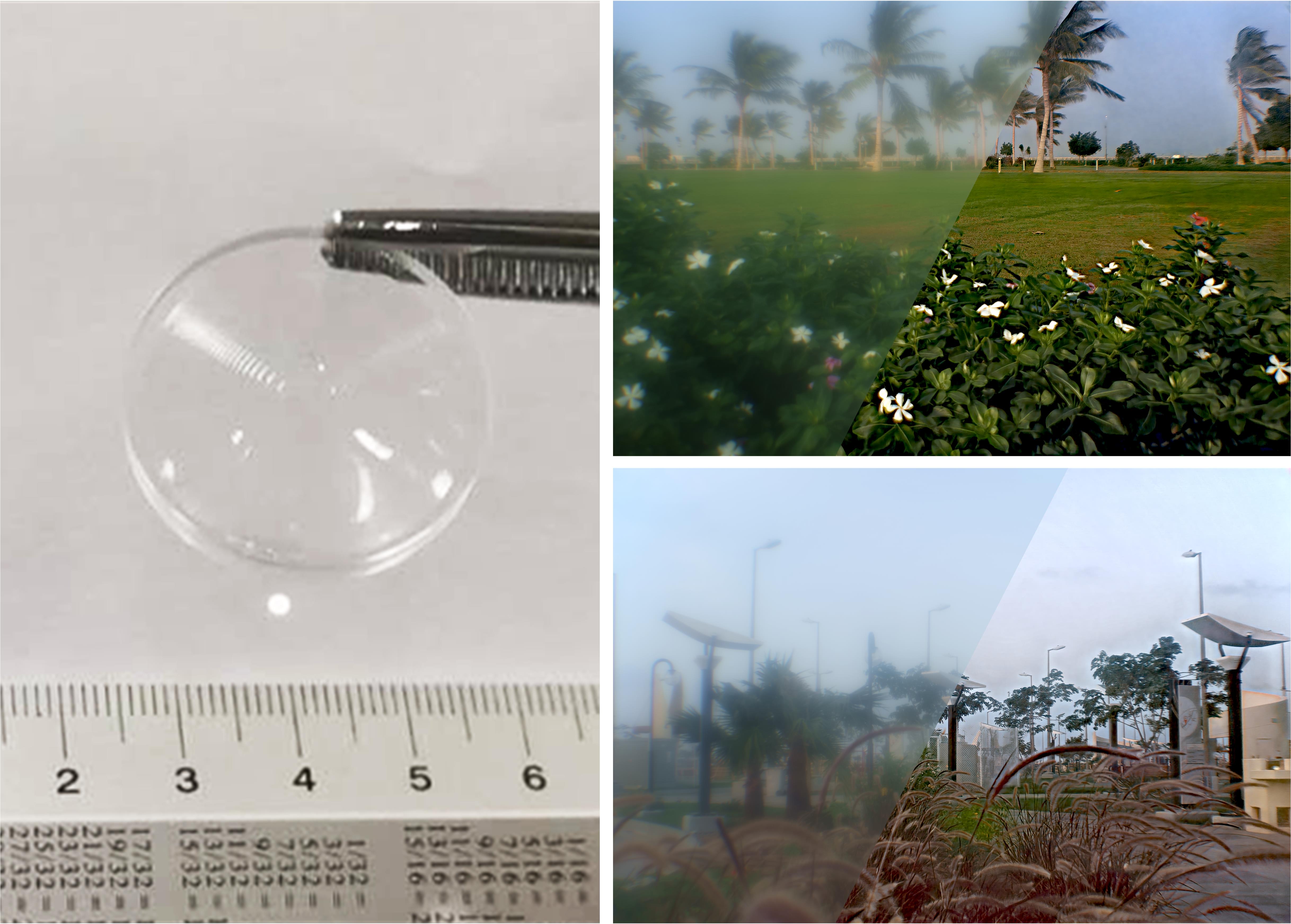

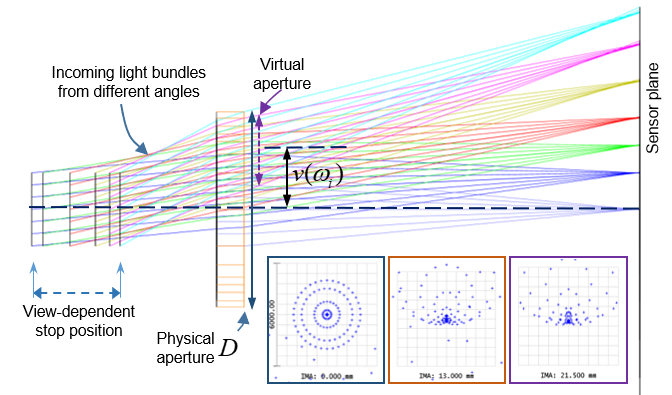

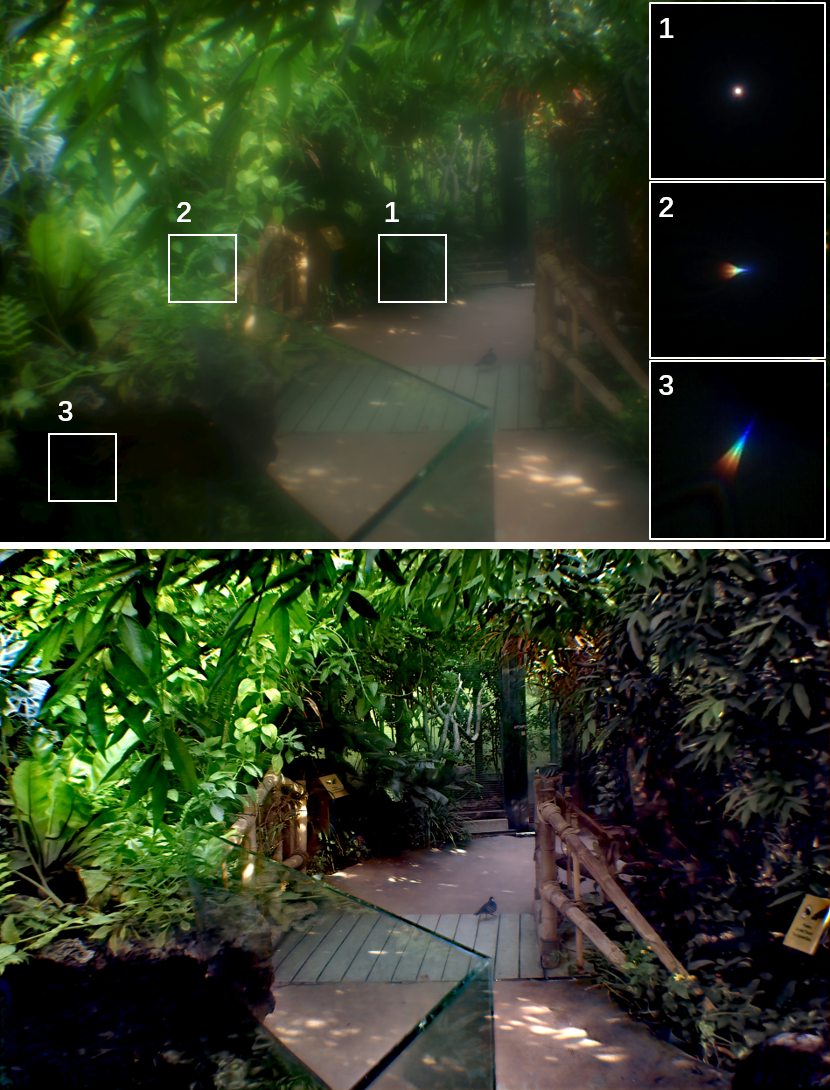

In this paper, we propose a lens design and learned reconstruction architecture that lift this limitation and provide an order of magnitude increase in field of view using only a single thin-plate lens element. Specifically, we design a lens to produce spatially shift-invariant point spread functions (PSFs), over the full FOV, that are tailored to the proposed reconstruction architecture. We achieve this with a mixture PSF, consisting of a peak and a low-pass component, which provides residual contrast instead of a small spot size as in traditional lens designs. To perform the reconstruction, we train a deep network on captured data from a display lab setup, eliminating the need for manual acquisition of training data in the field. We assess the proposed method in simulation and experimentally with a prototype camera system. We compare our system against existing single-element designs, including an aspherical lens and a pinhole, and we compare against a complex multi-element lens, validating high-quality large field-of-view (i.e., 53°) imaging performance using only a single thin-plate element.
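To illustrate why a mixture PSF retains residual contrast, the following is a minimal 1-D sketch and not the lens design or PSF used in this work. It assumes a toy PSF made of a one-sample peak plus a broad Gaussian; the function names `mixture_psf` and `mtf` and the parameters `peak_weight` and `sigma` are hypothetical and chosen only for illustration. Because the Fourier transform of an isolated peak is flat, the modulation transfer function (MTF) of the mixture settles near `peak_weight` at high frequencies, whereas the MTF of the purely low-pass PSF falls to nearly zero.

```python
import cmath
import math


def mixture_psf(n=257, peak_weight=0.3, sigma=30.0):
    """Toy 1-D mixture PSF: a one-sample peak plus a broad Gaussian.

    All parameters are illustrative assumptions, not values from the paper.
    The returned PSF sums to 1.
    """
    c = n // 2
    gauss = [math.exp(-0.5 * ((i - c) / sigma) ** 2) for i in range(n)]
    g_sum = sum(gauss)
    # Low-pass component carries (1 - peak_weight) of the energy.
    psf = [(1.0 - peak_weight) * g / g_sum for g in gauss]
    # Peak component carries the remaining energy in a single sample.
    psf[c] += peak_weight
    return psf


def mtf(psf, k):
    """Magnitude of the discrete Fourier transform of the PSF at frequency index k."""
    n = len(psf)
    return abs(sum(p * cmath.exp(-2j * math.pi * k * i / n)
                   for i, p in enumerate(psf)))


if __name__ == "__main__":
    mixed = mixture_psf(peak_weight=0.3)
    blur_only = mixture_psf(peak_weight=0.0)
    for k in (0, 5, 20, 60, 120):
        print(f"k={k:3d}  mixture MTF={mtf(mixed, k):.4f}  "
              f"low-pass-only MTF={mtf(blur_only, k):.4f}")
```

In this toy model, the contrast floor is what a reconstruction method could in principle build on: high-frequency image content is attenuated but not eliminated, unlike with the low-pass-only PSF.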