ABSTRACT

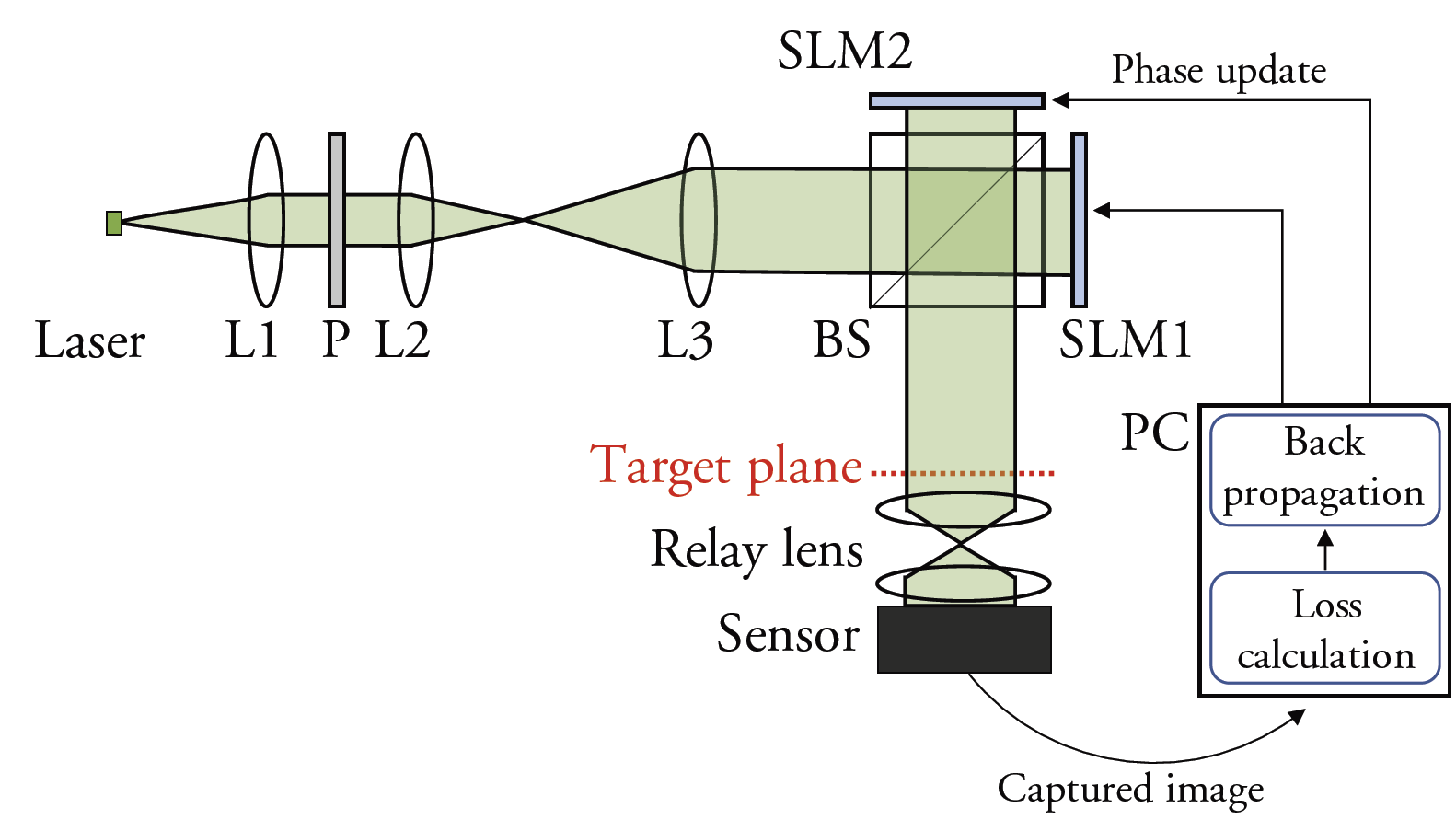

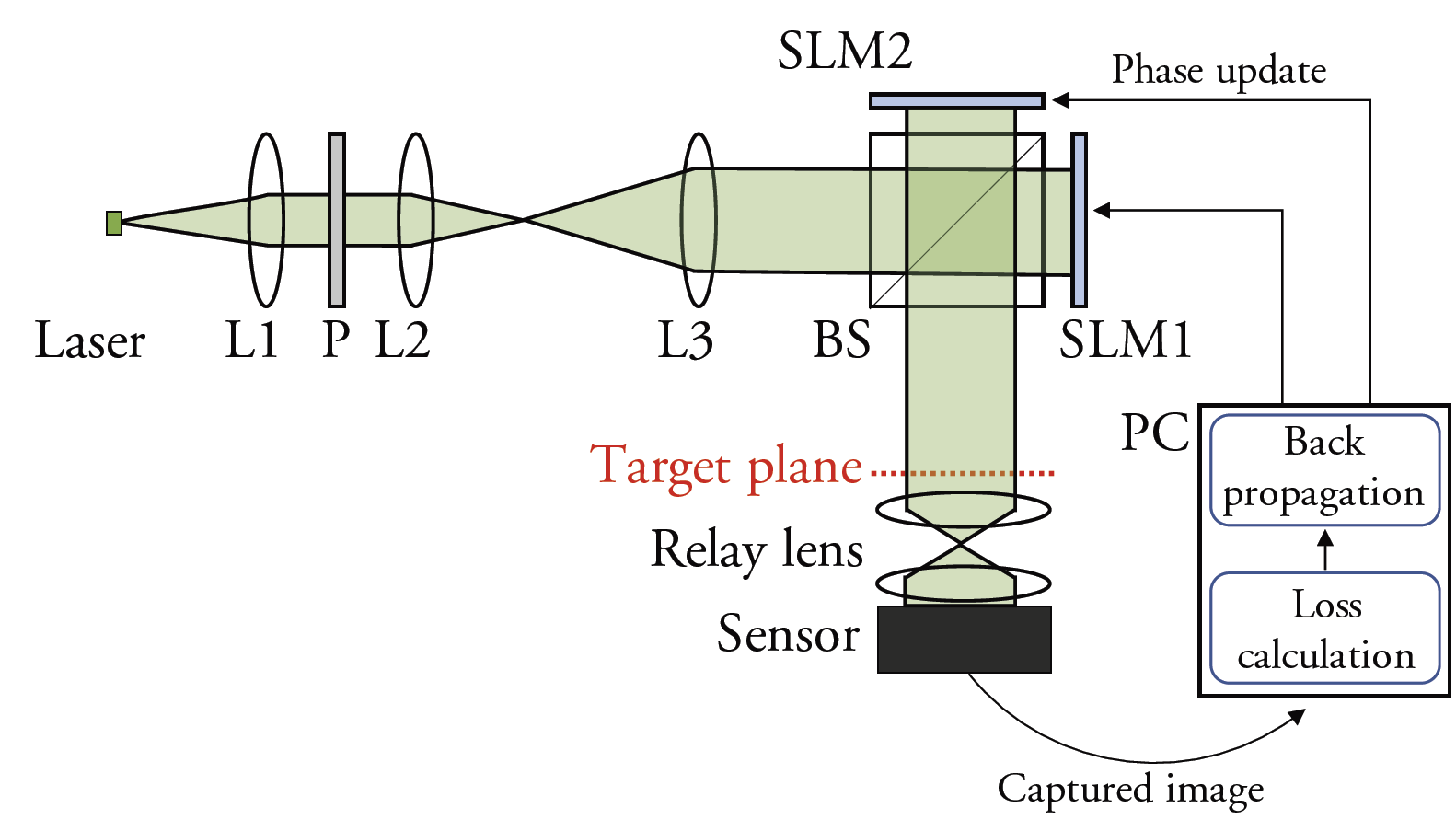

We introduce Michelson holography (MH), a holographic display technology that optimizes image quality for emerging holographic near-eye displays. Using two spatial light modulators (SLMs), MH is capable of leveraging destructive interference to optically cancel out undiffracted light corrupting the observed image. We calibrate this system using emerging camera-in-the-loop holography techniques and demonstrate state-of-the-art 2D and multi-plane holographic image quality.
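As a rough illustration of the destructive interference mentioned above, the following toy sketch superposes two coherent scalar fields and compares the resulting intensity when they are out of phase versus in phase. It is only a scalar model under assumed values, not the paper's optimization or calibration procedure: the names `combined_intensity`, `undiffracted_a`, `cancelling_b`, and `in_phase_b`, and the unit amplitudes, are hypothetical.

```python
import cmath
import math


def combined_intensity(field_a: complex, field_b: complex) -> float:
    """Intensity of two coherently superposed scalar fields."""
    return abs(field_a + field_b) ** 2


# Assumed toy value: undiffracted light from one SLM arm,
# modeled as a unit-amplitude field with zero phase.
undiffracted_a = cmath.rect(1.0, 0.0)

# Assumed contribution from the second arm: equal amplitude,
# shifted by pi (destructive) or by zero (constructive).
cancelling_b = cmath.rect(1.0, math.pi)
in_phase_b = cmath.rect(1.0, 0.0)

residual = combined_intensity(undiffracted_a, cancelling_b)
reinforced = combined_intensity(undiffracted_a, in_phase_b)

print(f"out of phase: {residual:.3e}")
print(f"in phase:     {reinforced:.3f}")
```

In this model the out-of-phase pair leaves an intensity that is zero up to floating-point rounding, while the in-phase pair gives four times the single-field intensity. A real system has to find suitable phase patterns on both SLMs across the whole image, which is where the camera-in-the-loop calibration described in the abstract comes in.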

FILES

- Technical Paper and Supplement (link)

CITATION

S. Choi, J. Kim, Y. Peng, G. Wetzstein, Optimizing image quality for holographic near-eye displays with Michelson Holography, OSA Optica, 2021.

BibTeX

@article{Choi:2021:MichelsonHolography,
  author = {S. Choi and J. Kim and Y. Peng and G. Wetzstein},
  title = {{Optimizing Image Quality for Holographic Near-eye Displays with Michelson Holography}},
  journal = {OSA Optica},
  year = {2021},
}

Figure: Holographic display setup schematic. Our display uses a fiber-coupled RGB laser module, collimating optics, two liquid crystal on silicon (LCoS) spatial light modulators, and a machine vision camera.

PRESS COVERAGE

- OSA Optica News (link)

- Communications of the ACM TechNews (link)

- Physics.org News (link)

- Sci Tech Daily (link)

- Photonics Media (link)

- Brinkwire Technology (link)

- EurekAlert News (link)

- Knowledia News (link)