ADDITIONAL MATERIAL

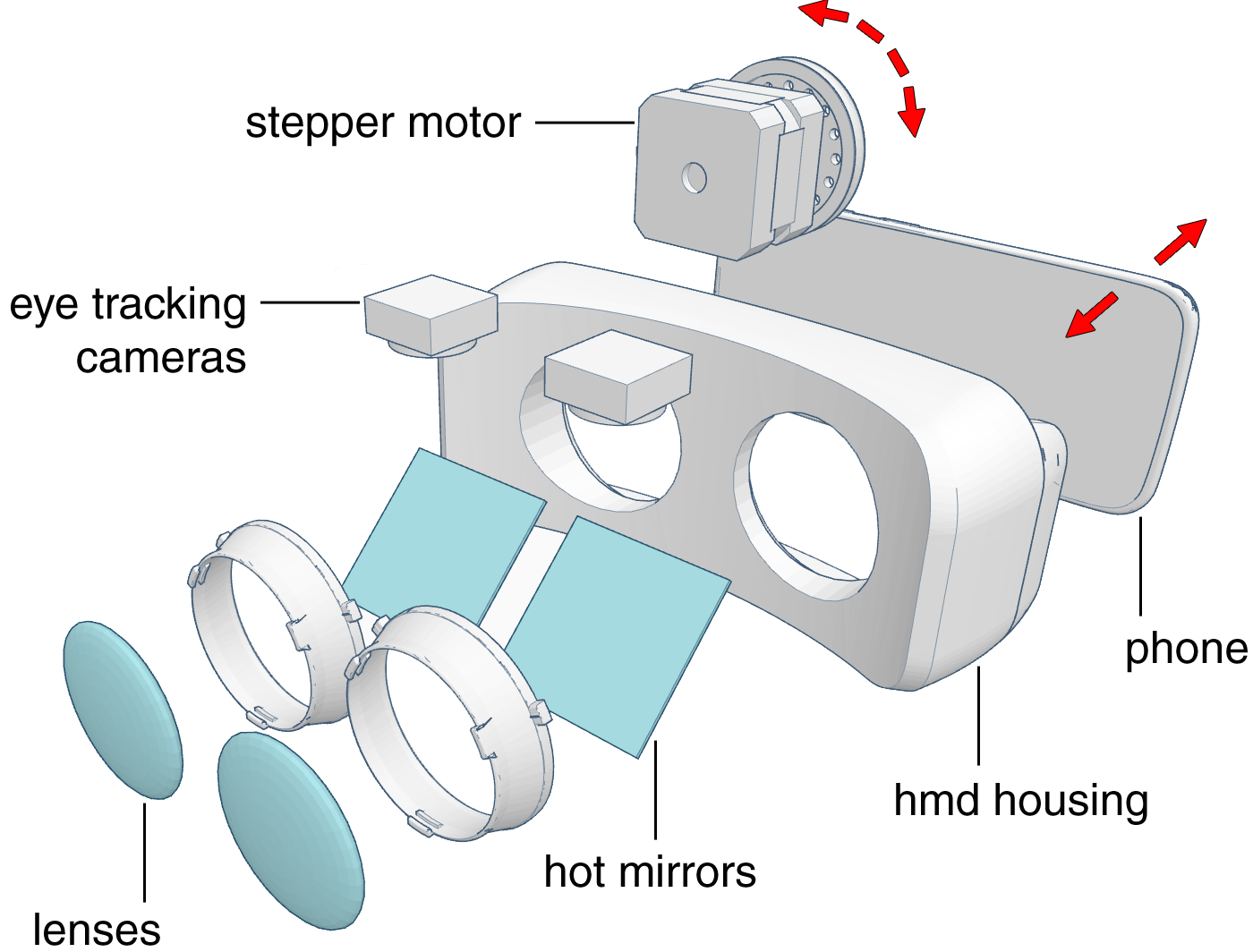

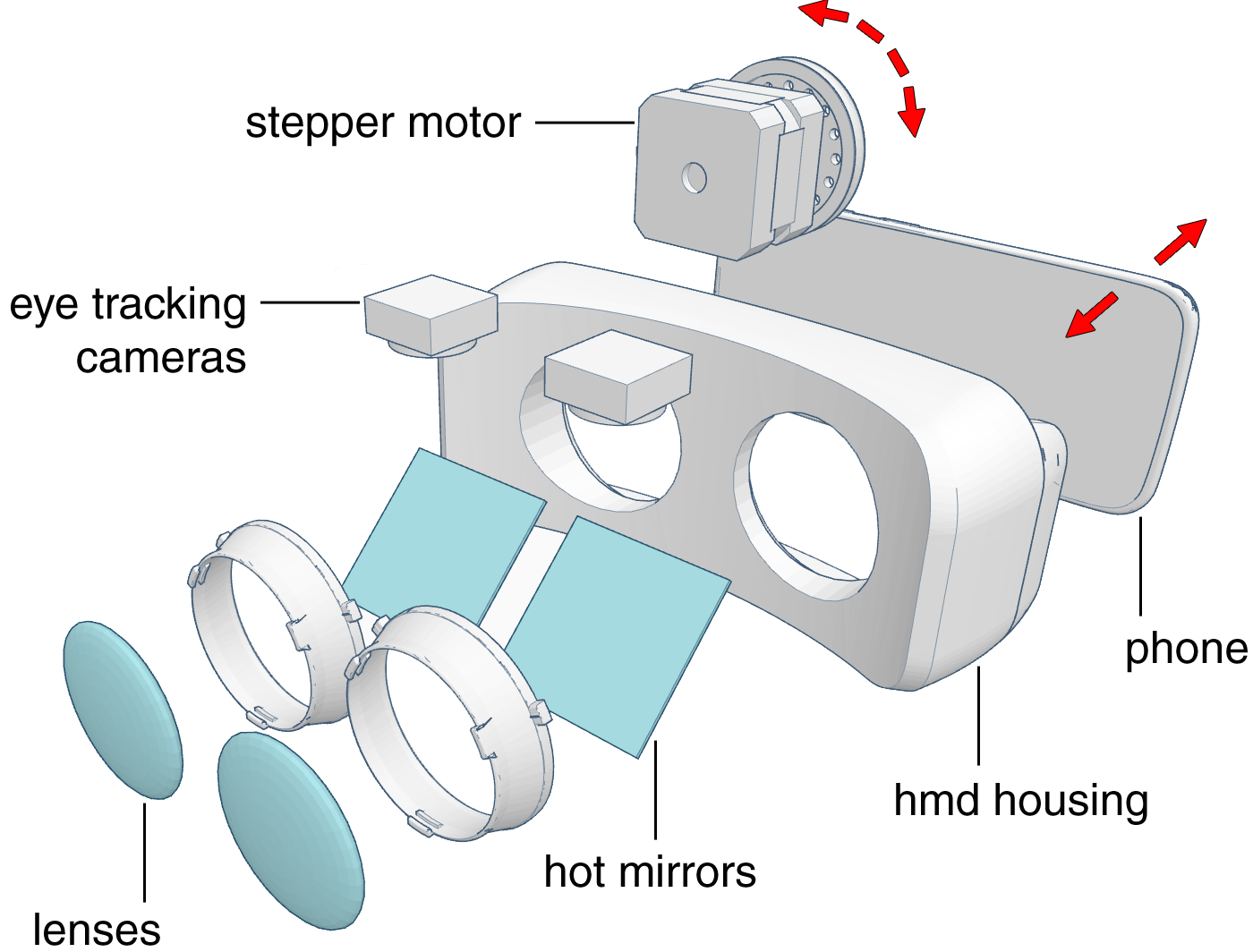

A schematic showing the basic components of a wearable gaze-contingent prototype. The stepper motor mounted on top rotates according to the gaze data reported by the integrated stereoscopic eye tracker and software, moving the phone back and forth and thereby dynamically adjusting the focal plane of the display (red arrows).

Photographs of our wearable gaze-contingent display prototype. A conventional near-eye display (Samsung Gear VR) is augmented by a stereoscopic gaze tracker and a motor that is capable of adjusting the physical distance between screen and lenses.
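
The text does not specify how the software turns eye-tracker output into a motor command. One common approach, assumed here purely for illustration, is to triangulate fixation depth from the horizontal gaze angles of the two eyes (vergence) and clamp the result to the display's focal range. The 64 mm interpupillary distance and all function names are hypothetical. The 0 to 4 D range comes from the sweep quoted for this prototype. Converting diopters into stepper-motor steps is device-specific and is not shown.

```python
import math

# Hypothetical interpupillary distance; the prototype's calibration is not given in the text.
DEFAULT_IPD_M = 0.064
# Focal-plane range from the quoted sweep: 0 D (optical infinity) to 4 D (25 cm).
MIN_DIOPTERS, MAX_DIOPTERS = 0.0, 4.0


def fixation_distance_m(left_yaw_rad, right_yaw_rad, ipd_m=DEFAULT_IPD_M):
    """Triangulate fixation depth (along the viewing axis) from both eyes' yaw angles.

    Eyes sit at x = -ipd/2 (left) and x = +ipd/2 (right) and look along +z.
    Yaw is measured from straight ahead and is positive toward +x (the user's right),
    so converging eyes have left_yaw > 0 and right_yaw < 0.
    """
    denom = math.tan(left_yaw_rad) - math.tan(right_yaw_rad)
    if denom <= 0.0:
        # Parallel or diverging gaze: treat the fixation as optical infinity.
        return math.inf
    return ipd_m / denom


def target_focal_diopters(left_yaw_rad, right_yaw_rad, ipd_m=DEFAULT_IPD_M):
    """Convert fixation depth to diopters and clamp it to the display's focal range."""
    distance = fixation_distance_m(left_yaw_rad, right_yaw_rad, ipd_m)
    diopters = 0.0 if math.isinf(distance) else 1.0 / distance
    return min(max(diopters, MIN_DIOPTERS), MAX_DIOPTERS)


if __name__ == "__main__":
    # Both eyes fixating a point 0.5 m straight ahead.
    half_angle = math.atan((DEFAULT_IPD_M / 2) / 0.5)
    print(target_focal_diopters(half_angle, -half_angle))  # about 2.0 D
```

The function returns depth along the viewing axis, not Euclidean distance. That choice fits a display whose virtual image is a plane. A real system would probably also need to filter the noisy tracker output before driving the motor.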

Only a few mm of physical display displacement results in a large change in the distance of the perceived virtual image. Overall system latency, including rendering, data transmission, and motor adjustment, is approximately 280 ms for a sweep from 4 D (25 cm) to 0 D (optical infinity) and 160 ms for a sweep from 3 D (33 cm) to 1 D (1 m). This latency is on the same order as the response time of the human accommodative system, which is a few hundred milliseconds.
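
To make the "few mm" claim concrete, here is a minimal sketch under a thin-lens magnifier assumption: 1/d = 1/d' - 1/f, with distances in meters and diopters equal to 1/m. The 40 mm focal length is a hypothetical value chosen for illustration and is not the Gear VR lens specification. The function names are also ours.

```python
# Thin-lens magnifier model (an assumption, not the prototype's measured optics):
# a display at distance d_prime inside the focal length f forms a virtual image
# whose optical distance in diopters is 1/d = 1/d_prime - 1/f (distances in meters).

HYPOTHETICAL_FOCAL_LENGTH_M = 0.040  # illustrative value, not from the source


def display_distance_m(image_diopters, focal_length_m=HYPOTHETICAL_FOCAL_LENGTH_M):
    """Lens-to-display distance that places the virtual image at image_diopters.

    0 D means optical infinity, which puts the display exactly at the focal length.
    """
    return 1.0 / (image_diopters + 1.0 / focal_length_m)


def display_travel_mm(from_diopters, to_diopters, focal_length_m=HYPOTHETICAL_FOCAL_LENGTH_M):
    """Physical display displacement (mm) needed to sweep the image between two distances."""
    start = display_distance_m(from_diopters, focal_length_m)
    end = display_distance_m(to_diopters, focal_length_m)
    return abs(start - end) * 1000.0


if __name__ == "__main__":
    # The two sweeps quoted above: 4 D -> 0 D and 3 D -> 1 D.
    print(f"4 D -> 0 D: {display_travel_mm(4.0, 0.0):.2f} mm")  # 5.52 mm
    print(f"3 D -> 1 D: {display_travel_mm(3.0, 1.0):.2f} mm")  # 2.75 mm
```

With this hypothetical lens, the 4 D to 0 D sweep needs about 5.5 mm of travel and the 3 D to 1 D sweep needs about 2.7 mm. A different focal length or eye relief would shift these numbers.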

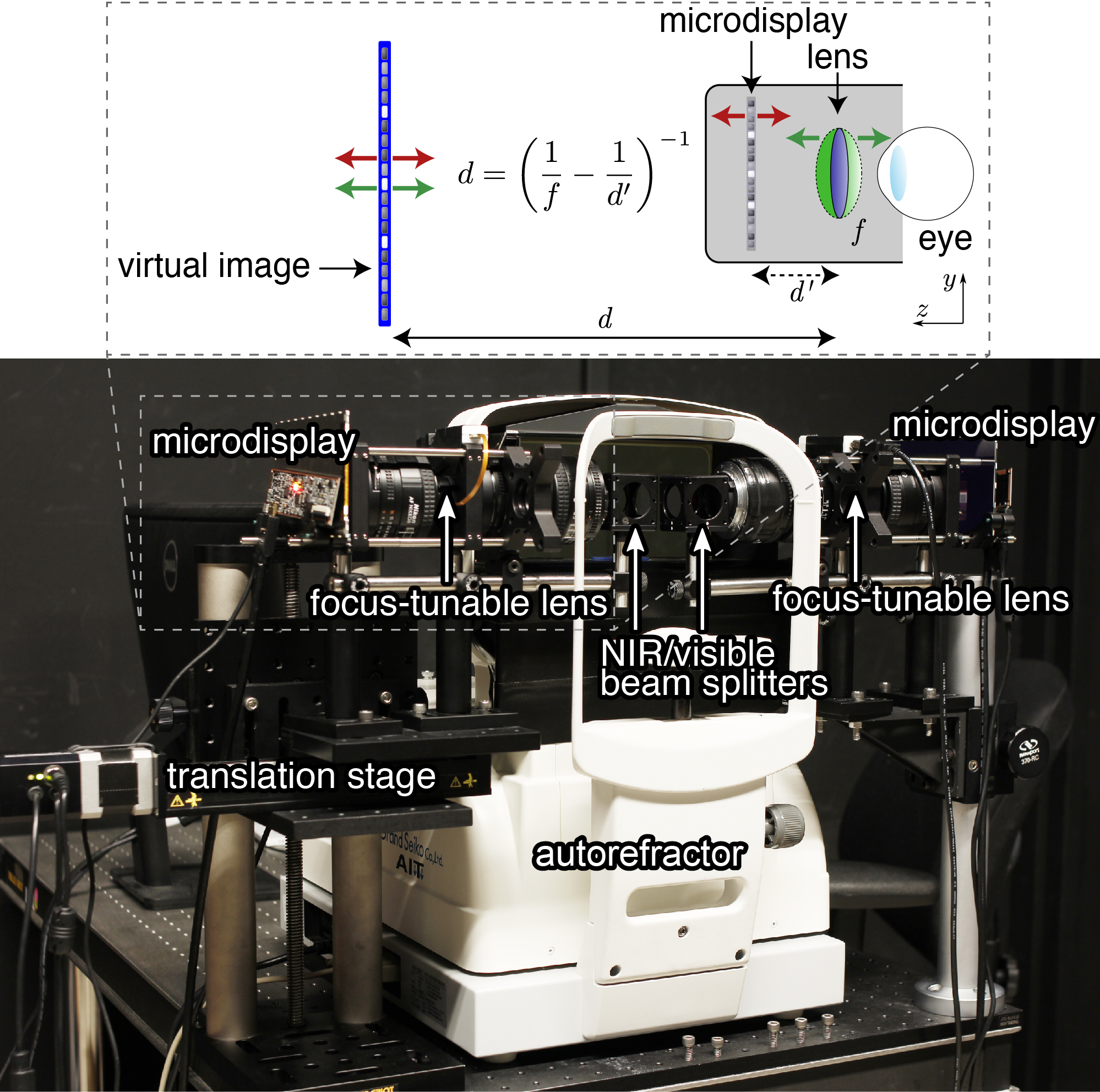

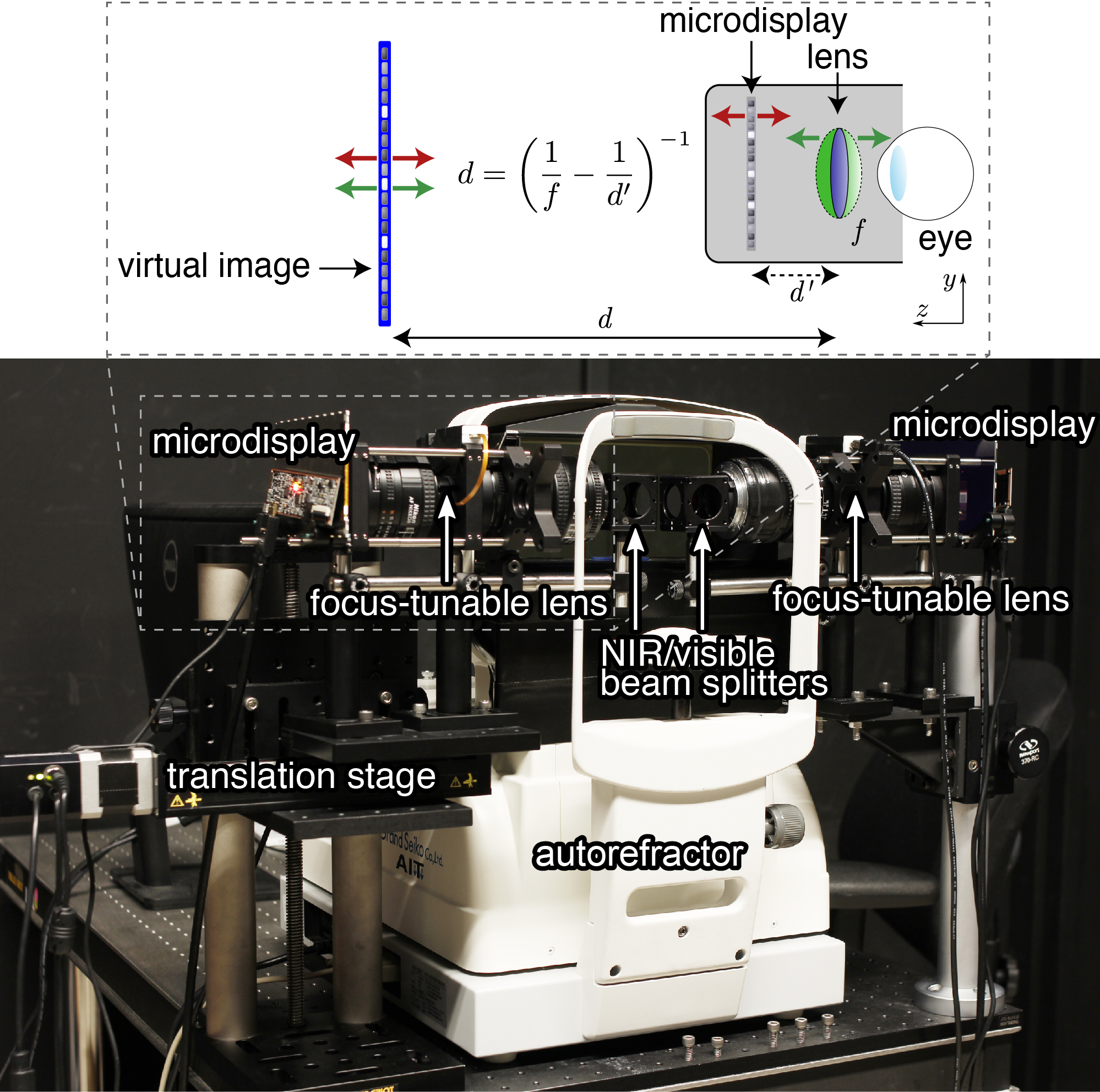

(top) A typical near-eye display uses a fixed-focus lens to show a magnified virtual image of a microdisplay to each eye. The focal length of the lens, f, and the distance from the lens to the microdisplay, d’, determine the distance of the virtual image, d. Adaptive focus can be implemented using either a focus-tunable lens (green arrows) or a fixed-focus lens and a mechanically actuated display (red arrows), so that the virtual image can be moved to different distances. (bottom) A benchtop setup designed to incorporate adaptive focus via focus-tunable lenses and an autorefractor to record accommodation. A translation stage adjusts the interpupillary distance, and NIR/visible light beam splitters allow for simultaneous stimulus presentation and accommodation measurement.
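
Under a thin-lens approximation, the quantities in the caption are related by the Gaussian lens equation, with the virtual image on the same side of the lens as the display. This is an assumption: real headset optics are thicker, and the eye sits some distance behind the lens.

$$
\frac{1}{d'} - \frac{1}{d} = \frac{1}{f}
\quad\Longrightarrow\quad
d = \frac{f\,d'}{f - d'}, \qquad 0 < d' < f .
$$

In diopters, the image vergence 1/d = 1/d' - 1/f changes with display position at the rate

$$
\left|\frac{\partial (1/d)}{\partial d'}\right| = \frac{1}{(d')^{2}} \approx \frac{1}{f^{2}} .
$$

For a hypothetical f = 40 mm, each millimeter of display travel therefore shifts the virtual image by roughly 0.6 D or more. This is why a few millimeters of actuation are enough. The same relation covers the focus-tunable variant (green arrows), where f changes while d' stays fixed.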

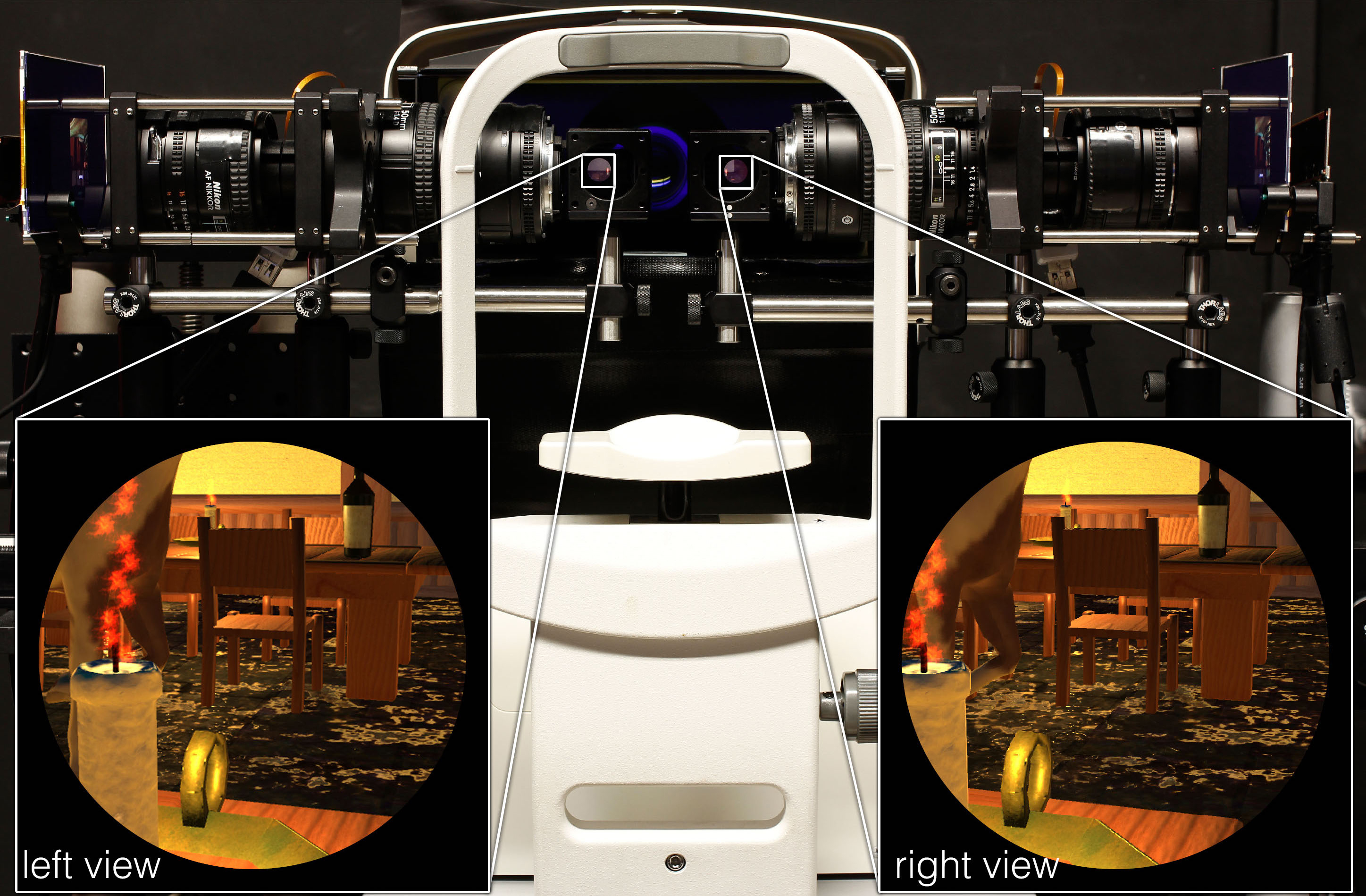

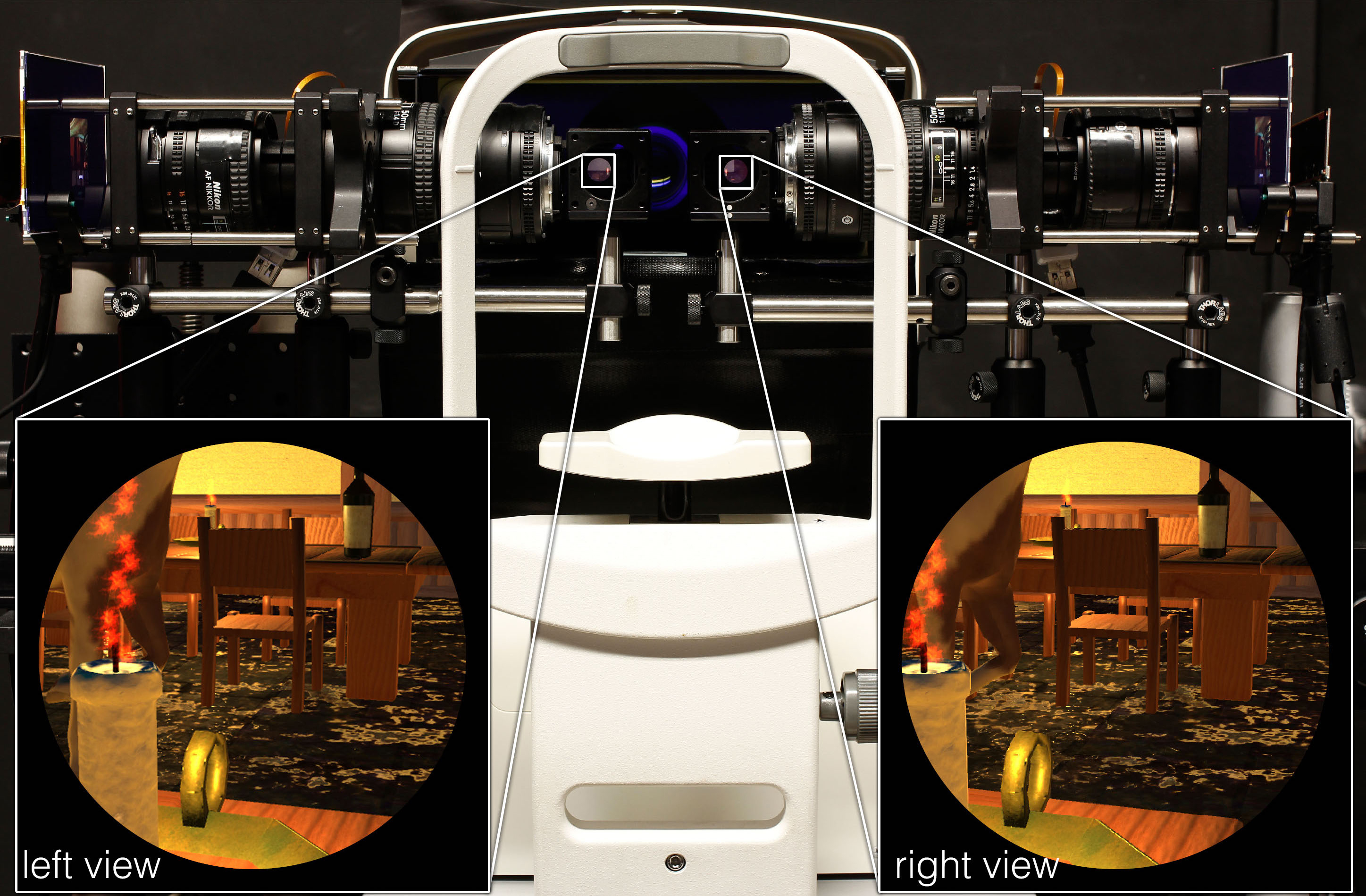

Prototype stereoscopic near-eye display with focus-tunable lenses and adjustable interpupillary distance via a translation stage. The system includes an autorefractor that continuously records the accommodative state of the user’s right eye at 4–5 Hz. Insets show example stereoscopic views.

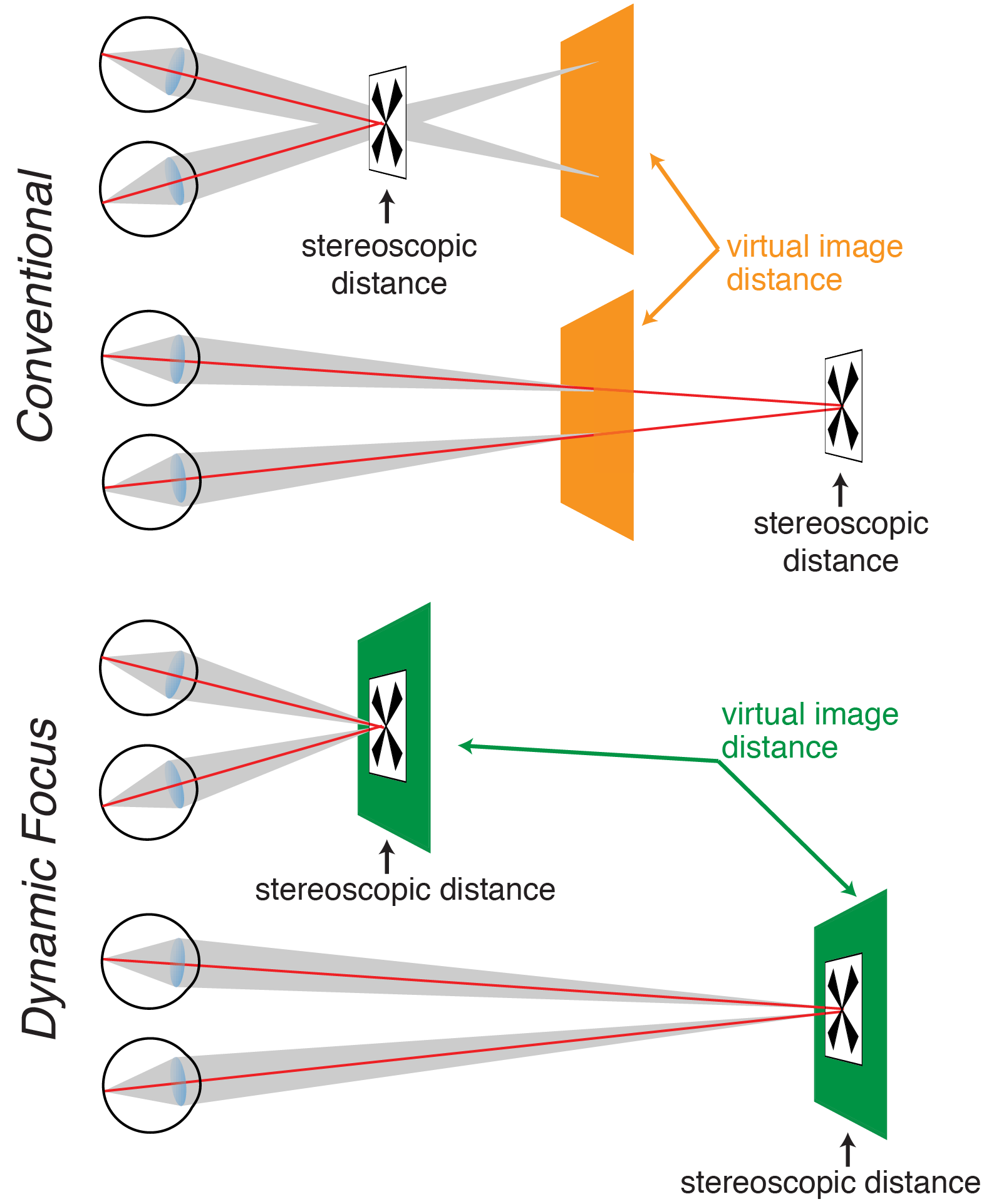

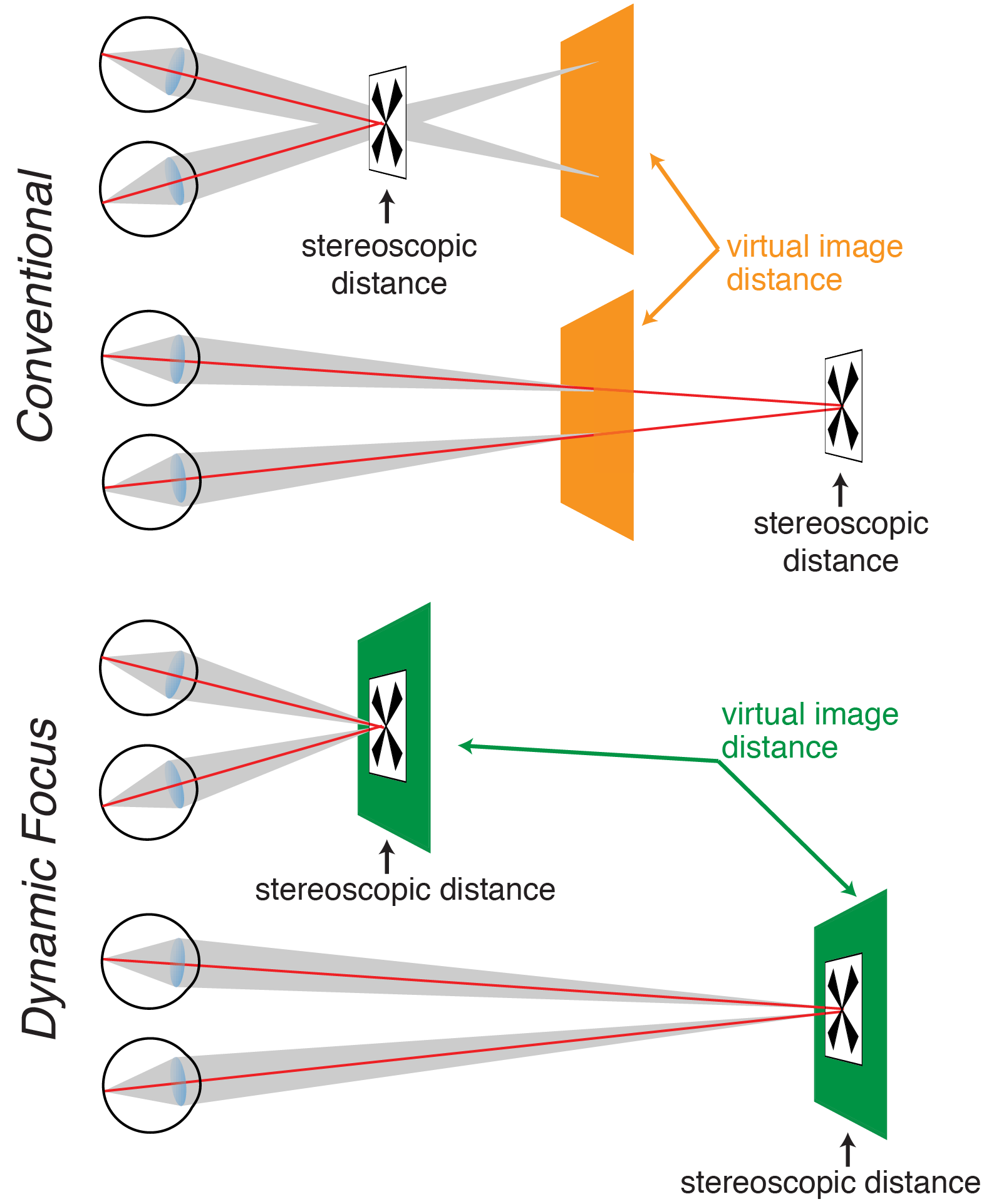

(top) The use of a fixed-focus lens in conventional near-eye displays means that the magnified virtual image appears at a constant distance (orange planes). However, by presenting different images to the two eyes, objects can be simulated at arbitrary stereoscopic distances. To see clearly and without double vision in VR, the user’s eyes must rotate to verge at the correct stereoscopic distance (red lines) while maintaining accommodation at the virtual image distance (gray areas). (bottom) In a dynamic focus display, the virtual image distance (green planes) is constantly updated to match the stereoscopic distance of the target. Thus, the vergence and accommodation distances can be matched.
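
The mismatch shown in the figure is often quantified in diopters: convert the vergence distance and the accommodation distance to 1/m and take their difference. The sketch below uses a hypothetical fixed virtual image distance of 2 m. It compares a conventional display with an idealized dynamic focus display that places the virtual image exactly at the target's stereoscopic distance, ignoring the finite focal range and motor latency.

```python
import math


def diopters(distance_m):
    """Optical distance in diopters (1/m); optical infinity maps to 0 D."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def vergence_accommodation_conflict(vergence_m, accommodation_m):
    """Absolute mismatch, in diopters, between where the eyes verge and where they must focus."""
    return abs(diopters(vergence_m) - diopters(accommodation_m))


# Hypothetical fixed virtual image distance of a conventional headset (not from the source).
FIXED_IMAGE_M = 2.0

if __name__ == "__main__":
    for target_m in (0.25, 0.5, 1.0, math.inf):
        # Conventional: accommodation is pinned to the fixed virtual image.
        conventional = vergence_accommodation_conflict(target_m, FIXED_IMAGE_M)
        # Idealized dynamic focus: the virtual image follows the target's stereoscopic distance.
        dynamic = vergence_accommodation_conflict(target_m, target_m)
        print(f"target {target_m} m: conventional {conventional:.2f} D, dynamic {dynamic:.2f} D")
```

With these assumed values, a target at 25 cm produces a 3.5 D conflict on the conventional display and none in the idealized dynamic mode.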

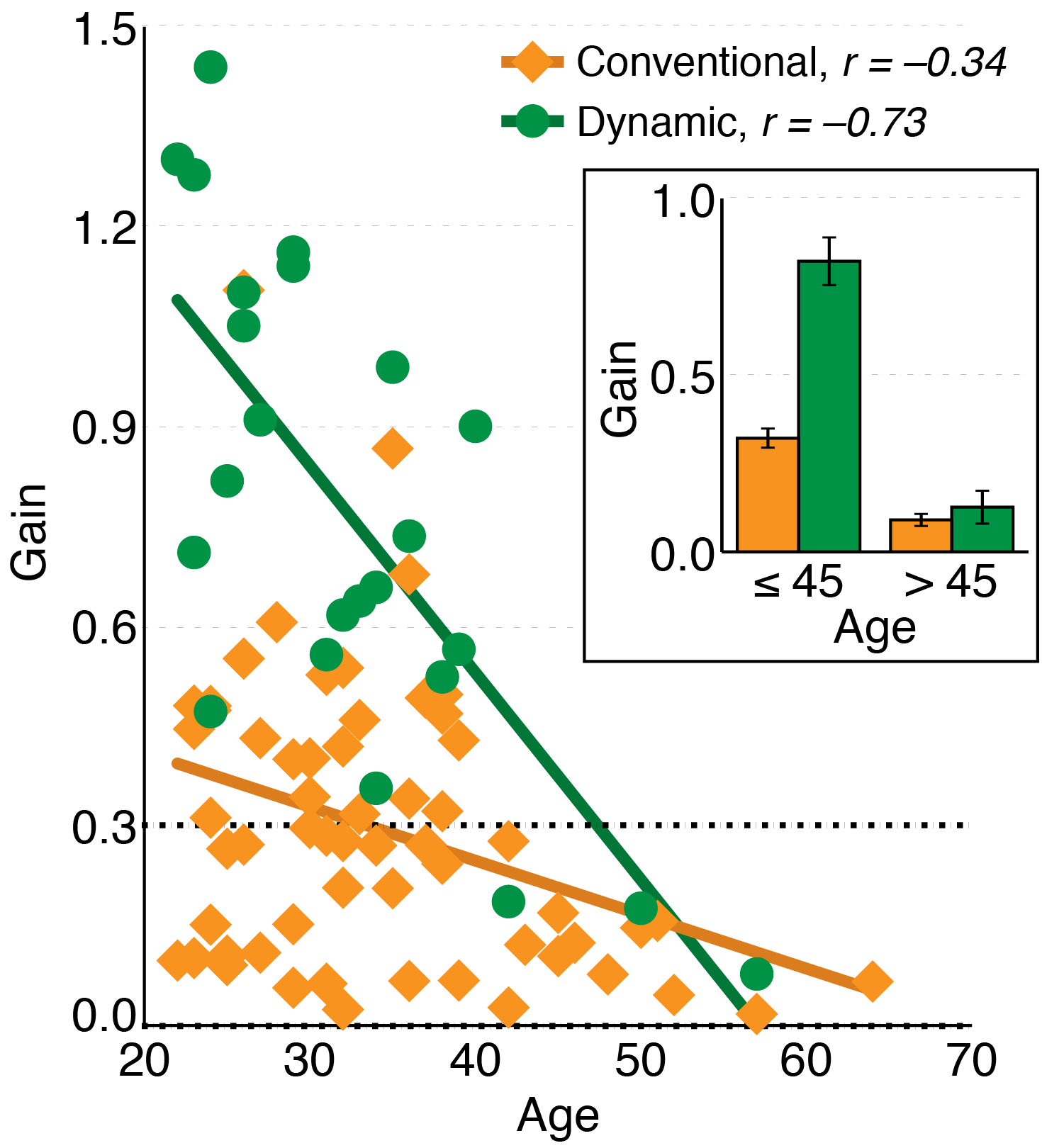

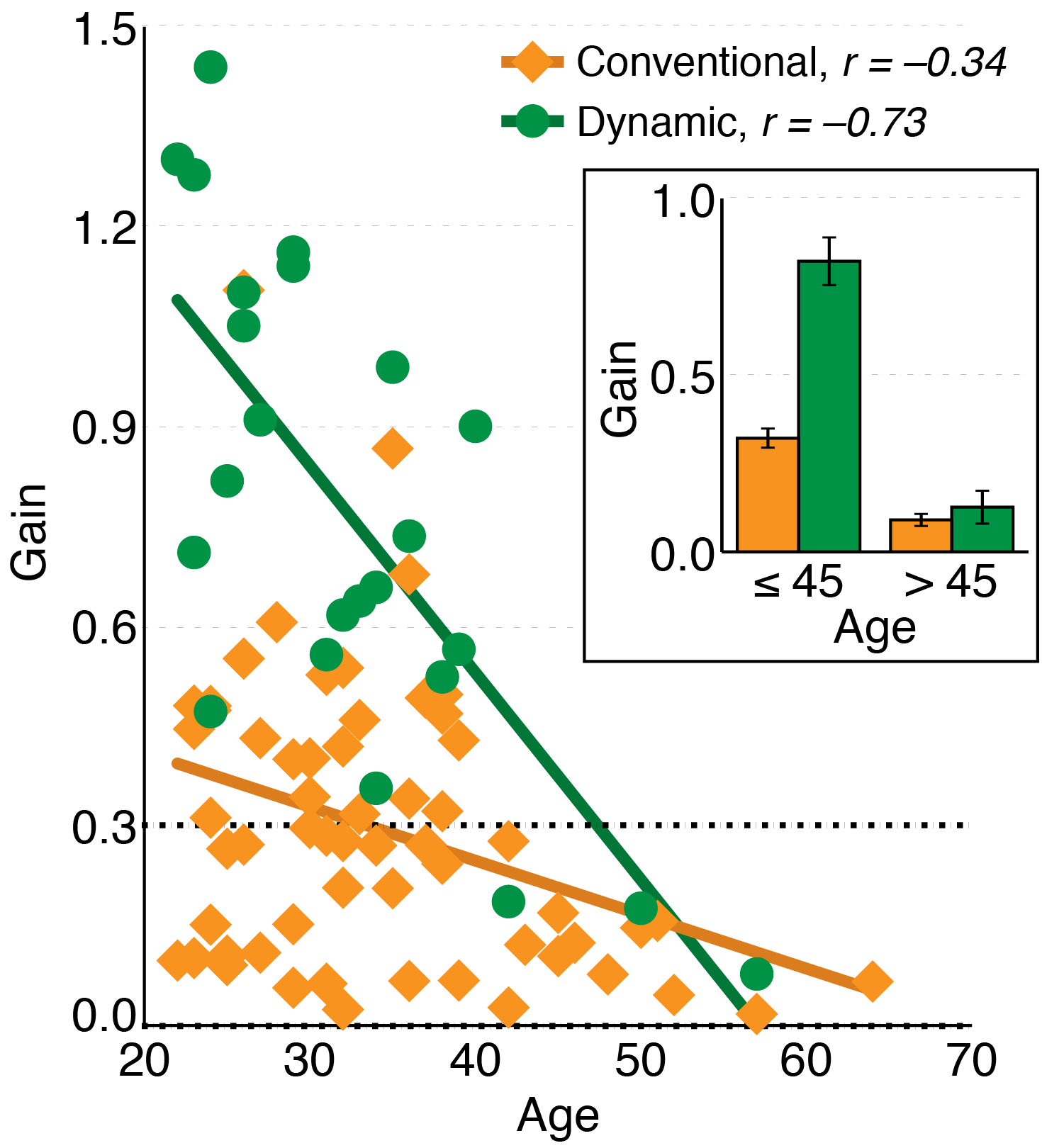

Accommodative responses were recorded under conventional and dynamic display modes while users watched a target move sinusoidally in depth. Plotted against user age, the accommodative gains show a clear downward trend with age and a higher response in the dynamic condition. The inset shows means and standard errors (SEs) of the gains for users grouped into younger and older cohorts, split at 45 years of age.
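
The analysis pipeline is not described in this section. One standard way to obtain a gain for a sinusoidal stimulus, assumed here, is to fit a sinusoid at the known stimulus frequency to both the stimulus and the recorded accommodation (in diopters) and take the ratio of their amplitudes. The sketch below uses plain least squares with no external dependencies. The function names, the 0.125 Hz frequency, and the synthetic data are hypothetical. The 4 Hz sampling mirrors the autorefractor rate quoted for the benchtop prototype.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, v):
    """Solve the 3x3 linear system m x = v with Cramer's rule."""
    d = _det3(m)
    if d == 0.0:
        raise ValueError("singular system: samples do not constrain the sinusoid")
    solution = []
    for k in range(3):
        # Replace column k of m with v.
        mk = [[v[i] if j == k else m[i][j] for j in range(3)] for i in range(3)]
        solution.append(_det3(mk) / d)
    return solution


def sinusoid_amplitude(t, y, freq_hz):
    """Least-squares fit y ~ a*sin(wt) + b*cos(wt) + c at a known frequency; return the amplitude."""
    w = 2.0 * math.pi * freq_hz
    basis = [(math.sin(w * ti), math.cos(w * ti), 1.0) for ti in t]
    # Normal equations (A^T A) x = A^T y for the three basis functions.
    ata = [[sum(r[i] * r[j] for r in basis) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * yi for r, yi in zip(basis, y)) for i in range(3)]
    a, b, _offset = _solve3(ata, aty)
    return math.hypot(a, b)


def accommodative_gain(t, stimulus_d, response_d, freq_hz):
    """Ratio of response amplitude to stimulus amplitude (both in diopters)."""
    return sinusoid_amplitude(t, response_d, freq_hz) / sinusoid_amplitude(t, stimulus_d, freq_hz)


if __name__ == "__main__":
    f = 0.125  # hypothetical stimulus frequency (Hz)
    t = [i / 4.0 for i in range(160)]  # 40 s sampled at 4 Hz
    stim = [2.0 + 1.5 * math.sin(2 * math.pi * f * ti) for ti in t]
    resp = [1.8 + 0.9 * math.sin(2 * math.pi * f * ti - 0.3) for ti in t]
    print(round(accommodative_gain(t, stim, resp, f), 3))  # 0.6
```

A gain of 1 would mean accommodation fully follows the stimulus. Fitting at the stimulus frequency makes the estimate insensitive to a constant offset and to phase lag. Real recordings would also need blink and dropout removal before fitting, which this sketch does not attempt.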

Related Projects

You may also be interested in related projects from our group on perceptual aspects of near-eye displays:

- R. Konrad et al. “Gaze-contingent Ocular Parallax Rendering for Virtual Reality”, ACM Transactions on Graphics 2020 (link)

- B. Krajancich et al. “Optimizing Depth Perception in Virtual and Augmented Reality through Gaze-contingent Stereo Rendering”, ACM SIGGRAPH Asia 2020 (link)

- N. Padmanaban et al. “Optimizing virtual reality for all users through gaze-contingent and adaptive focus displays”, PNAS 2017 (link)

and other next-generation near-eye display and wearable technology:

- Y. Peng et al. “Neural Holography with Camera-in-the-loop Training”, ACM SIGGRAPH 2020 (link)

- B. Krajancich et al. “Factored Occlusion: Single Spatial Light Modulator Occlusion-capable Optical See-through Augmented Reality Display”, IEEE TVCG 2020 (link)

- N. Padmanaban et al. “Autofocals: Evaluating Gaze-Contingent Eyeglasses for Presbyopes”, Science Advances 2019 (link)

- K. Rathinavel et al. “Varifocal Occlusion-Capable Optical See-through Augmented Reality Display based on Focus-tunable Optics”, IEEE TVCG 2019 (link)

- R. Konrad et al. “Accommodation-invariant Computational Near-eye Displays”, ACM SIGGRAPH 2017 (link)

- R. Konrad et al. “Novel Optical Configurations for Virtual Reality: Evaluating User Preference and Performance with Focus-tunable and Monovision Near-eye Displays”, ACM SIGCHI 2016 (link)

- F.C. Huang et al. “The Light Field Stereoscope: Immersive Computer Graphics via Factored Near-Eye Light Field Display with Focus Cues”, ACM SIGGRAPH 2015 (link)