Abstract

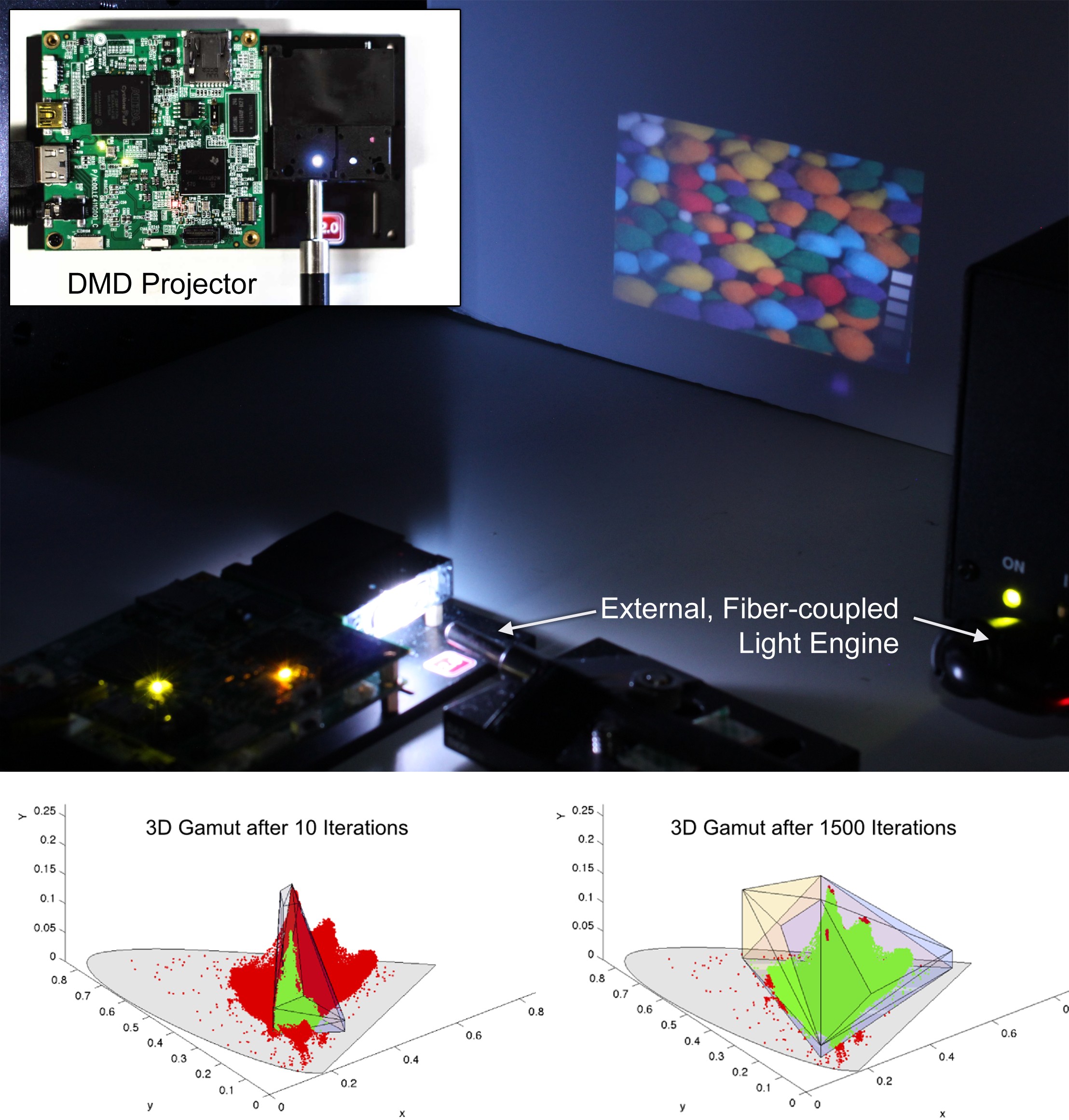

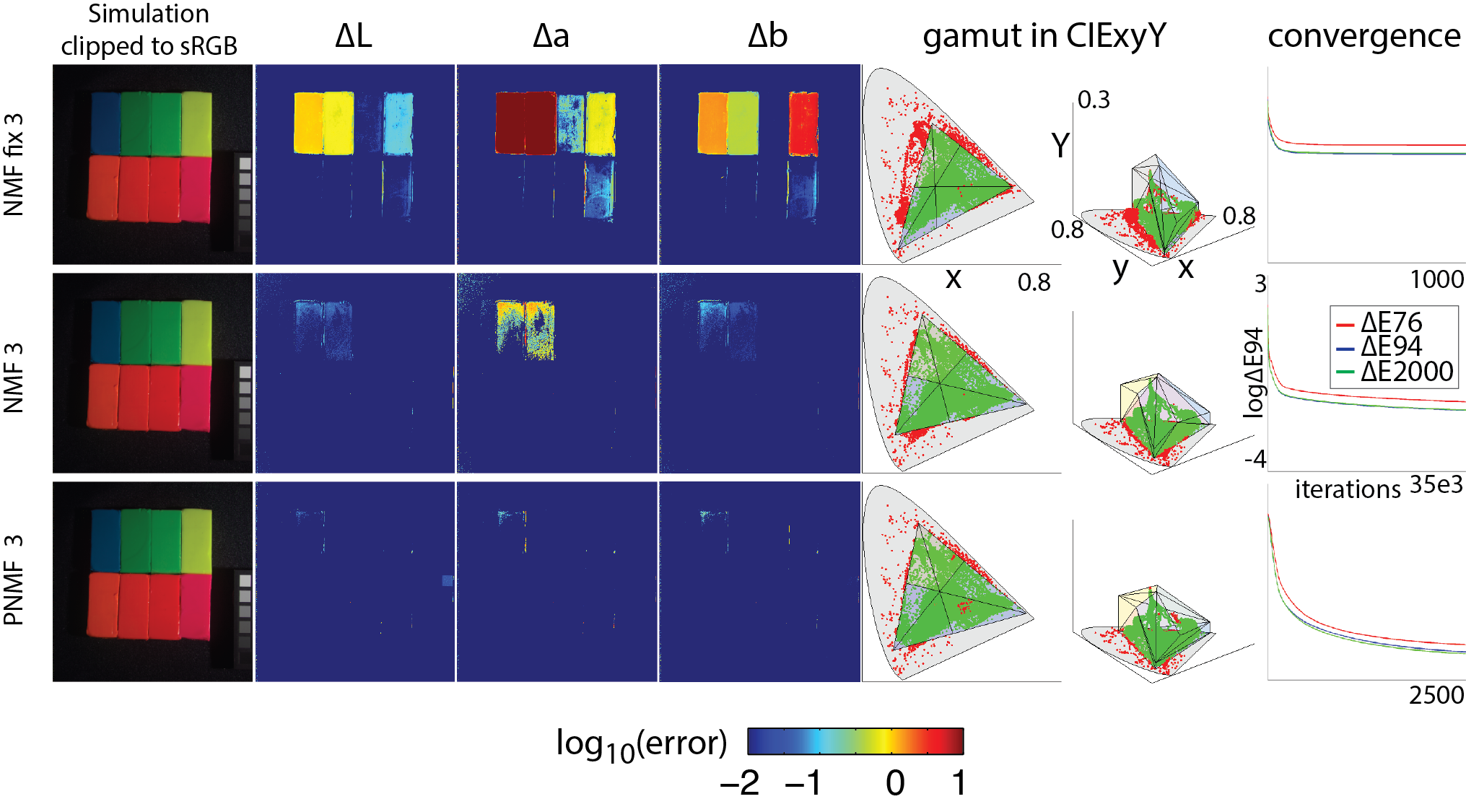

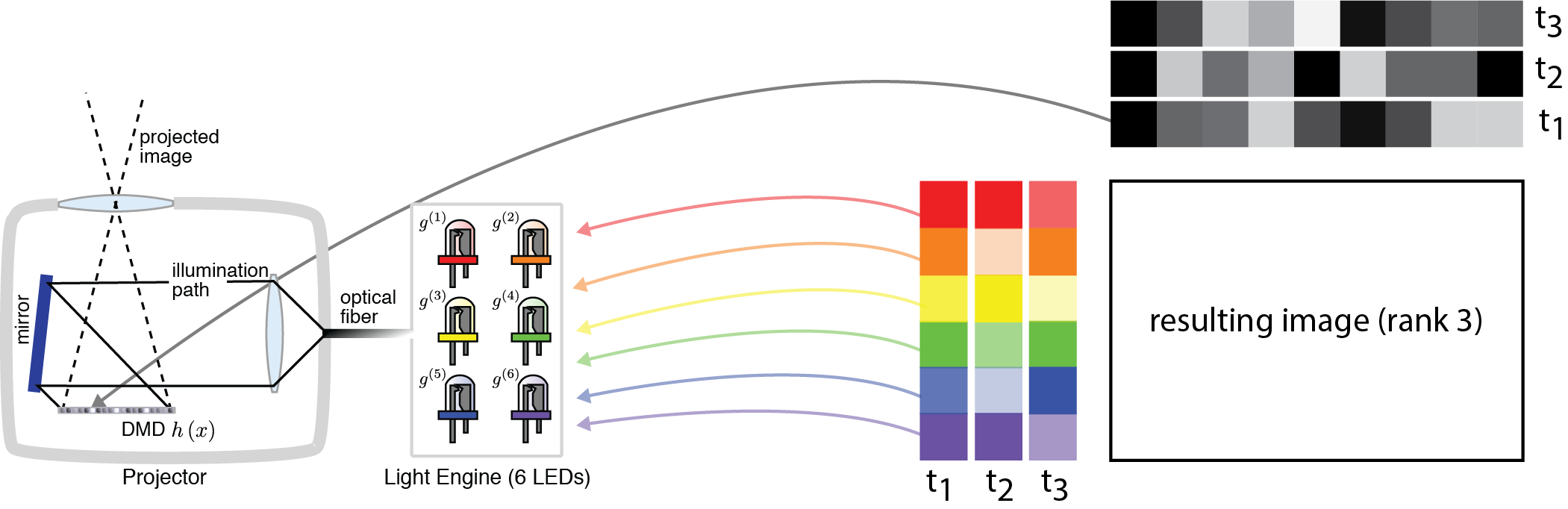

Fundamental display characteristics are constantly being improved, especially resolution, dynamic range, and color reproduction. However, whereas high-resolution and high-dynamic-range displays have matured as technologies, it remains largely unclear how to extend the color gamut of a display without sacrificing light throughput or making other tradeoffs. In this paper, we advocate for adaptive color display: with hardware implementations that allow color primaries to be dynamically chosen, an optimal gamut and the corresponding pixel states can be computed in a content-adaptive and user-centric manner. We build a flexible-gamut projector and develop a perceptually driven optimization framework that robustly factors a wide-color-gamut target image into a set of time-multiplexed primaries and corresponding pixel values. We demonstrate that adaptive primary selection has many benefits over fixed gamut selection and show that our algorithm for joint primary selection and gamut mapping performs better than existing methods. Finally, we evaluate the proposed computational display system extensively in simulation and, via photographs and user experiments, with a prototype adaptive color projector.