ABSTRACT

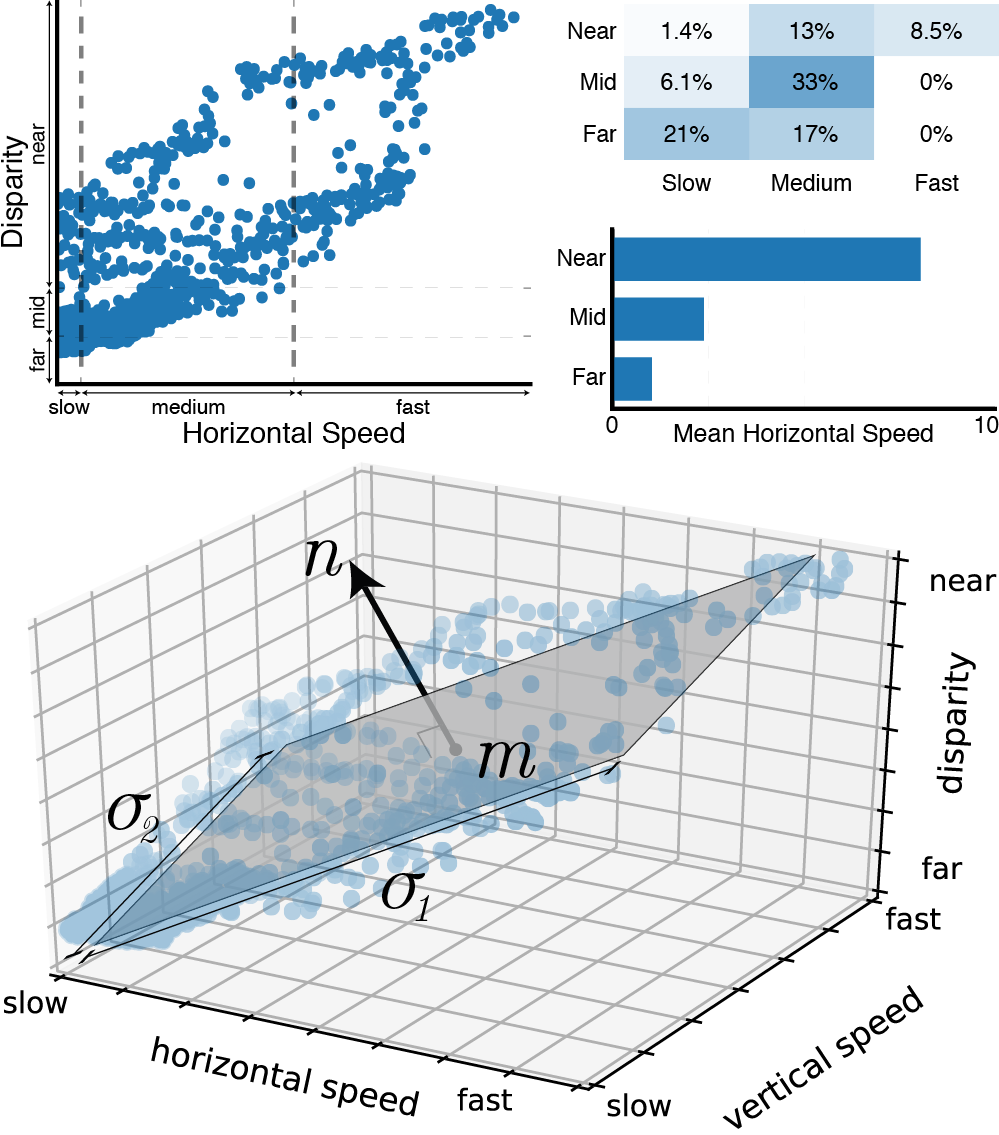

Virtual reality systems are widely believed to be the next major computing platform. There are, however, some barriers to adoption that must be addressed, such as motion sickness, which can lead to undesirable symptoms including postural instability, headaches, and nausea. Motion sickness in virtual reality occurs when moving visual stimuli cause users to perceive self-motion while they remain stationary in the real world. Several factors contribute to both this perception of motion and the subsequent onset of sickness, including field of view, motion velocity, and stimulus depth. We first verify that differences in vection due to relative stimulus depth remain correlated with sickness. Then, we build a dataset of stereoscopic 3D videos and their corresponding sickness ratings in order to quantify their nauseogenicity, and we make this dataset available for future use. Using this dataset, we train a machine learning algorithm on hand-crafted features (quantifying speed, direction, and depth as functions of time) extracted from each video, learning the contributions of these features to the sickness ratings. Our predictor generally outperforms a naïve estimate, but is ultimately limited by the size of the dataset. Nevertheless, our result is promising and opens the door to future work with more extensive datasets. This and further advances in this space have the potential to alleviate developer and end-user concerns about motion sickness in the increasingly commonplace virtual world.