ABSTRACT

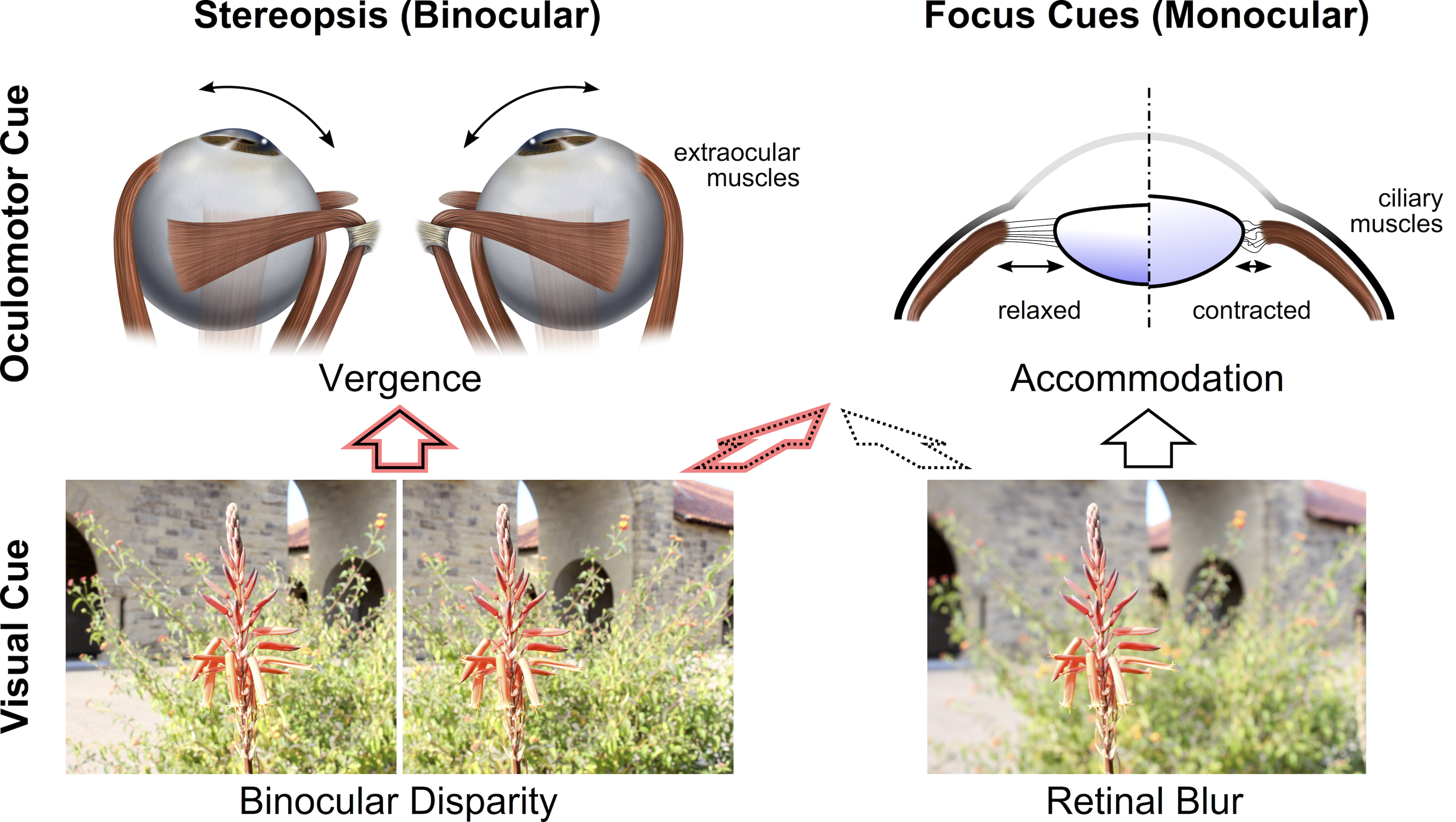

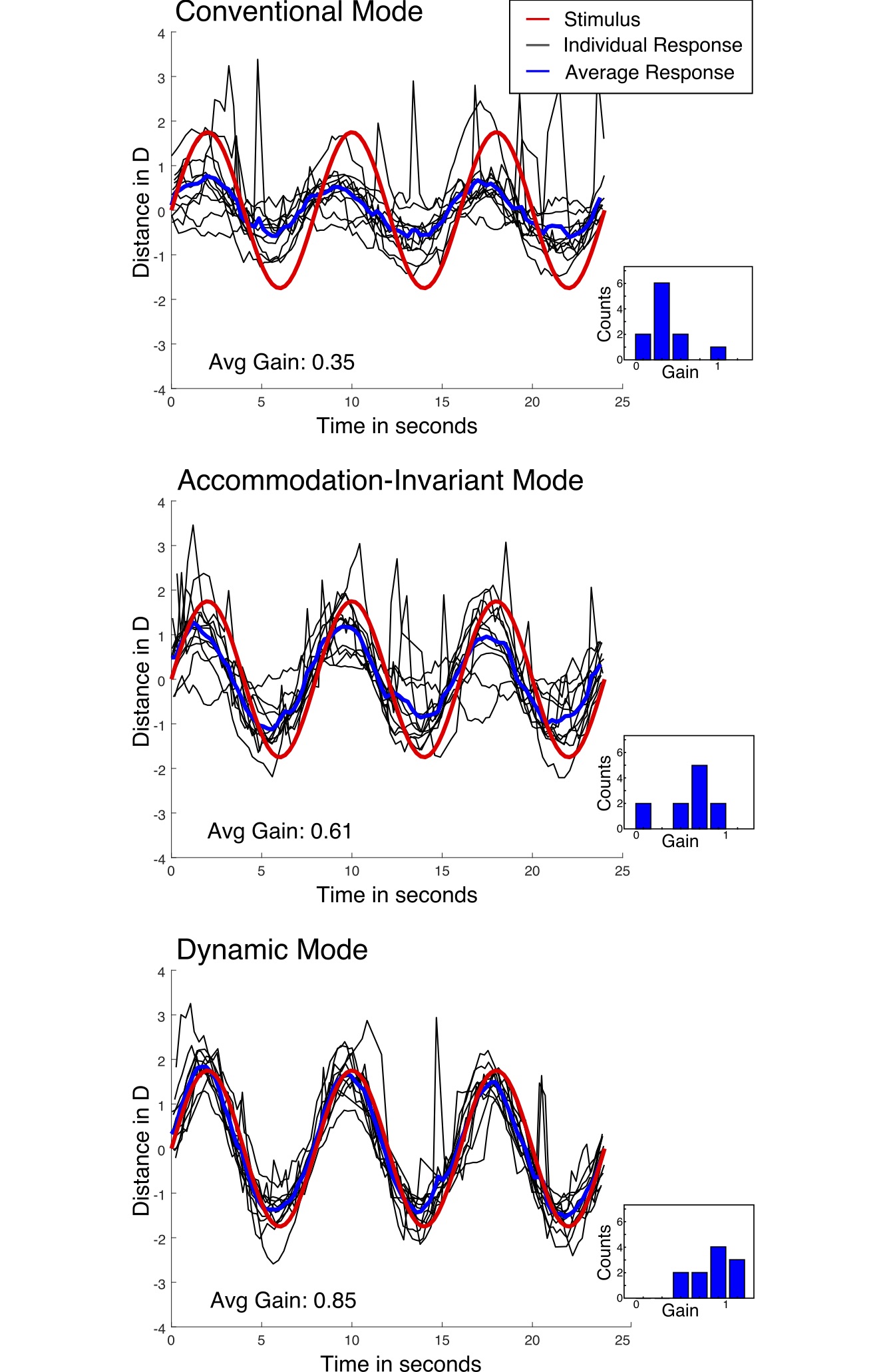

Although emerging virtual and augmented reality (VR/AR) systems can produce highly immersive experiences, they can also cause visual discomfort, eyestrain, and nausea. One source of these symptoms is a mismatch between vergence and focus cues. In current VR/AR near-eye displays, a stereoscopic image pair drives the vergence state of the human visual system to arbitrary distances, but the accommodation, or focus, state of the eyes is optically driven towards a fixed distance. In this work, we introduce a new display technology, dubbed accommodation-invariant (AI) near-eye displays, to improve the consistency of depth cues in near-eye displays. Rather than producing correct focus cues, AI displays are optically engineered to produce visual stimuli that are invariant to the accommodation state of the eye. The accommodation system can then be driven by stereoscopic cues, and the mismatch between the vergence and accommodation states of the eyes is significantly reduced. We validate the principle of operation of AI displays using a prototype display that allows the accommodation state of users to be measured while they view visual stimuli in multiple display modes.