ABSTRACT

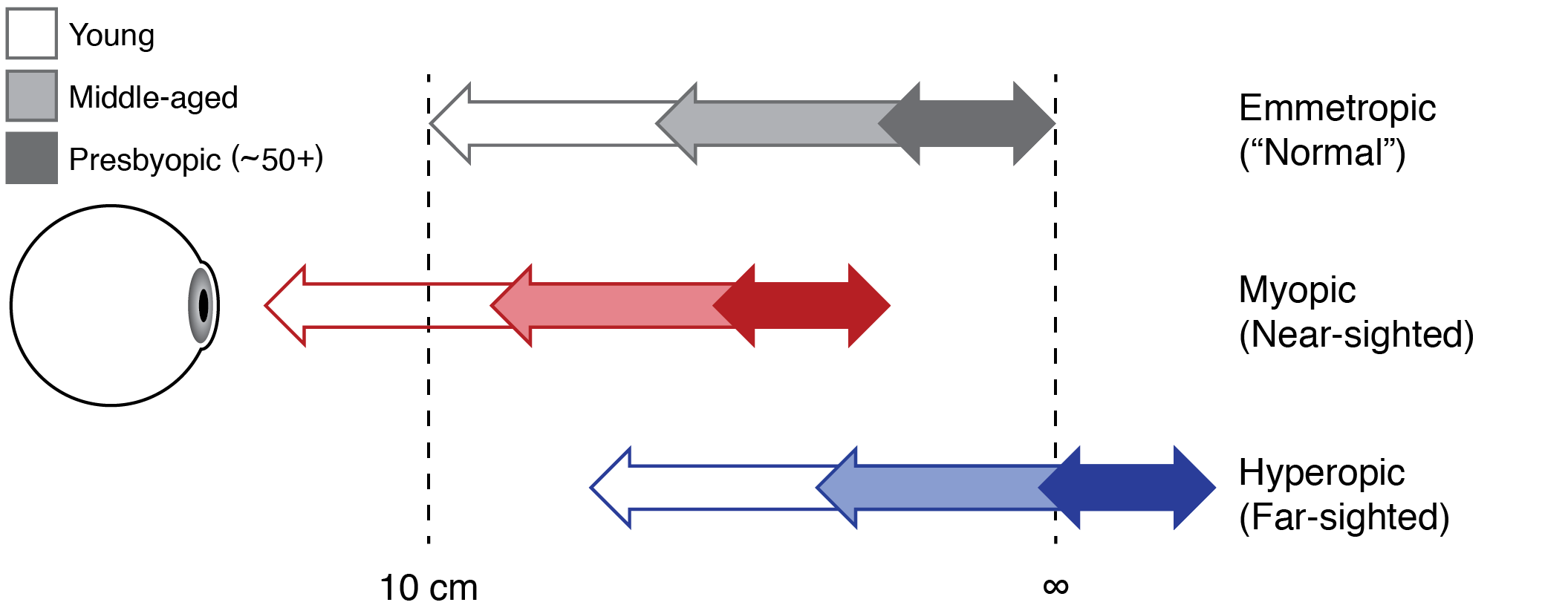

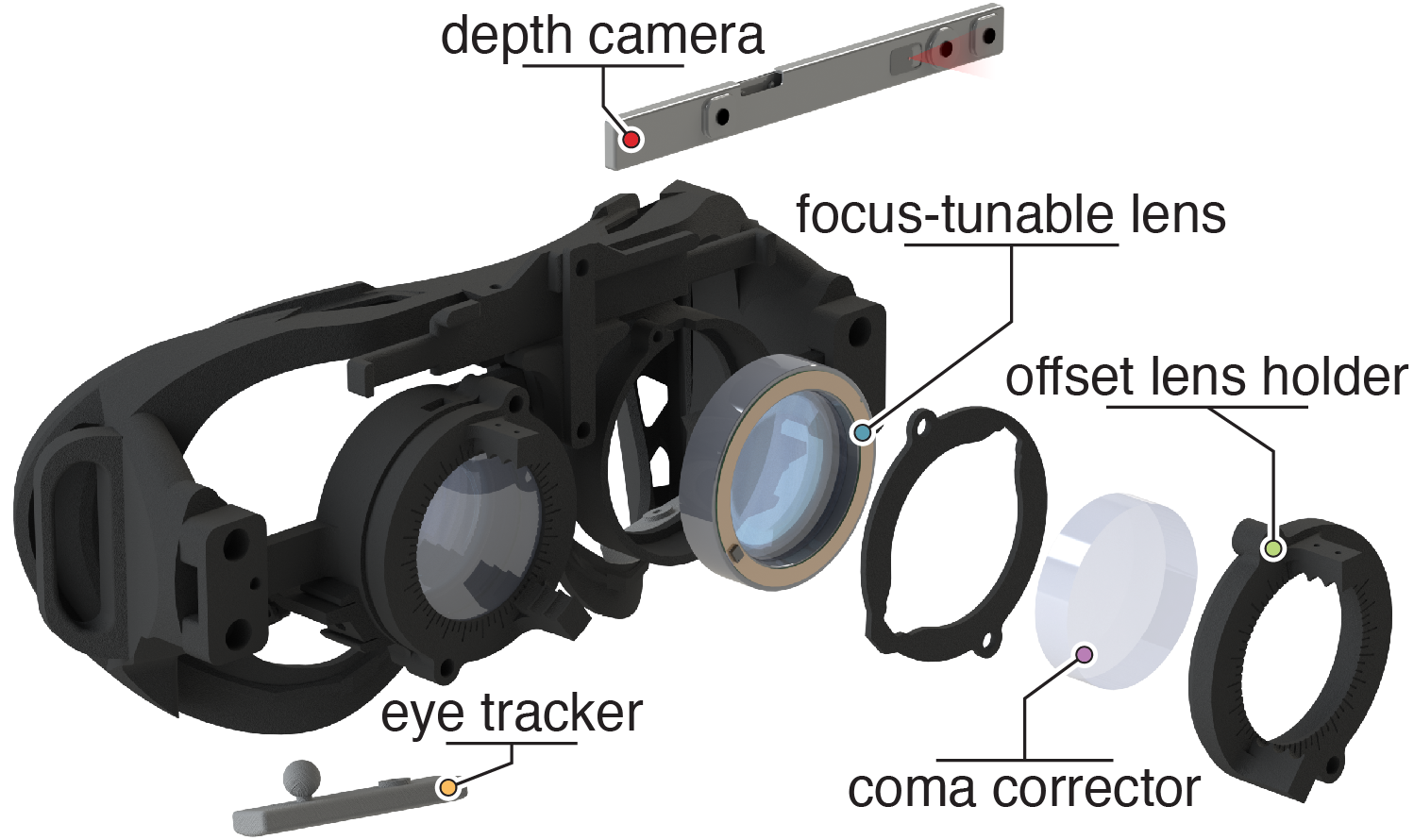

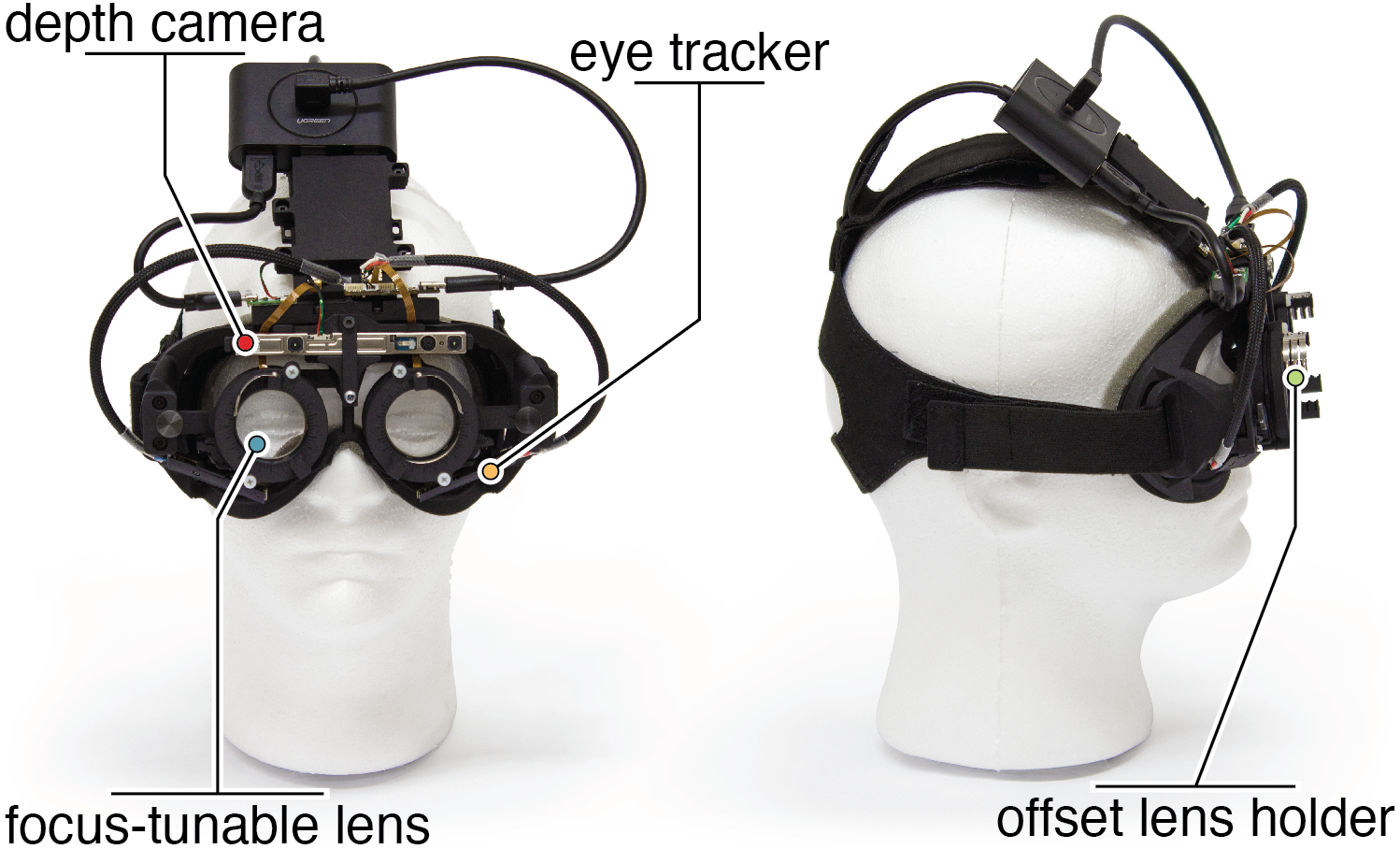

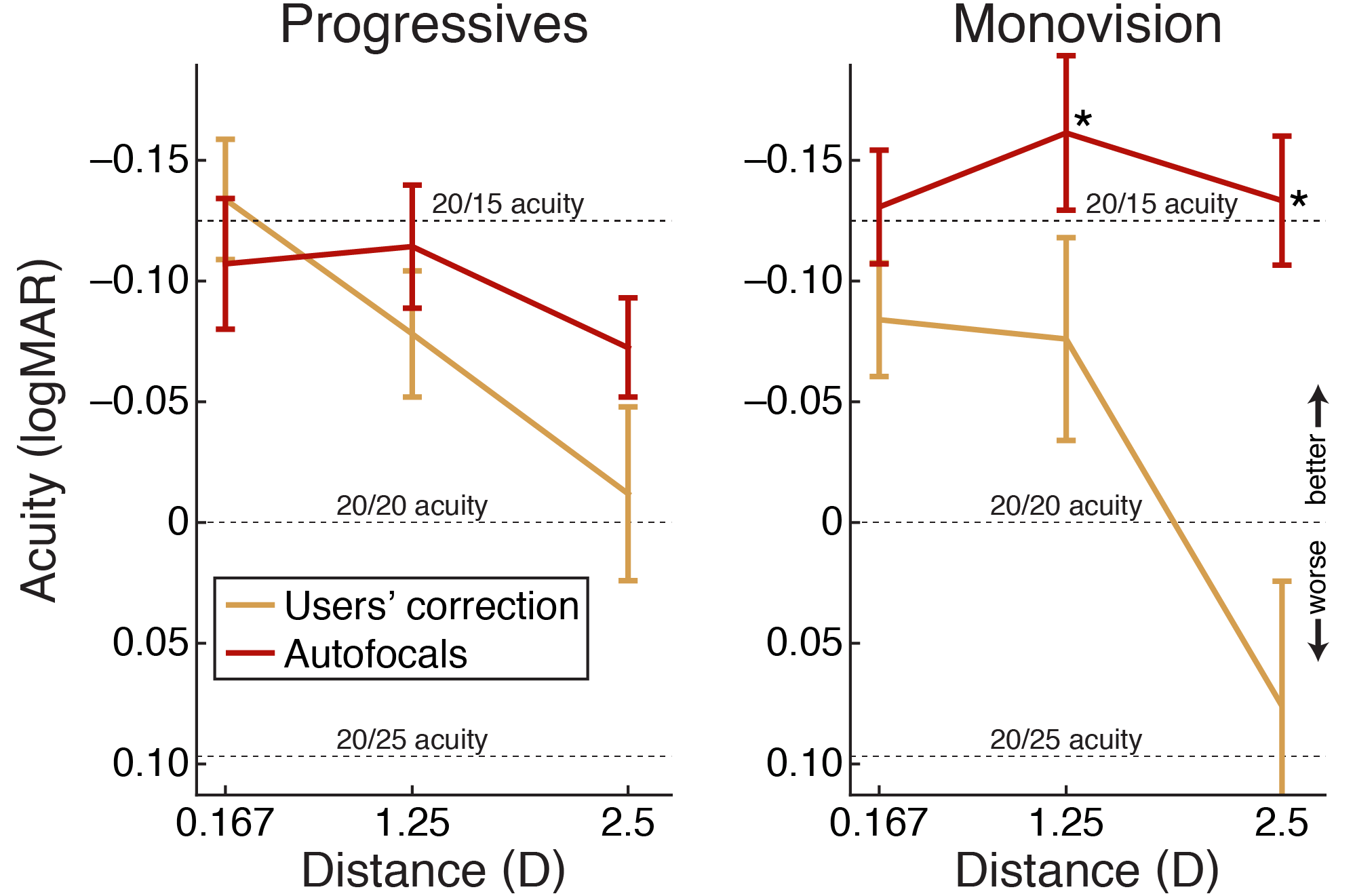

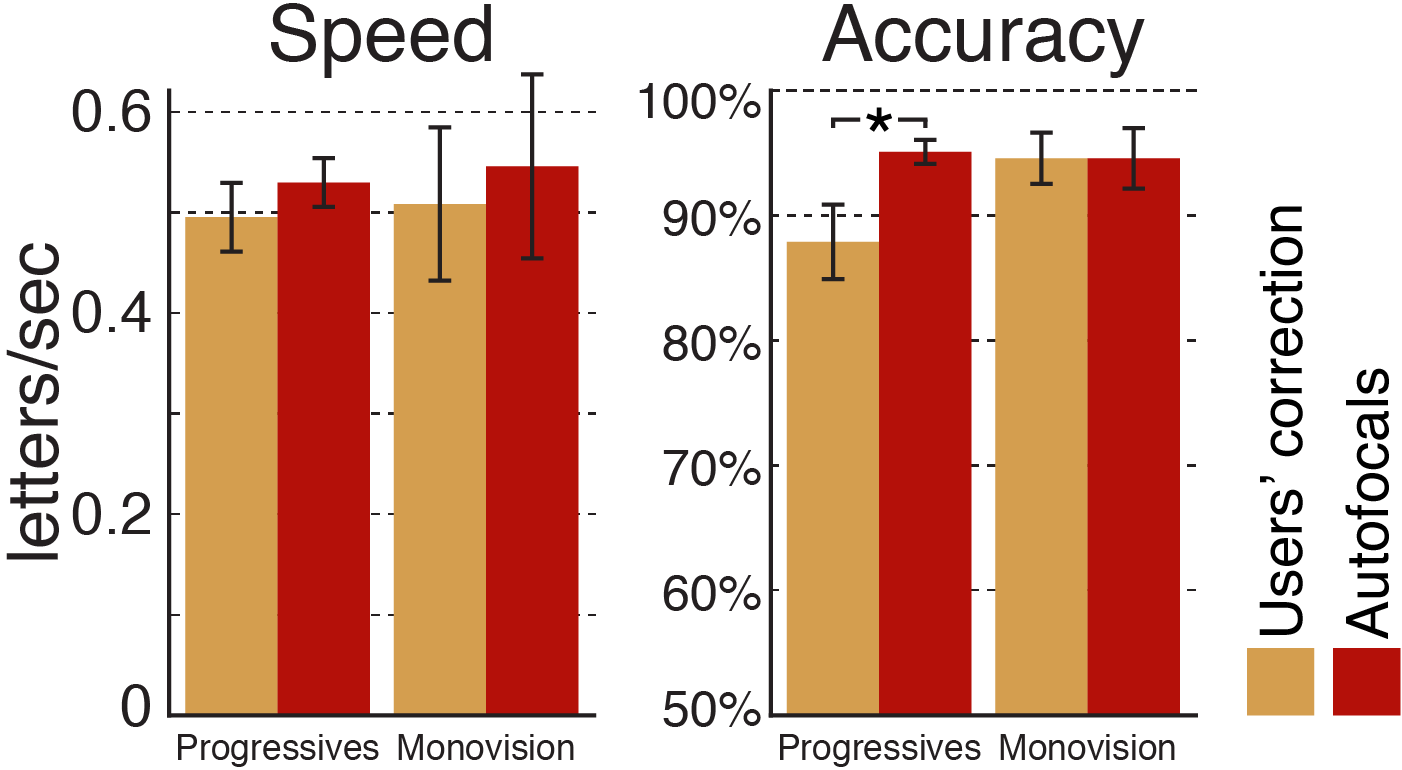

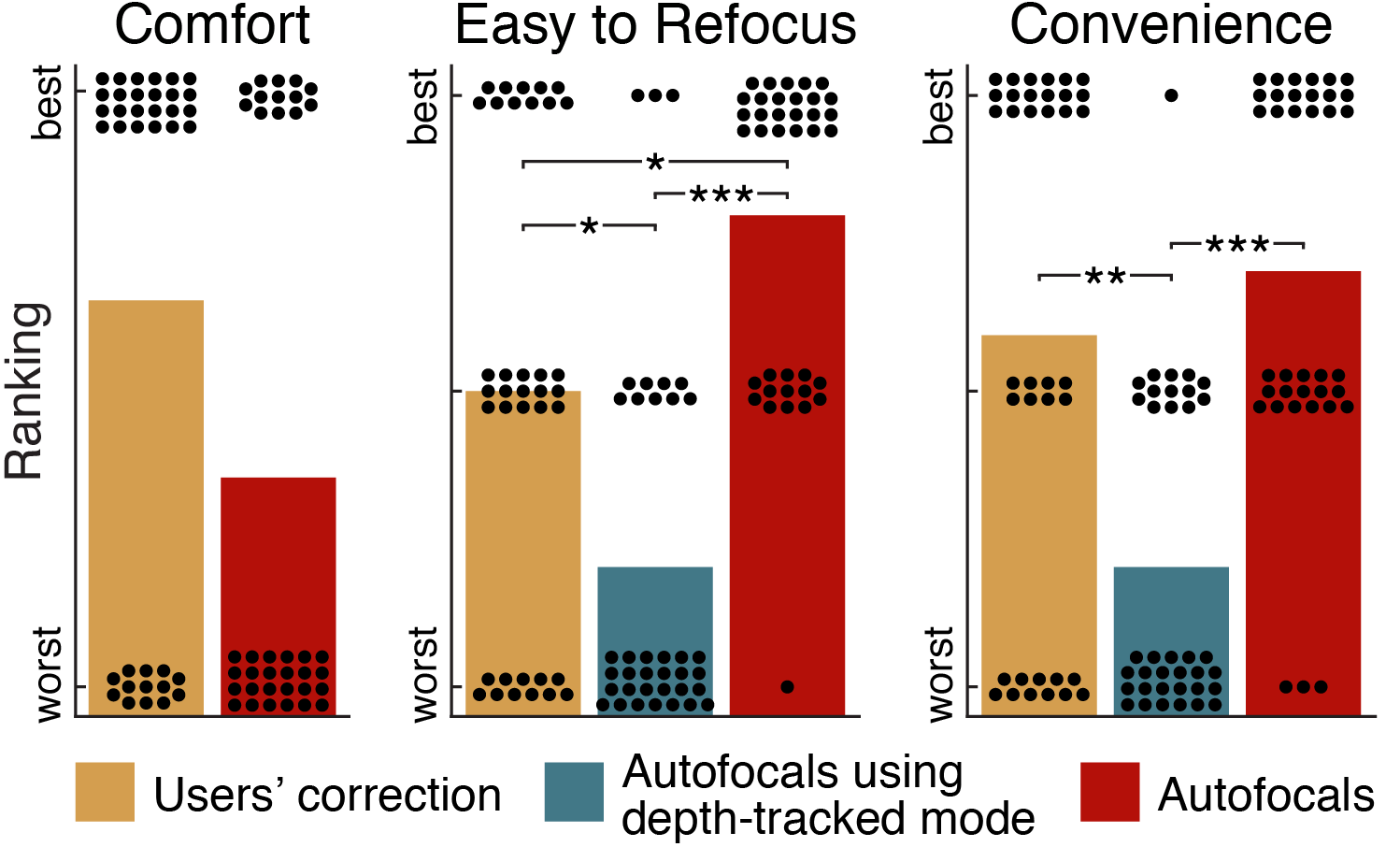

As humans age, they gradually lose the ability to accommodate, or refocus, to near distances because of the stiffening of the crystalline lens. This condition, known as presbyopia, affects nearly 20% of people worldwide. We design and build a new presbyopia correction, autofocals, to externally mimic the natural accommodation response, combining eye tracker and depth sensor data to automatically drive focus-tunable lenses. We evaluated 19 users on visual acuity, contrast sensitivity, and a refocusing task. Autofocals exhibit better visual acuity when compared to monovision and progressive lenses while maintaining similar contrast sensitivity. On the refocusing task, autofocals are faster and, compared to progressives, also significantly more accurate. In a separate study, a majority of 23 of 37 users ranked autofocals as the best correction in terms of ease of refocusing. Our work demonstrates the superiority of autofocals over current forms of presbyopia correction and could affect the lives of millions.
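The control idea in the abstract (estimate where the wearer is looking, then set the focus-tunable lenses accordingly) can be illustrated with a minimal sketch. It is not the authors' implementation. The function names, the rule of preferring a depth-sensor sample over the vergence estimate, and the 3 D tuning range are assumptions made for illustration. The sketch relies only on two standard relations: a symmetric fixation point at distance d subtends a vergence angle θ with tan(θ/2) = (IPD/2)/d, and focusing at d meters requires 1/d diopters of added power for an eye that cannot accommodate.

```python
import math


def vergence_distance_m(ipd_m, vergence_angle_rad):
    """Distance to a symmetric fixation point, from the angle between the two gaze rays."""
    if vergence_angle_rad <= 0.0:
        return math.inf  # parallel (or diverging) gaze rays: treat as optical infinity
    return (ipd_m / 2.0) / math.tan(vergence_angle_rad / 2.0)


def fixation_distance_m(vergence_m, depth_m=None):
    """Hypothetical fusion rule: use the depth sample at the gaze point if valid,
    otherwise fall back to the vergence-based estimate."""
    if depth_m is not None and math.isfinite(depth_m) and depth_m > 0.0:
        return depth_m
    return vergence_m


def lens_power_diopters(distance_m, max_power_d=3.0):
    """Added lens power for a wearer with no remaining accommodation.
    max_power_d is an assumed tuning range, not a value from the paper."""
    if distance_m <= 0.0:
        raise ValueError("distance must be positive")
    return min(max(1.0 / distance_m, 0.0), max_power_d)


if __name__ == "__main__":
    ipd = 0.064  # assumed interpupillary distance in meters
    angle = 2.0 * math.atan((ipd / 2.0) / 0.5)  # gaze converging at 0.5 m
    d = fixation_distance_m(vergence_distance_m(ipd, angle), depth_m=None)
    print(f"fixation distance: {d:.3f} m, lens power: {lens_power_diopters(d):.2f} D")
```

A real system would also need to filter noisy gaze samples and account for the wearer's distance prescription, both of which this sketch leaves out.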

CITATION

N. Padmanaban, R. Konrad, G. Wetzstein, “Autofocals: Evaluating gaze-contingent eyeglasses for presbyopes”, Sci. Adv. 5, eaav6187 (2019).

BibTeX

@article{Padmanaban:2019:Autofocals,
  title={Autofocals: Evaluating Gaze-Contingent Eyeglasses for Presbyopes},
  author={Padmanaban, Nitish and Konrad, Robert and Wetzstein, Gordon},
  journal={Science Advances},
  year={2019},
  volume={5},
  number={6},
  pages={eaav6187}
}

Acknowledgements

We would like to thank E. Wu, J. Griffin, and E. Peng for help with CAD, depth error characterization, and coma corrector fabrication; D. Lindell and J. Chang for helpful comments on an earlier draft of the manuscript; and A. Norcia for insightful discussions. N.P. was supported by the National Science Foundation (NSF) Graduate Research Fellowships Program. R.K. was supported by the NVIDIA Graduate Fellowship. G.W. was supported by an Okawa Research Grant and a Sloan Fellowship. Other funding for the project was provided by NSF (award numbers 1553333 and 1839974) and Intel.