ABSTRACT

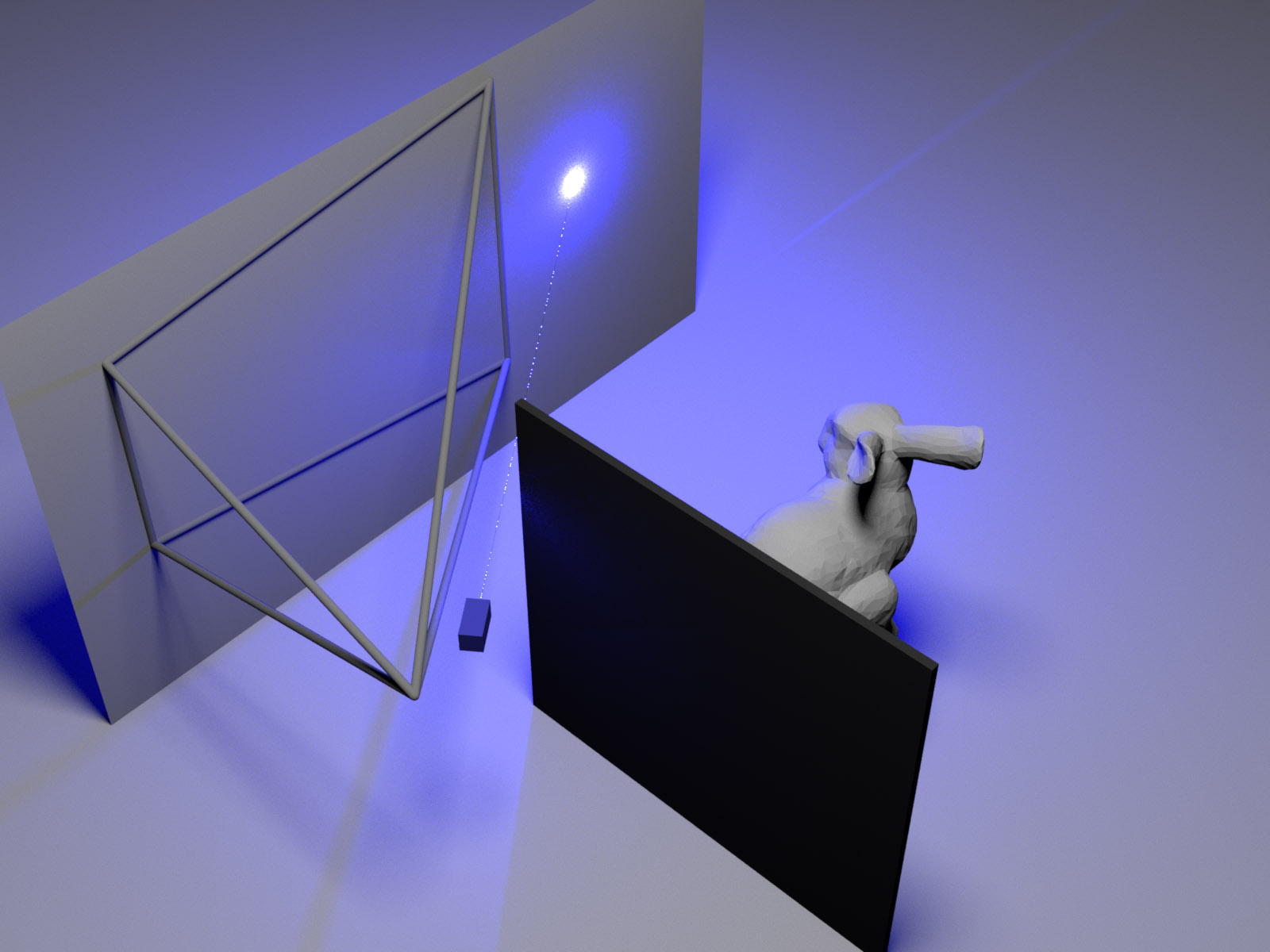

Imaging objects obscured by occluders is a significant challenge for many applications. A camera that could “see around corners” could help improve navigation and mapping capabilities of autonomous vehicles or make search and rescue missions more effective. Time-resolved single-photon imaging systems have recently been demonstrated to record optical information of a scene that can lead to an estimation of the shape and reflectance of objects hidden from the line of sight of a camera. However, existing non-line-of-sight (NLOS) reconstruction algorithms have been constrained in the types of light transport effects they model for the hidden scene parts. We introduce a factored NLOS light transport representation that accounts for partial occlusions and surface normals. Based on this model, we develop a factorization approach for inverse time-resolved light transport and demonstrate high-fidelity NLOS reconstructions for challenging scenes both in simulation and with an experimental NLOS imaging system.

FILES

- technical paper (link)

- technical paper supplement (link)

- SIGGRAPH 2019 presentation slides (below)

Datasets

Download the captured data here: link

It took a lot of effort to build and calibrate this hardware setup and to capture these data. Feel free to use our datasets in your own projects, but please acknowledge our work by citing the following papers:

- Matthew O’Toole, Felix Heide, David B. Lindell, Kai Zang, Steven Diamond, and Gordon Wetzstein. 2017. Reconstructing transient images from single-photon sensors. In Proc. CVPR. (link)

- Matthew O’Toole, David B. Lindell, and Gordon Wetzstein. 2018. Confocal non-line-of-sight imaging based on the light-cone transform. Nature 555, 7696, 338. (link)

- Felix Heide, Matthew O’Toole, Kai Zang, David B. Lindell, Steven Diamond, and Gordon Wetzstein. 2019. Non-line-of-sight imaging with partial occluders and surface normals. ACM Trans. Graph. (link)

- David B. Lindell, Gordon Wetzstein, and Matthew O’Toole. 2019. Wave-based non-line-of-sight imaging using fast f-k migration. ACM Trans. Graph. (SIGGRAPH) 38, 4, 116. (link)

CITATION

Felix Heide, Matthew O’Toole, Kai Zang, David B. Lindell, Steven Diamond, and Gordon Wetzstein. 2019. Non-line-of-sight Imaging with Partial Occluders and Surface Normals. ACM Trans. Graph. (SIGGRAPH).

BibTeX

@article{Heide:2019:OcclusionNLOS,
  author = {Felix Heide and Matthew O'Toole and Kai Zang and David B. Lindell and Steven Diamond and Gordon Wetzstein},
  title = {Non-line-of-sight Imaging with Partial Occluders and Surface Normals},
  journal = {ACM Trans. Graph.},
  year = {2019}
}