ABSTRACT

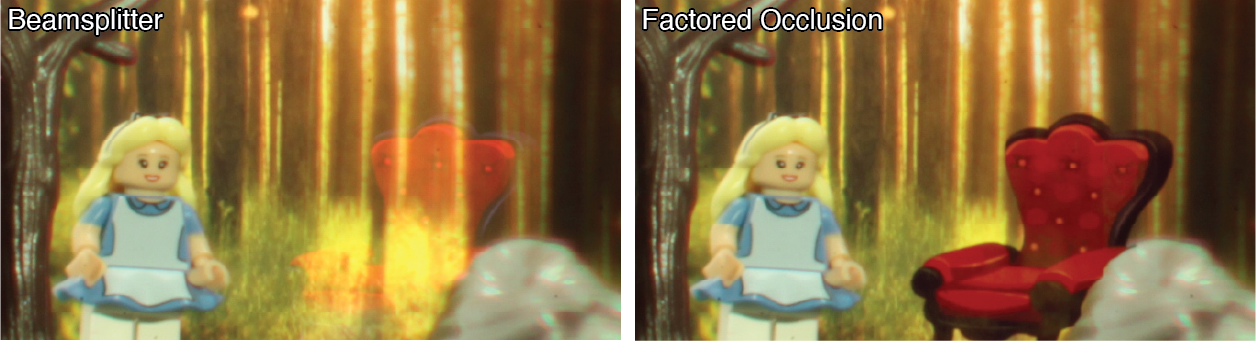

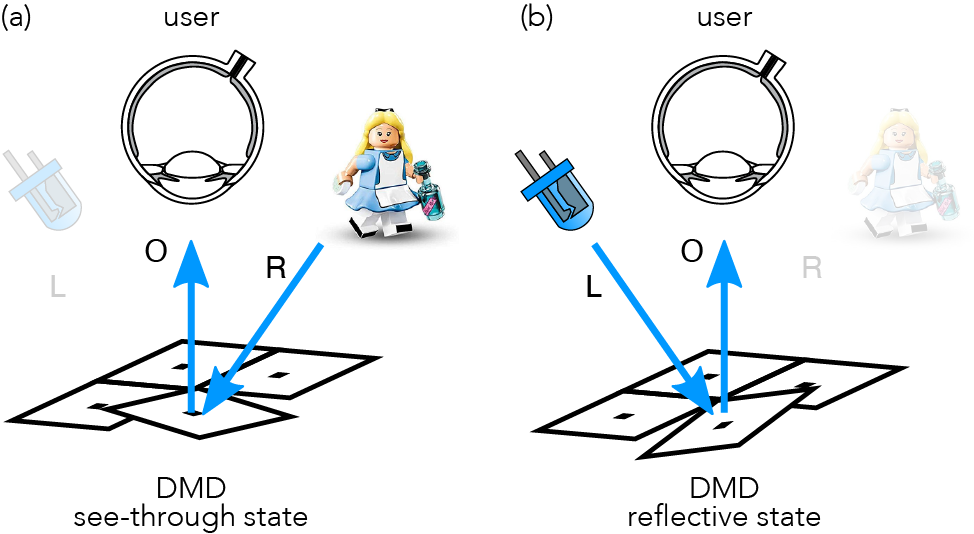

Occlusion is a powerful visual cue that is crucial for depth perception and realism in optical see-through augmented reality (OST-AR). However, existing OST-AR systems additively overlay physical and digital content with beam combiners – an approach that does not easily support mutual occlusion, resulting in virtual objects that appear semi-transparent and unrealistic. In this work, we propose a new type of occlusion-capable OST-AR system. Rather than additively combining the real and virtual worlds, we employ a single digital micromirror device (DMD) to merge the respective light paths in a multiplicative manner. This approach allows us to block light incident from the physical scene on a pixel-by-pixel basis while simultaneously modulating the light emitted by a light-emitting diode (LED) to display digital content. Our technique builds on mixed binary/continuous factorization algorithms to optimize time-multiplexed binary DMD patterns and their corresponding LED colors so that their time average approximates a target augmented reality (AR) scene. In simulations and with a prototype benchtop display, we demonstrate hard-edge occlusions, plausible shadows, and gaze-contingent optimization of this novel display mode, which requires only a single spatial light modulator.
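To make the time-multiplexed image formation concrete, the following is a minimal NumPy sketch, not the implementation used in this work. It assumes that in each subframe every DMD mirror either passes light from the physical scene (state 1) or directs LED light to the viewer (state 0), and that the viewer perceives the average over subframes. The function names `render`, `loss`, and `greedy_dmd_sweep` are hypothetical, and the greedy sweep updates only the binary patterns with the LED colors held fixed; a full mixed binary/continuous factorization would alternate this with an update of the continuous LED colors.

```python
import numpy as np


def render(scene, dmd, led):
    """Time-averaged image perceived by the viewer (assumed model).

    scene: (H, W, 3) light from the physical scene, values in [0, 1]
    dmd:   (T, H, W) binary patterns; 1 = mirror passes scene light,
           0 = mirror shows LED light (assumed convention)
    led:   (T, 3) LED color for each subframe, values in [0, 1]
    """
    mask = dmd[..., None].astype(float)  # (T, H, W, 1)
    subframes = mask * scene[None] + (1.0 - mask) * led[:, None, None, :]
    return subframes.mean(axis=0)


def loss(target, scene, dmd, led):
    """Sum of squared errors between the perceived and target images."""
    return float(np.sum((render(scene, dmd, led) - target) ** 2))


def greedy_dmd_sweep(target, scene, dmd, led):
    """One coordinate-descent pass over the binary patterns, LED colors fixed.

    For each subframe, every pixel picks whichever mirror state gives the
    smaller squared error while the other subframes stay unchanged.
    """
    dmd = dmd.copy()
    T = dmd.shape[0]
    total = render(scene, dmd, led) * T  # sum over all subframes
    for t in range(T):
        mask = dmd[t][..., None].astype(float)
        current = mask * scene + (1.0 - mask) * led[t]
        rest = total - current  # contribution of the other subframes
        err_scene = np.sum(((rest + scene) / T - target) ** 2, axis=-1)
        err_led = np.sum(((rest + led[t]) / T - target) ** 2, axis=-1)
        dmd[t] = (err_scene <= err_led).astype(dmd.dtype)
        new_mask = dmd[t][..., None].astype(float)
        total = rest + new_mask * scene + (1.0 - new_mask) * led[t]
    return dmd


# Toy example: an opaque red virtual square occluding a random scene.
rng = np.random.default_rng(0)
T, H, W = 4, 4, 4
scene = rng.uniform(0.2, 1.0, size=(H, W, 3))
target = scene.copy()
target[1:3, 1:3] = [1.0, 0.0, 0.0]

led = np.tile(np.array([1.0, 0.0, 0.0]), (T, 1))  # red LED in every subframe
dmd0 = np.ones((T, H, W), dtype=np.uint8)         # start fully see-through

initial_loss = loss(target, scene, dmd0, led)
optimized = greedy_dmd_sweep(target, scene, dmd0, led)
final_loss = loss(target, scene, optimized, led)
```

In this toy case the sweep switches the mirrors inside the square to the LED state in every subframe and leaves the rest see-through, so the virtual square fully blocks the scene behind it with a hard edge. Targets that need intermediate colors or partial transparency would require different LED colors per subframe, which is where the continuous part of the factorization comes in.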