CITATION

R. Konrad, E.A. Cooper, and G. Wetzstein. Novel Optical Configurations for Virtual Reality: Evaluating User Preference and Performance with Focus-tunable and Monovision Near-eye Displays. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’16), 2016.

BibTeX

@ARTICLE{Konrad:2016, author = "R. Konrad and E.A. Cooper and G. Wetzstein", title = "Novel Optical Configurations for Virtual Reality: Evaluating User Preference and Performance with Focus-tunable and Monovision Near-eye Displays", journal = "Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’16)", year = "2016" }

Acknowledgements

We thank Tom Malzbender, Bennett Wilburn, and Marty Banks for fruitful discussions. This project was generously supported by Intel, Meta, Huawei, and Google.

FAQ

Q: What causes discomfort in VR/AR?

A: There are several sources of discomfort in VR/AR experiences. The lack of vestibular cues (our sense of balance and acceleration), for example, leads to symptoms similar to motion sickness when we see fast motion in the virtual environment. The two cues, visual and vestibular, send conflicting signals to the brain: one tells the brain that we are in motion, the other tells it that we are not. Another source of discomfort is the mismatch between vergence and accommodation cues. These are two different cues that our brain uses to sense depth, but only the vergence cues are accurately produced by current-generation VR/AR displays.

Whenever different sensory cues do not match, the brain “knows” that something is wrong. It seems that our brain has evolved to treat mismatching sensory cues as a possible sign of poisoning. Our body’s natural response is what we summarize as sickness. Symptoms include nausea, diplopic (double) vision, visual discomfort, fatigue, eyestrain, headaches, and compromised image quality, and the mismatch may even lead to pathologies in the developing visual system of children.

Q: What is the vergence-accommodation conflict?

A: Much like how a camera can adjust its lens to bring objects at different distances into focus, people’s eyes have various muscles that are used to enable us to see clearly across a range of depths. During our day-to-day lives, these muscles work together constantly (and below our level of consciousness) to ensure that our vision is clear and in focus. To accomplish this, one set of muscles ensures that both of our eyes point at the same object (so that we don’t have double vision). This eye movement is called vergence. Another muscle, inside each eye, adjusts the focus of the eye’s lens so that this object is also in focus. This process is called accommodation.

The vergence-accommodation conflict refers to the fact that current-generation VR/AR displays can only correctly drive the vergence response as we explore a simulated environment, but not the accommodative response. Because the images are presented on screens at a fixed optical distance, accommodation to keep the images in focus has to stick to a fixed distance, whereas vergence to fixate different objects in depth varies.

It does not matter how close or far the screen is. As long as the magnified image of the screen is at a fixed optical distance and the simulated objects are at variable vergence distances, a conflict of our depth cues will result. The amount of the conflict, however, depends on the distance between the screen’s virtual image and the simulated objects that the user looks at. For example, in the case of the Oculus DK2 the screen is optically placed at around 1.3 m, and the strongest conflict is induced within 0.5 m, essentially within arm’s reach. The screen appears at 1.3 m because you never look at the microdisplay directly but through the lens, which not only magnifies the image but also makes it appear at a larger distance.
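As a rough illustration, the size of the conflict can be expressed in diopters (inverse meters), the unit commonly used for focus distances. The sketch below is not taken from the paper; the function names are hypothetical, and the only numbers from the text are the 1.3 m screen distance and the 0.5 m object distance.

```python
def diopters(distance_m: float) -> float:
    """Convert a viewing distance in meters to diopters (1/m)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def va_conflict(screen_distance_m: float, object_distance_m: float) -> float:
    """Vergence-accommodation mismatch in diopters.

    Accommodation is held at the fixed optical distance of the screen,
    while vergence follows the simulated object.
    """
    return abs(diopters(object_distance_m) - diopters(screen_distance_m))


# Screen optically placed at about 1.3 m, as quoted for the Oculus DK2.
SCREEN_M = 1.3
for object_m in (0.25, 0.5, 1.3, 5.0):
    print(f"object at {object_m:4.2f} m: conflict {va_conflict(SCREEN_M, object_m):.2f} D")
```

With these numbers, an object at 0.5 m gives a mismatch of about 1.23 D, while an object at 5 m gives only about 0.57 D. This is why the conflict is strongest for nearby objects.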

Q: Why is it important to provide correct focus cues and to overcome the vergence-accommodation conflict?

A: The benefits of providing correct or nearly correct focus cues include not only increased visual comfort, but also improvements in 3D shape perception, stereoscopic correspondence matching, and discrimination of larger depth intervals. Thus, significant efforts have been made to engineer focus-supporting displays. However, all technologies that can potentially support focus cues involve undesirable tradeoffs: they compromise image resolution, device form factor, brightness, contrast, or other important display characteristics. These tradeoffs pose substantial challenges for high-quality image display with practical, wearable displays. No practical solution exists today!

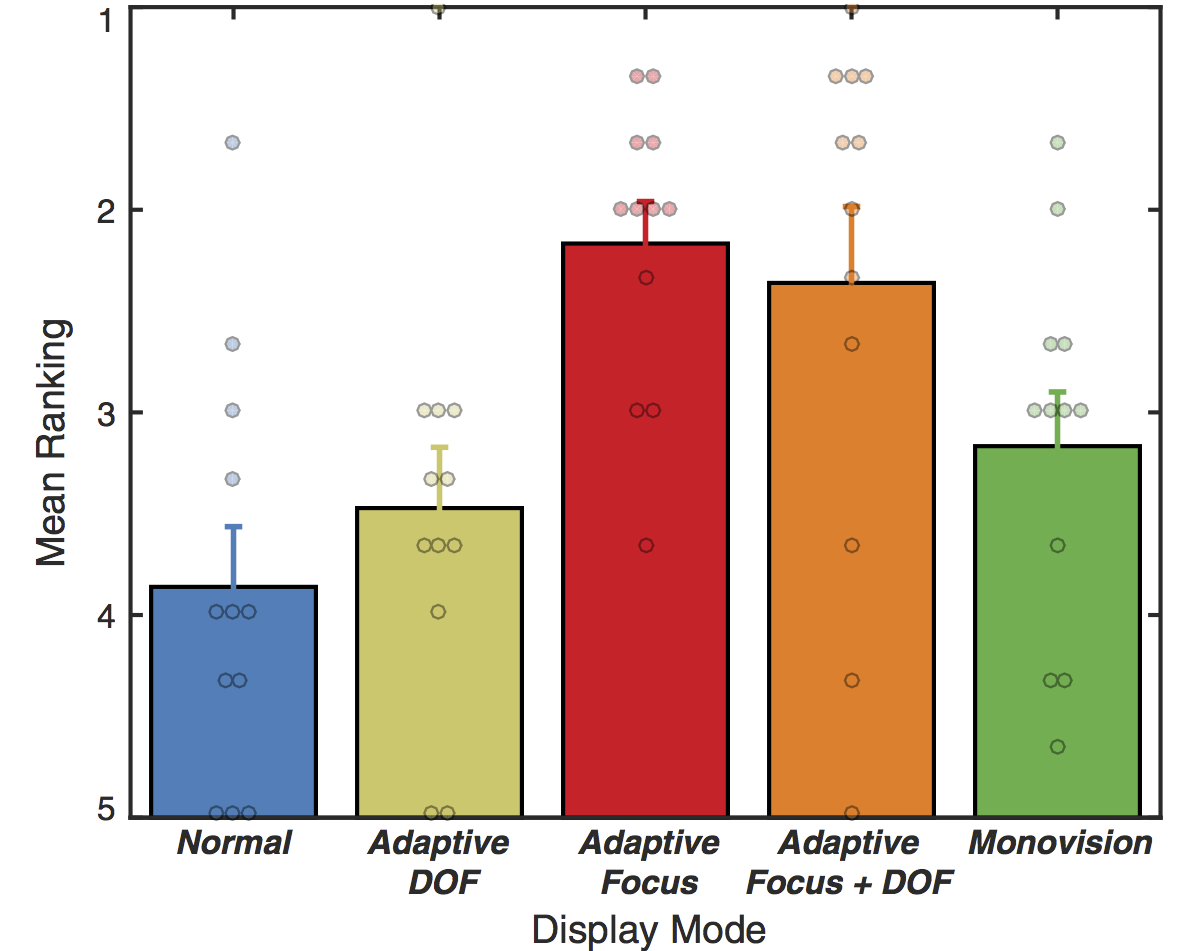

Q: What are the main insights of your study?

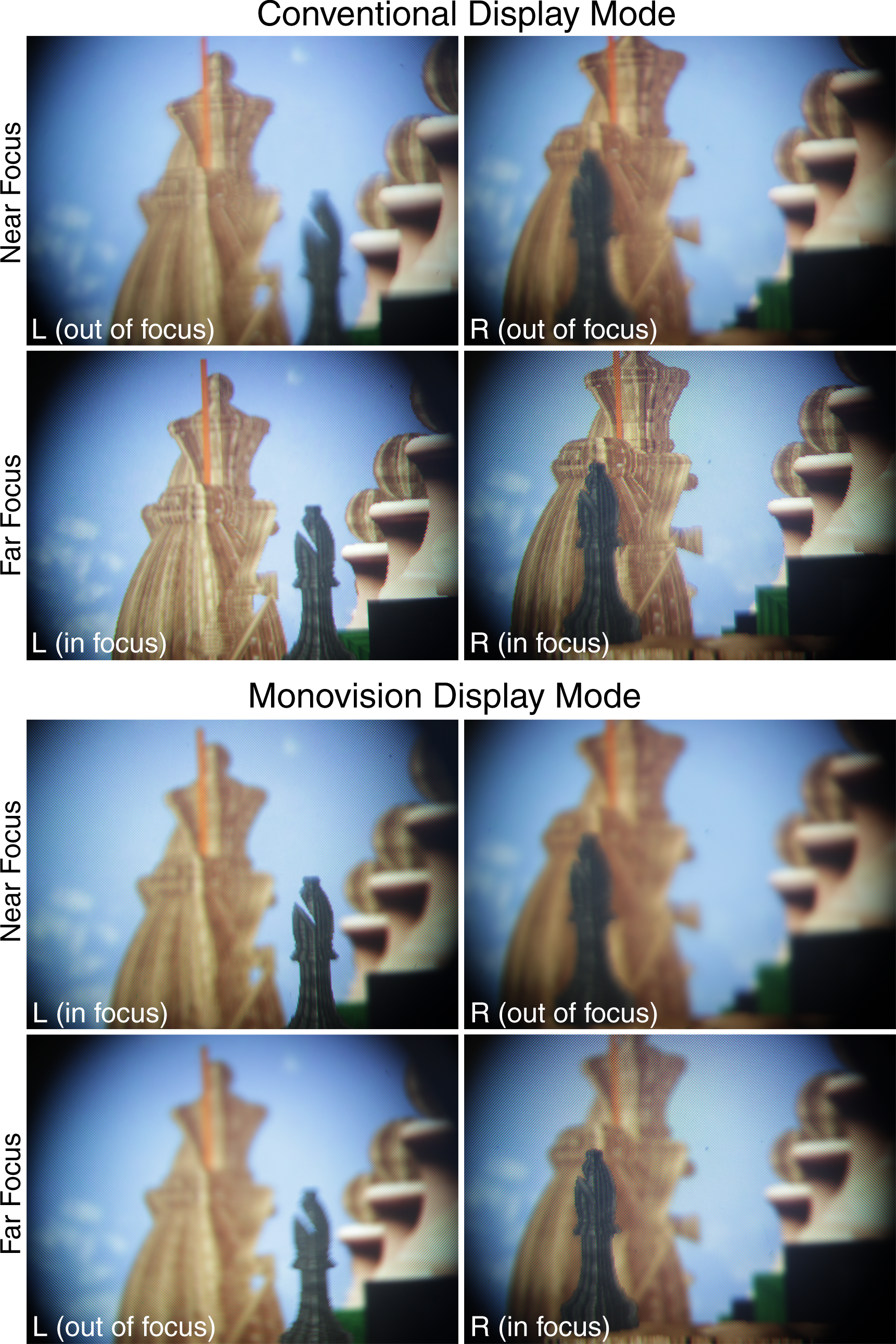

A: Our study combined experiments that use a new technology (focus-tunable optics) and an old technology (monovision). We also evaluated a software-only approach that renders depth of field into the images so as to approximate retinal blur. Both the focus-tunable mode and the monovision mode demonstrate improvements over the conventional display, but both require optical changes to existing VR/AR displays. The software-only solution (i.e. depth of field rendering) proved ineffective.

The focus-tunable mode provided the best gain over conventional VR/AR displays. We implemented it with focus-tunable optics (programmable liquid lenses), but it could also be implemented by actuating (physically moving) the microdisplay in the VR/AR headset. The display would only have to move a few millimeters, and it would not have to move very fast.
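To see why a few millimeters of travel is plausible, here is a minimal sketch based on the thin-lens magnifier equation. It makes several assumptions that are not from the paper: an ideal thin lens, a hypothetical focal length of 40 mm, and an eye placed at the lens (eye relief ignored). The function names are illustrative only.

```python
import math


def display_distance_mm(focal_length_mm: float, image_distance_mm: float) -> float:
    """Lens-to-display distance that places the virtual image at image_distance_mm.

    Thin-lens magnifier: 1/d_display - 1/d_image = 1/f, with both distances
    measured as positive magnitudes on the display side of the lens.
    """
    if math.isinf(image_distance_mm):
        return focal_length_mm  # display in the focal plane -> image at infinity
    return focal_length_mm * image_distance_mm / (focal_length_mm + image_distance_mm)


def virtual_image_distance_mm(focal_length_mm: float, display_mm: float) -> float:
    """Inverse relation: where the virtual image appears for a given display position."""
    if display_mm <= 0 or display_mm > focal_length_mm:
        raise ValueError("display must sit between the lens and its focal plane")
    if display_mm == focal_length_mm:
        return math.inf
    return focal_length_mm * display_mm / (focal_length_mm - display_mm)


F_MM = 40.0  # hypothetical focal length, chosen only for illustration

near = display_distance_mm(F_MM, 250.0)     # virtual image at 0.25 m
far = display_distance_mm(F_MM, math.inf)   # virtual image at infinity
print(f"display at {near:.2f} mm for 0.25 m, {far:.2f} mm for infinity")
print(f"total travel: {far - near:.2f} mm")
```

Under these assumptions, sweeping the virtual image from 0.25 m to infinity takes roughly 5.5 mm of display travel. A real headset would need its actual lens prescription and eye relief to get exact figures.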

Q: How does this study inform next-generation VR/AR displays?

A: Based on our study, we make several recommendations to near-eye display manufacturers or hobbyists:

1. With conventional optical (magnifier) designs, you need to place the accommodation plane somewhere. VR consumer displays often place it at 1.3-1.7 m, but the right distance really depends on your application. If you mostly look at virtual objects that are close, place the accommodation plane close. If you mostly look at objects that are far, place it far. If the content could be at any distance, place it at 1.3-1.7 m.

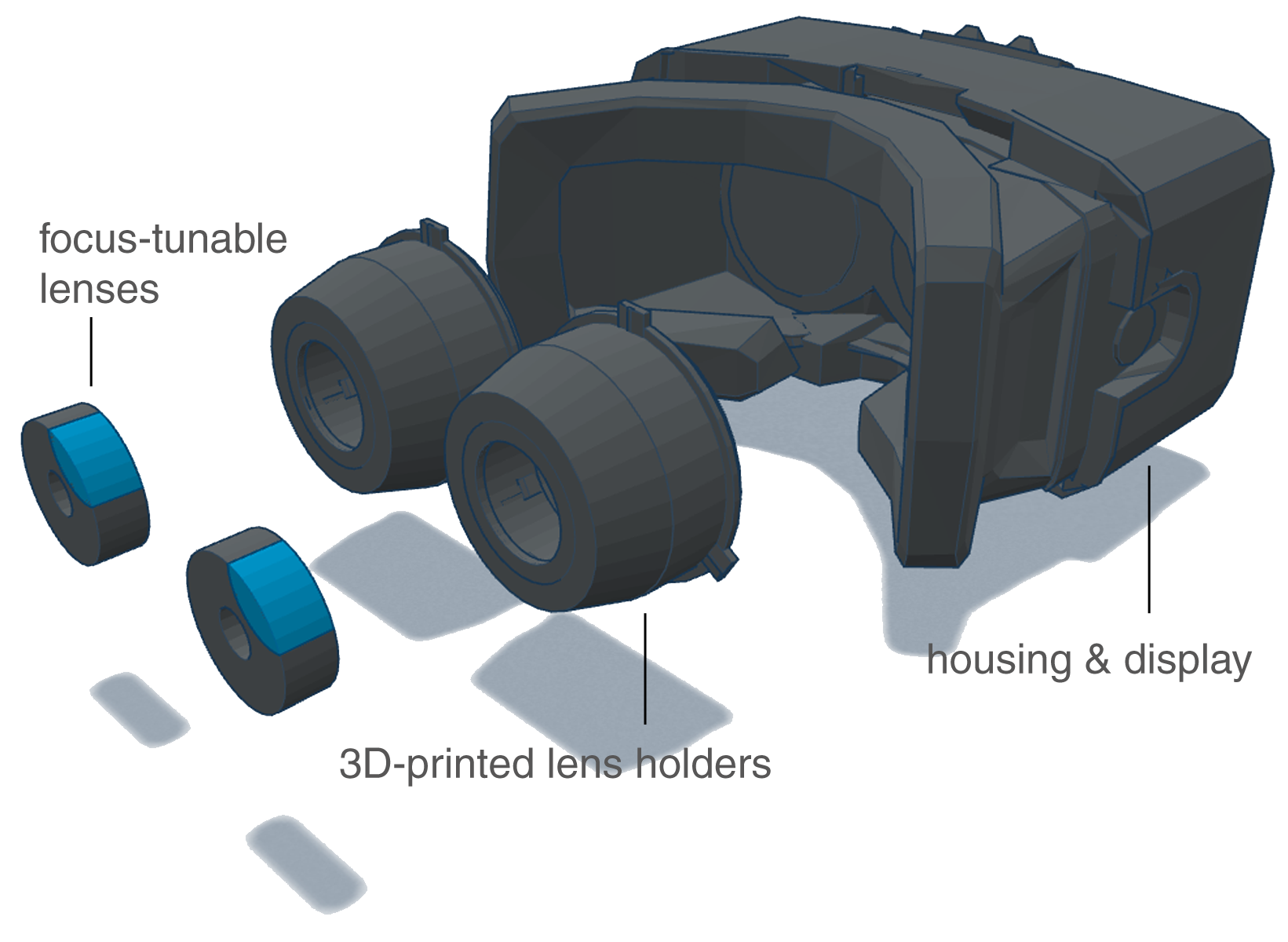

2. The focus-tunable display mode scored the best in our studies. If you can afford to dynamically change the accommodation plane depending on what the user is looking at, that would be great! We used focus-tunable lenses to achieve this because they let us switch between display modes easily, which is great for user studies. In practice, you probably want to use actuated microdisplays instead: focus-tunable lenses have a small diameter, which results in a small field of view; they have a lot of aberrations; and they are relatively heavy and power hungry. If you build a product, use actuated displays to achieve the same effect while maintaining field of view, image quality, etc.

3. If you have eye tracking (i.e. gaze tracking), dynamically adjust the accommodation plane depending on what the user is looking at. We call this mode gaze-contingent focus. With eye tracking in place, this would probably be the engineering solution of choice to achieve visually comfortable VR/AR experiences and plausible focus cues. You can either track the vergence angle of the eyes or the gaze of a single eye combined with the depth map value of that location in the computer-generated image.

4. Monovision does not require eye tracking and is much simpler to engineer than gaze-contingent focus: simply use a different lens for each eye. It has been shown to improve visual clarity and efficiency for some tasks, but this mode requires more long-term studies to find the right applications in VR/AR. An immediate application would be to support presbyopic viewers without having them wear their glasses or contact lenses, which is already a huge benefit for many people. We believe there are other applications for monovision as well, but this needs further investigation. One conclusive statement we can already make is that monovision does not seem to do worse than the conventional mode, which is a good starting point for future research.
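The two tracking options mentioned for gaze-contingent focus in recommendation 3 can be sketched as follows. This is an illustrative outline, not the implementation used in the study. It assumes symmetric fixation straight ahead, a hypothetical interpupillary distance of 64 mm, a depth map stored as rows of distances in meters, and a hypothetical focus range of 0 to 4 diopters for the tunable lens or actuator.

```python
import math


def distance_from_vergence(vergence_angle_rad: float, ipd_m: float = 0.064) -> float:
    """Fixation distance from the angle between the two lines of sight.

    Assumes the fixated point lies straight ahead, midway between the eyes.
    """
    if vergence_angle_rad <= 0:
        return math.inf  # parallel lines of sight: looking at infinity
    return (ipd_m / 2.0) / math.tan(vergence_angle_rad / 2.0)


def distance_from_depth_map(depth_map, gaze_xy) -> float:
    """Fixation distance from one eye's gaze position and the rendered depth map."""
    x, y = gaze_xy
    return depth_map[y][x]


def focus_setting_diopters(distance_m: float, min_d: float = 0.0, max_d: float = 4.0) -> float:
    """Target accommodation plane in diopters, clamped to the device's range."""
    target = 0.0 if math.isinf(distance_m) else 1.0 / distance_m
    return min(max(target, min_d), max_d)


# Option 1: binocular tracking gives a vergence angle.
angle = 2.0 * math.atan(0.032 / 0.5)  # eyes converged on a point 0.5 m away
print(focus_setting_diopters(distance_from_vergence(angle)))

# Option 2: monocular gaze plus the depth value at that pixel.
depth_map = [[2.0, 2.0], [0.5, 1.0]]  # tiny made-up depth map, in meters
print(focus_setting_diopters(distance_from_depth_map(depth_map, (1, 1))))
```

In practice the depth-map route avoids the noise that vergence estimates tend to have at larger distances, but it relies on the gaze estimate landing on the correct pixel.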

Q: What is the ultimate VR/AR display?

A: The “ultimate” VR/AR display would have to emit the full 4D light field corresponding to a physical scene into the viewer’s eye. The light field models the flow of light rays from the (virtual) scene through the viewer’s pupil and onto their retina. You can think of the light field as a “hologram”, but people usually use “hologram” to refer to wavefronts that are created through diffraction, whereas the light field is a somewhat more general term that is not attached to one particular technology.

An implementation of monovision on a device similar to a Google Cardboard, illustrating the simplicity of the concept and implementation. It is enough to use a different lens in front of each eye to gain the effect.

Example screenshots from the user studies. For the user preference study (left), we ask users to fixate on a target moving periodically in depth within a static scene. The target shows a number with the user’s ranking of the current display mode, while the character is randomly associated with the display mode. For the visual clarity (center) and depth judgment (right) tasks, we display small targets that are magnified in the insets.