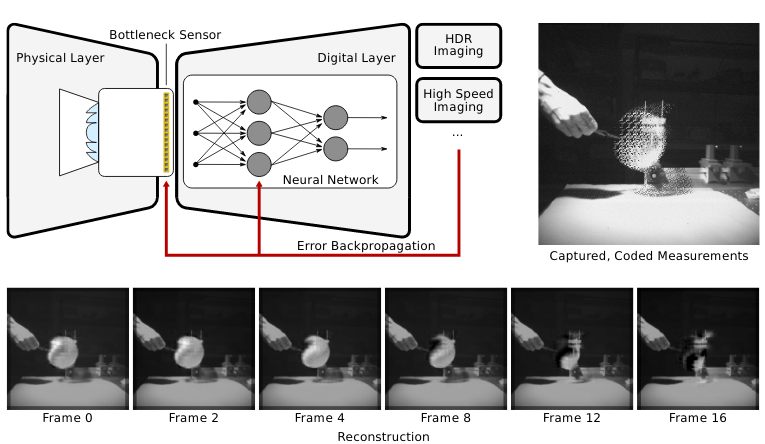

We propose learning the pixel exposures of a sensor, subject to its hardware constraints, jointly with decoders that reconstruct HDR images and high-speed videos from the resulting coded images.

The pixel exposures and the decoder that reconstructs images from them are learnt jointly in an end-to-end encoder–decoder framework. Training accounts for the sensor's hardware constraints, so the learnt shutter functions can be compiled down and run on a real programmable sensor–processor. This page describes the project, presented at ICCP 2020 and published in T-PAMI in July 2020.
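Because the learnt shutter functions must be executable on the sensor, each pixel can only be "integrating" or "not integrating" in a given sub-frame. A common way to keep such binary codes trainable is to optimize real-valued logits, use a sigmoid relaxation during training, and threshold to 0/1 for deployment. The sketch below assumes exactly that scheme, plus a small shutter tile repeated across the sensor; the function names (`relaxed_shutter`, `binary_shutter`, `tile_shutter`), the tile size, and the tiling itself are illustrative assumptions, not the paper's exact parametrization.

```python
import numpy as np


def relaxed_shutter(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    """Soft shutter in [0, 1] for training (sigmoid relaxation of a binary code).

    Uses sigmoid(x) = 0.5 * (1 + tanh(x / 2)), which avoids exp overflow.
    Lowering `temperature` pushes the values toward the hard 0/1 code.
    """
    return 0.5 * (1.0 + np.tanh(logits / (2.0 * temperature)))


def binary_shutter(logits: np.ndarray) -> np.ndarray:
    """Hard 0/1 code for deployment: a pixel either integrates in a sub-frame or not."""
    return (logits > 0).astype(np.uint8)


def tile_shutter(tile: np.ndarray, height: int, width: int) -> np.ndarray:
    """Repeat a (T, th, tw) shutter tile to cover a (T, height, width) sensor, cropping overflow."""
    _, th, tw = tile.shape
    reps_h = -(-height // th)  # ceiling division
    reps_w = -(-width // tw)
    return np.tile(tile, (1, reps_h, reps_w))[:, :height, :width]


# Hypothetical example: 16 sub-frames, an 8x8 learnable tile, a 64x64 sensor.
rng = np.random.default_rng(0)
logits = rng.normal(size=(16, 8, 8))
code = tile_shutter(binary_shutter(logits), 64, 64)
print(code.shape)  # (16, 64, 64)
```

Under these assumptions, only the 16 × 8 × 8 logits are optimized, and the hard code is what would be compiled to the sensor; how the actual system enforces its constraints is described in the paper.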

ABSTRACT

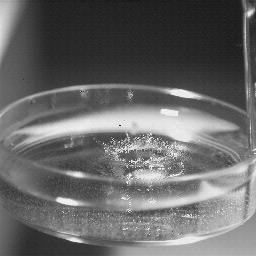

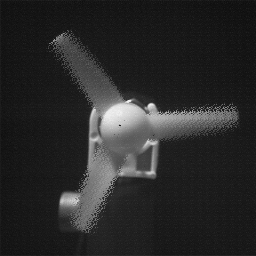

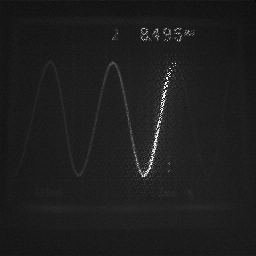

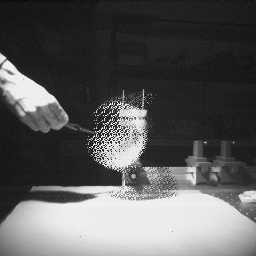

Camera sensors rely on global or rolling shutter functions to expose an image. This fixed-function approach severely limits the sensors’ ability to capture high-dynamic-range (HDR) scenes and resolve high-speed dynamics. Spatially varying pixel exposures have been introduced as a powerful computational photography approach to optically encode irradiance on a sensor and computationally recover additional information of a scene, but existing approaches rely on heuristic coding schemes and bulky spatial light modulators to optically implement these exposure functions. Here, we introduce neural sensors as a methodology to optimize per-pixel shutter functions jointly with a differentiable image processing method, such as a neural network, in an end-to-end fashion. Moreover, we demonstrate how to leverage emerging programmable and re-configurable sensor–processors to implement the optimized exposure functions directly on the sensor. Our system takes specific limitations of the sensor into account to optimize physically feasible optical codes, and we demonstrate state-of-the-art performance for HDR and high-speed compressive imaging in simulation and with experimental results.
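The idea of optically encoding irradiance with spatially varying pixel exposures can be illustrated with a simple forward model: each pixel sums the sub-frames during which its shutter is open, then saturates at its full well. The sketch below assumes a linear, noise-free sensor with idealized clipping, and the names `coded_measurement` and `naive_radiance_estimate` are hypothetical. The per-pixel division by exposure time is a classic heuristic HDR baseline for static scenes, not the neural decoder from the paper.

```python
import numpy as np


def coded_measurement(frames: np.ndarray, shutter: np.ndarray, full_well: float = 1.0) -> np.ndarray:
    """Single coded image from T sub-frames under a per-pixel binary shutter.

    frames:  (T, H, W) linear irradiance per sub-frame (noise-free, arbitrary units)
    shutter: (T, H, W) 0/1 code; 1 means the pixel integrates during that sub-frame
    """
    integrated = (shutter * frames).sum(axis=0)
    return np.clip(integrated, 0.0, full_well)  # idealized full-well saturation


def naive_radiance_estimate(measurement: np.ndarray, shutter: np.ndarray, full_well: float = 1.0) -> np.ndarray:
    """Heuristic HDR baseline for a static scene: divide by per-pixel exposure time.

    Saturated or never-exposed pixels carry no usable value and are returned as NaN;
    a learnt decoder would instead have to infer them from context.
    """
    exposure = shutter.sum(axis=0).astype(float)
    valid = (exposure > 0) & (measurement < full_well)
    estimate = np.full(measurement.shape, np.nan)
    estimate[valid] = measurement[valid] / exposure[valid]
    return estimate


# Static scene: a dim pixel and a bright pixel, 4 sub-frames.
radiance = np.array([[0.05, 0.5]])
frames = np.broadcast_to(radiance, (4, 1, 2))
shutter = np.ones((4, 1, 2), dtype=np.uint8)
shutter[1:, 0, 1] = 0  # bright pixel exposed for 1 sub-frame only
y = coded_measurement(frames, shutter)
print(naive_radiance_estimate(y, shutter))  # close to `radiance` for both pixels
```

With an all-ones shutter, the bright pixel would integrate 2.0, clip at the full well, and come back as NaN; giving it a shorter exposure is what lets the dim and bright pixels both be recovered. For moving scenes, the same `coded_measurement` mixes different sub-frames into one image, which is the compressive video setting.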

T-PAMI 2020 ARTICLE

Files

- technical paper (pdf)

- supplementary material (pdf)

BibTeX

@article{Martel:2020:NeuralSensors,

author = {J.N.P. Martel and L.K. M{\"u}ller and S.J. Carey and P. Dudek and G. Wetzstein},

title = {{Neural Sensors: Learning Pixel Exposures for HDR Imaging and Video Compressive Sensing with Programmable Sensors}},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

volume = 42,

number = 7,

pages = {1642--1653},

year = {2020},

}

Citation

J.N.P. Martel, L.K. Mueller, S.J. Carey, P. Dudek, G. Wetzstein, “Neural Sensors: Learning Pixel Exposures for HDR Imaging and Video Compressive Sensing with Programmable Sensors”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 7, pp. 1642–1653, July 2020.