ABSTRACT

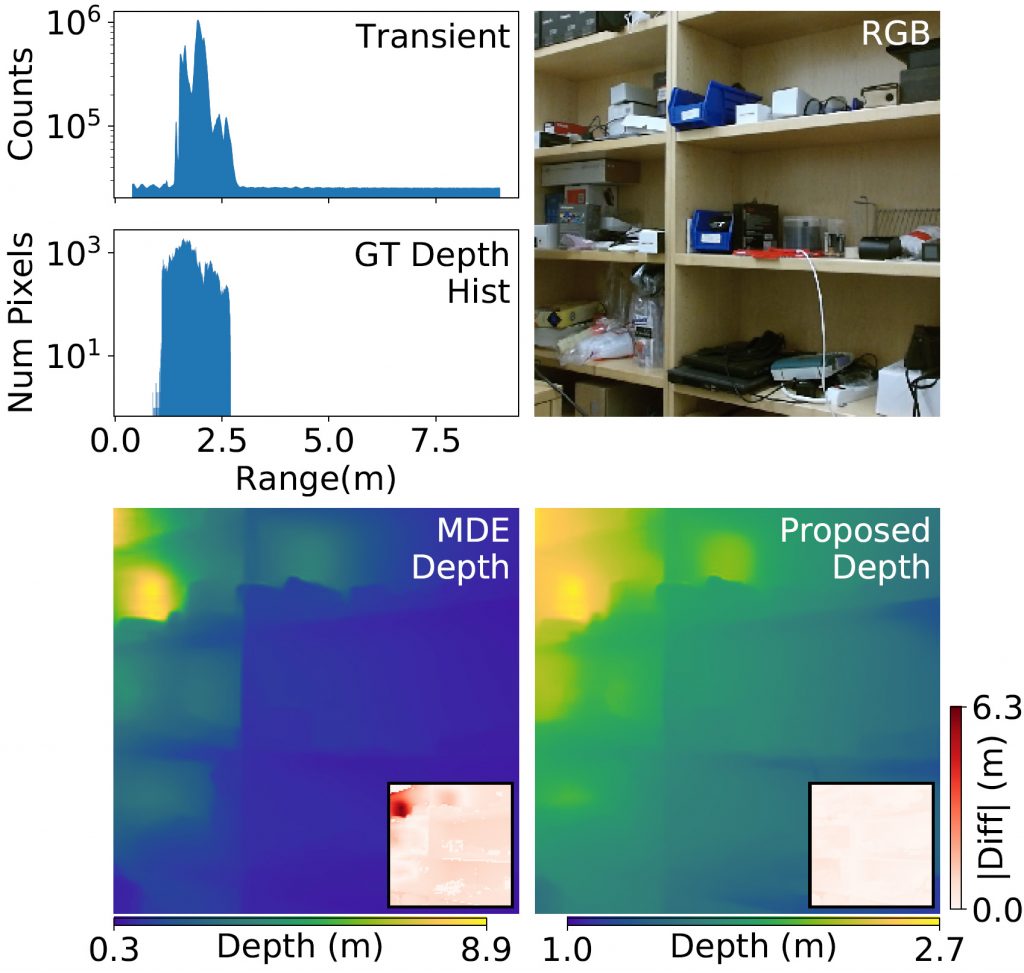

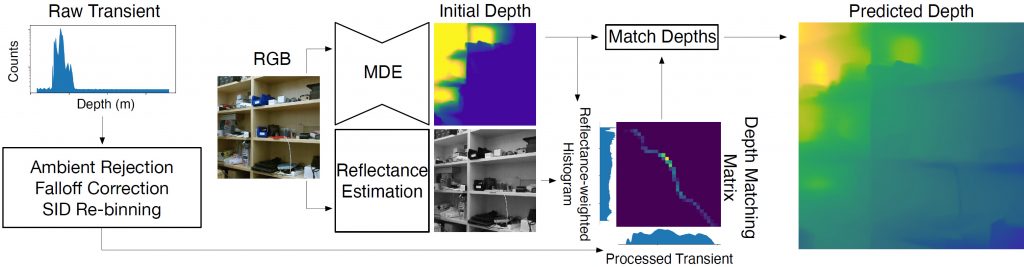

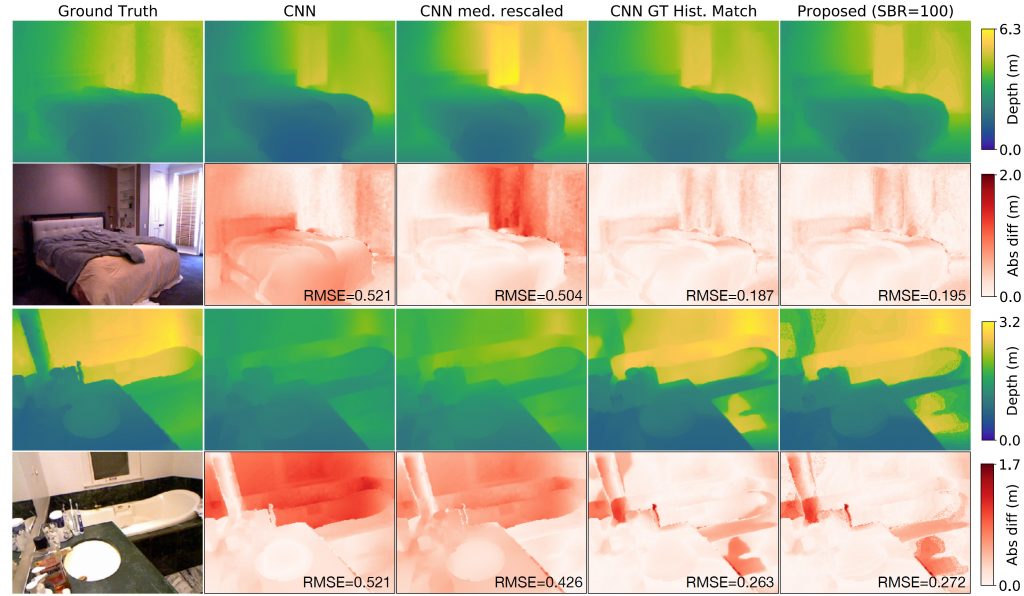

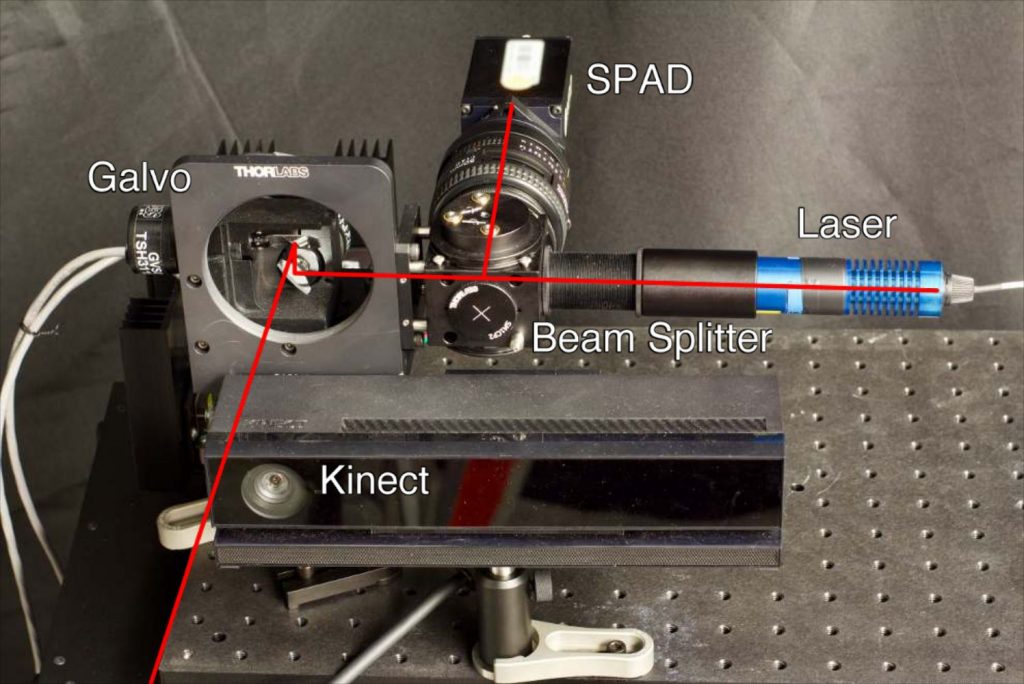

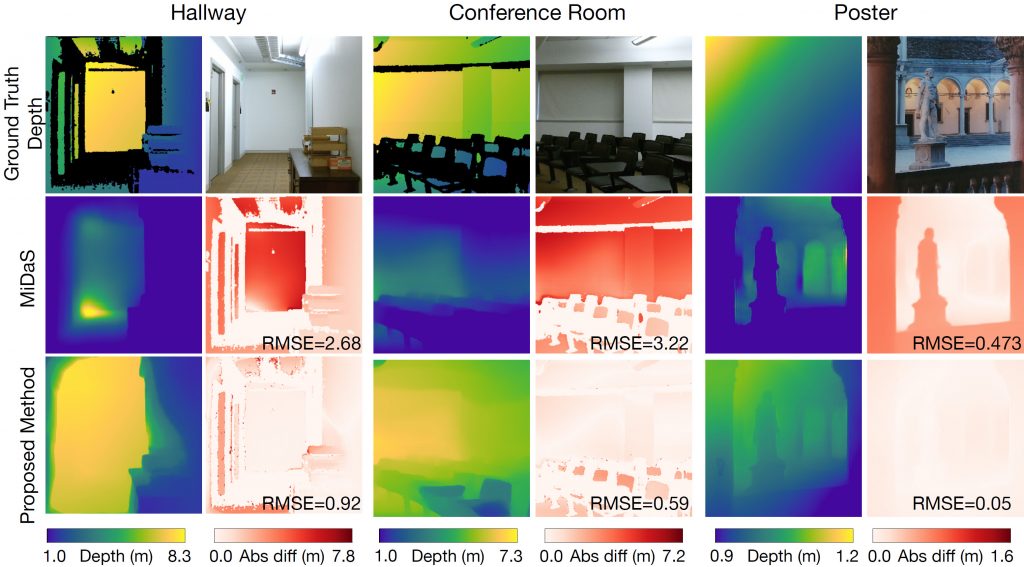

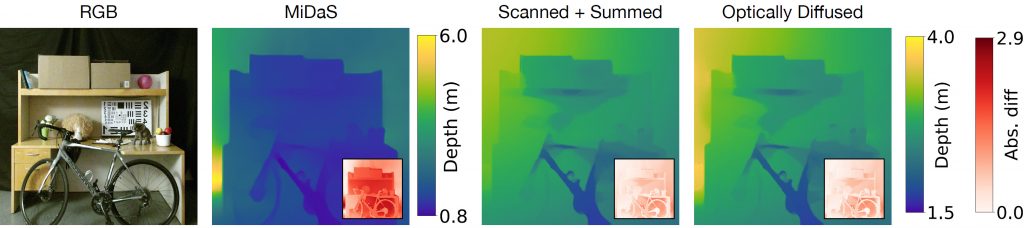

Monocular depth estimation algorithms successfully predict the relative depth order of objects in a scene. However, because of the fundamental scale ambiguity associated with monocular images, these algorithms fail at correctly predicting true metric depth. In this work, we demonstrate how a depth histogram of the scene, which can be readily captured using a single-pixel time-resolved detector, can be fused with the output of existing monocular depth estimation algorithms to resolve the depth ambiguity problem. We validate this novel sensor fusion technique experimentally and in extensive simulation. We show that it significantly improves the performance of several state-of-the-art monocular depth estimation algorithms.
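As a rough illustration of how a depth histogram can pin down metric scale, the sketch below remaps a relative depth map so that its distribution follows a measured histogram. It assumes histogram matching as the fusion step and assumes the transient has already been converted into per-depth pixel counts; the function name `match_depth_to_histogram` and its parameters are hypothetical and are not taken from the paper's code.

```python
import numpy as np


def match_depth_to_histogram(pred_depth, hist_counts, bin_edges):
    """Remap a relative depth map so its values follow a measured depth histogram.

    pred_depth  : 2D array, monocular prediction (correct ordering, unknown scale)
    hist_counts : 1D array of length B, measured counts per depth bin (assumed input)
    bin_edges   : 1D array of length B + 1, metric depth of the bin edges
    """
    flat = np.asarray(pred_depth, dtype=np.float64).ravel()

    # Quantile of every pixel within the predicted depth map, in (0, 1).
    order = np.argsort(flat, kind="stable")
    quantiles = np.empty(flat.size, dtype=np.float64)
    quantiles[order] = (np.arange(flat.size) + 0.5) / flat.size

    # Cumulative distribution of the measured histogram at the bin edges.
    # A tiny floor keeps the CDF strictly increasing when some bins are empty.
    counts = np.asarray(hist_counts, dtype=np.float64) + 1e-12
    cdf = np.concatenate([[0.0], np.cumsum(counts)])
    cdf /= cdf[-1]

    # Invert the measured CDF: each quantile maps to a metric depth.
    metric = np.interp(quantiles, cdf, np.asarray(bin_edges, dtype=np.float64))
    return metric.reshape(np.shape(pred_depth))
```

Because only the ordering of the predicted depths is used, any monotonic distortion of the prediction (including an unknown global scale or offset) is undone, up to the bin width of the histogram. Errors in the predicted depth ordering are not corrected by this step.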

CITATION

M. Nishimura, D. B. Lindell, C. Metzler, G. Wetzstein, “Disambiguating Monocular Depth Estimation with a Single Transient”, European Conference on Computer Vision (ECCV), 2020.

BibTeX

@article{Nishimura:2020,
  author={M. Nishimura and D. B. Lindell and C. Metzler and G. Wetzstein},
  journal={European Conference on Computer Vision (ECCV)},
  title={{Disambiguating Monocular Depth Estimation with a Single Transient}},
  year={2020},
}

ACKNOWLEDGEMENTS

D.L. was supported by a Stanford Graduate Fellowship. C.M. was supported by an ORISE Intelligence Community Postdoctoral Fellowship. G.W. was supported by an NSF CAREER Award (IIS 1553333), a Sloan Fellowship, the KAUST Office of Sponsored Research through the Visual Computing Center CCF grant, and a PECASE from the ARL.